Towards a Modern LIMS: Dynamic Tables, No-Code Databases, and Serverless Validations

Scientific data that demands the flexibility of spreadsheets with the integrity of databases

Back in 2018 I was tasked with implementing a Laboratory Information Management System (LIMS) for a genomic sequencing core facility at Weill Cornell Medicine. A LIMS is essentially a database with a few key features:

- Dynamic Tables: sequencing is a bleeding edge field and it’s not unusual to modify columns and tables every month as new kits and methods are developed

- Strong Typing: longs, floats, decimals, control over rounding and assigning units of measurement is obligatory for scientific data

- Validations: sequencing machines are expensive to run — values and dependencies need to be reliably checked and and cross checked

- Spreadsheet-like UI: spreadsheets are ubiquitous in wet labs — the web GUI must support copy-pasting of rows and columns from desktop software

After surveying the field and meeting with other sequencing cores we found the commercial offerings were all outdated, closed-source proprietary systems that were difficult to extend and required a lot of work to customize to our workflow. As for open source, the only real contender at the time was Sequencescape from the prestigious Sanger institute — it’s a mature Rails app with background jobs and plenty of features and hooks that make it more easily extended. The drawback is that it’s tightly tied to their specific in-house workflow. For example, adding a new custom column or table requires writing code, stopping the server and migrating the schema which is not something we want to be doing every month. We also looked into AirTable but at the time integrations were limited and it was more marketing/business focused — for example it did not support the types and units of measurement required for scientific data.

It didn’t take long for the institutional NIH syndrome to kick-in and we were on our way to developing a flexible, extensible LIMS from scratch, or in today’s terms: a No-Code Database. The objective was to create something reminiscent of the old MS Access GUIs that allow anyone to build out a full featured database with a few clicks. There were other cores we worked with that had similar requirements so it was important to keep the system as generic, flexible and adaptable as possible — it should work with microscopy data as easily as it works with sequencing data as easily as it works with Thai cooking recipes. Along the same lines, it should also support multitenancy to minimize our maintenance and sysadmin overhead.

Choosing a database: RDBMS vs Document vs Graph

Document databases are appealing for their fluid data models — dynamically adding new properties and collections from a UI each month would be a breeze. However this upside is also the downside: without a structured schema it becomes a lot more work to maintain data integrity, as was discovered at Shippable. Graph databases offer the best of both worlds in terms of providing a structured data model with relational integrity that is still very flexible, but in 2018 there was just not enough support in the community to make this a viable option. For example, we wanted to use open source BI tools (eg Metabase, Apache Superset, etc) for visualizing some of our data and at the time there was no easy way to connect any of these apps to a graph DB. So we settled on the old trusty RDBMS and chose PostgreSQL, primarily for JSONB and Schema support (more below).

Multitenant Dynamic Tables and Columns with Postgres

Storing Dynamic Columns in JSONB

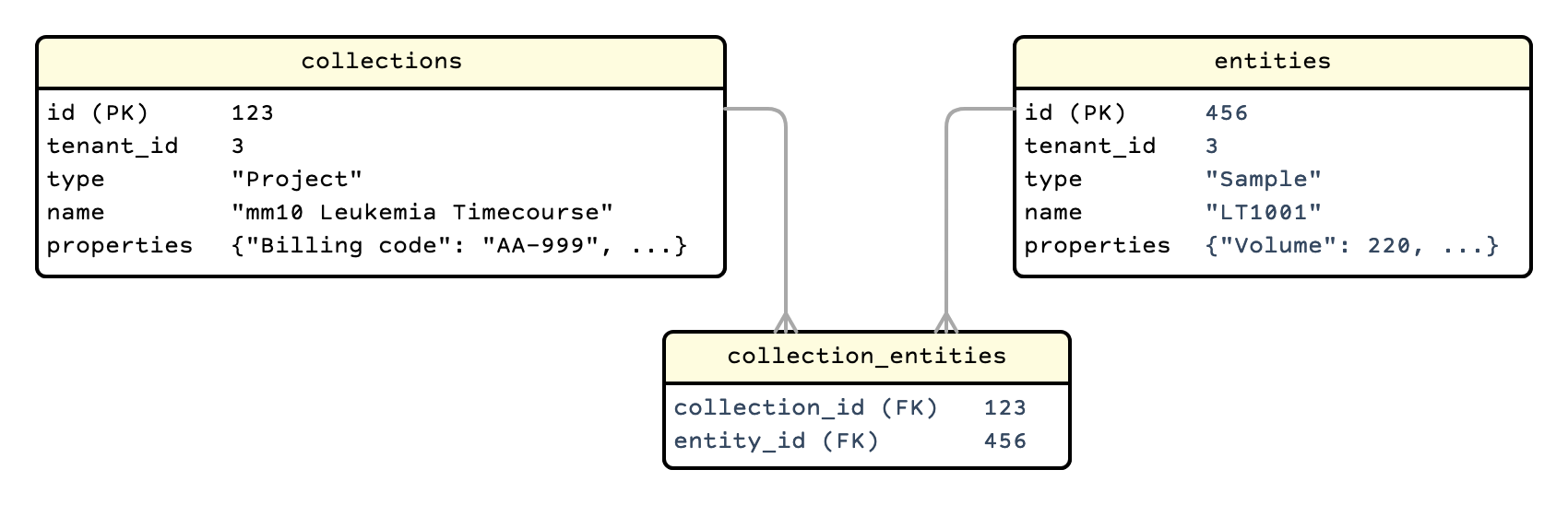

Our first thought was to try some kind of relational-document hybrid where the common base values (IDs, names, modification times, etc) are stored in columns and additional values are all stored in a single JSONB column. For example, a collection might be a sequencing Project and the entities might be the sequencing Samples for that project. A Project can have many Samples and a Sample can have many Projects.

The advantages of this method is that foreign keys can be used to enforce the integrity of high-level relationships (eg. Projects and Samples) while additional custom properties and values can be dynamically added or removed without any disruption. Postgres also provides functions to support the expansion of JSONB key-values into native column queries, which allows for checking/coalescing of missing keys or values implicitly from DB queries rather than writing tedious manual checks in the application logic.

The two main disadvantages we found were that queries got very complicated very quickly and as soon as the JSONB fields got larger (>5000 characters) it didn’t scale — the performance was unbearably slow, especially when trying to GROUP BY a JSONB value. We tried tuning TOAST and found refactoring queries with CTE patterns made a big difference but after all our optimization it was still taking over 5 seconds to complete a simple GROUP BY of a JSONB value in a table with just 100K records.

Isolating Tenant Tables with Postgres Schemas

A Schema in Postgresql is not just the data model but it’s a namespace feature that contains database objects such as tables, columns, indexes, etc. that in turn allows data belonging to different tenants to be isolated within a single database. So if we’re making an app to dynamically build out a database, why not just use Postgres to create and alter the tables in a dedicated schema?

If we’re writing code to directly CREATE and ALTER tables then we could no longer use our ORM (we were using Active Record with Rails) leaving us to write out raw SQL for every operation like cavepeople. Every table and column also has a whole bunch of metadata (eg. most numeric columns also need a unit of measurement) and the app needs to know about the structure and values of this metadata before it can do anything useful. So in addition to losing our ORM we would also need to implement a metadata system to track the tables and columns, which quickly starts to feel like you’re creating a duplicate of the database.

Entity-Attribute-Value Models

This is the classic database (anti-)pattern for dynamic property values that has been best described as giving you enough rope to hang yourself with. Some favorable opinions can be found but it’s well known that beyond the most basic applications, queries quickly become extremely painful to write, slow to execute and impossible to understand.

If we abstract away from the database implementation of EAV models and instead consider it at an object level it becomes more justifiable to our use case of building out multiple dynamic RDBMS:

- An Entity is a DB row: a thing that has an ID and a type or Composition (eg a Project or a Sample)

- Each Entity is linked to one or more Attributes (columns) and each Attribute has an instance of an EntityAttributeValue (a column-row value) — which can also be a link to another Entity (a foreign key)

- The result is that the EAV equivalent of a traditional table (eg. a Samples table) is a collection of all the EntityAttributeValues with the linked Entity matching the specific Composition type (eg. Samples)

At the database level this is a typical EAV mess of numeric keys and mystery values but with our ORM we can work more intuitively and effectively. For example, we can strongly type the attributes (and include measurement units) so at the object level we’re calling .val() but at the database level we’re SELECTing from the column that matches the type (eg the dec_val column instead of the txt_val or date_val column) and applying the corresponding unit conversion functions.

We actually went one step further and borrowed the concept of an Aspect from Alfresco content modelling, so that common Attributes can be grouped together — for example the Measurable Aspect may include Attributes for Molarity, Molecular Weight, Concentration, etc.. With this feature, an Entity can use Aspects to model a lifecycle or workflow, for example before a Sample can be sequenced it must have the Measureable and QualityControlable Aspects.

Postgres Unlogged Tables as Caches

Using objects on top of an EAV model is more intuitive and easier to work with but it doesn’t solve the problem of slow SELECT queries. We are also left with the same limitation we had with Graph DBs: we can not make use of tools that are expecting a relational data structure.

Our solution was to pivot the data from our EAV model into a RDBMS relational structure to act as a cache for queries and external tools. We wrote a function in pure PL/pgSQL that selects all of the EAV data for a specified table (Composition) and pivots it to a regular table structure, including IDs, indexes and foreign keys. We made use of the Unlogged Tables feature because the data is disposable and with a bit of tuning we can build a table of 500K rows in a few seconds.

This means that:

- SELECT queries are offloaded to the cache tables

- Incremental INSERTs and UPDATEs have two steps inside a transaction: (1) write the EntityAspectAttributeValue from the ORM which triggers (2) a write to the corresponding cache table from a custom DAL

- Batch INSERTs and UPDATEs only write the EntityAspectAttributeValues and then rebuild the cache table

- Any changes to dynamic columns or tables also rebuild the cache table

Every instance in the EAV model has a tenant_id which is used to isolate the cache tables to separate Postgres Schemas so they can be accessed with sensible names (eg. weill_cornell.samples) and be secured respectively.

This solution gives us all the advantages of building out multiple, isolated databases at the object level with both the data and metadata in static tables managed by the ORM, while still supporting performant, regular SELECT queries and external tools using the cache tables.

Serverless Validations

Validations are the backbone of scientific workflow — if we can’t cross check values before running a thousand dollar experiment then we may as well be using a note pad. We first tried implementing the validations within the app using a relational model: each Validation was linked to one or more reusable Rules and each Rule contained a logical Ruby expression string to evaluate the values. As validations got more advanced we continued to build-out the relational model. For example we added relations to account for the different levels of validation ie AttribueValues vs Aspects vs Entities vs Compositions.

After a few weeks, what we hoped would be a nice library of reusable validation components configurable from the UI turned into a mess of conditions and exceptions. Serverless, stateless computing such as AWS Lambda is a perfect fit for this kind of discrete functional computation so we started moving the validations over to a serverless cloud implementation and never looked back. Now validations are far more specific for particular cases but this is made up for by the ease of duplicating, editing and deploying new validations on-the-fly from a serverless web console.

Spreadsheet-like UI

We were hoping to find a grid web UI library that could dynamically discover the data model from an API so all we had to do was write the interface on the server-side and plug it in. There were plenty of commercial offerings that worked with statically predefined databases but none that could read the model from a call and fetch the corresponding column headers and values dynamically. A GraphQL Jamstack such as Gridsome or Gatsby looks well suited to this application but I still haven’t found a package that supports a writable grid with pagination, sorting, filtering and column selection (similar to a Google Sheets UI) for a dynamic data model.

The next best thing was to find a UI library and write the supporting logic ourselves. We settled on Handsontable which is an impressive full featured spreadsheet-like Javascript library with a nice API, good documentation and an active community. The only issue was at that time there were no boiler plates or examples for interfacing a browser MVC to a server-side model, so we developed our own Javascript Model and Controller to manage the Handsontable View and talk to our custom Rails REST API. We would have liked to use a standard interface but restSQL and JSON/q appeared poorly supported or abandoned and PostgREST was great for direct DB querying but not well suited to our custom DAL. If we were building it again today GraphQL is the obvious choice.

As is often the case, the UI quickly became the most time consuming and costly component — it works nicely but it’s complex, difficult to extend and a headache to maintain. This makes it the number one contender for a rewrite with a modern Jamstack but after investing so much time and with a small dev team it’s hard to justify making that call.

Final Thoughts

It’s 2021 and there have been big developments across the landscape— from apps like Hasura that can dynamically build out a GraphQL database in Postgres, to type checking in Rails with sorbet, to full support for React, Angular and Vue from Handsontable. I’d love to hear your experiences with LIMS or databases supporting dynamic tables with multitenant data models.

Over at Whitebrick we’re currently working on a best-of-breed open source alternative to Airtable, so if you’re looking for a No-Code database or modern, flexible LIMS-as-a-Service please get in touch.

Posted with permission from the Author from: https://towardsdatascience.com/towards-a-modern-lims-dynamic-tables-no-code-databases-and-serverless-validations-8dea03416105

Illustration licensed from

Illustration licensed from