Posted on December 4, 2023

By LabLynx

Journal articles

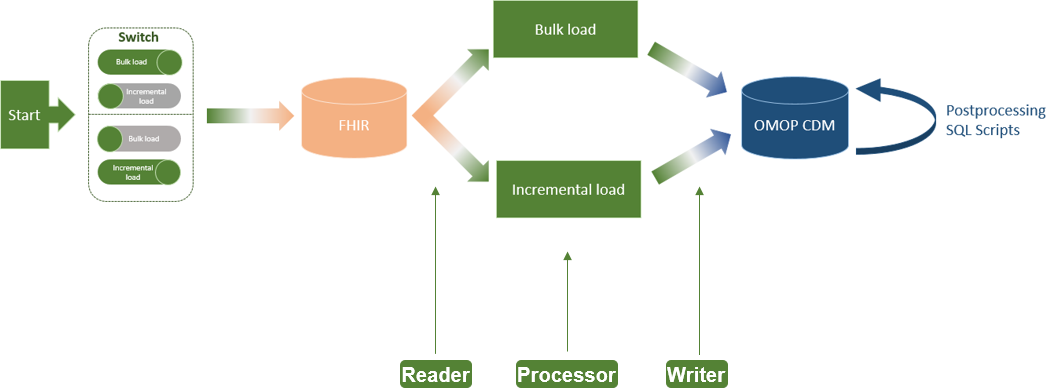

In this 2023 paper published in

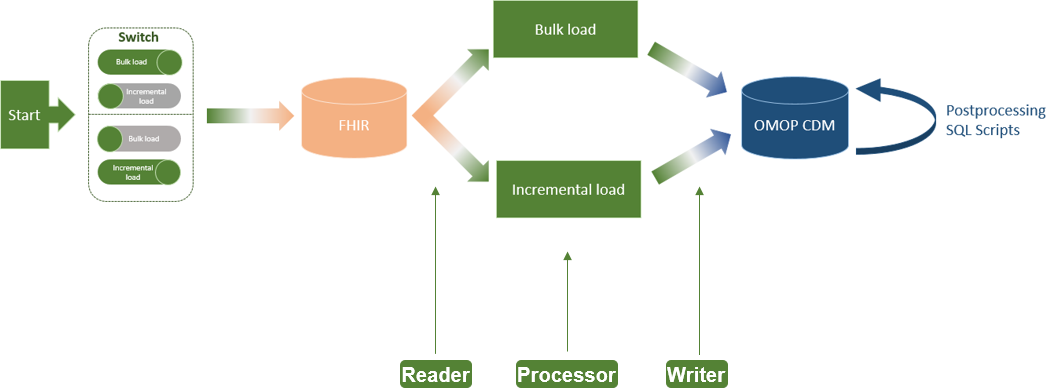

JMIR Medical Informatics, Henke

et al. of Technische Universität Dresden present the results of their effort to add "incremental loading" to the Medical Informatics in Research and Care in University Medicine's (MIRACUM's) clinical trial recruitment support systems (CTRSSs). Those CTRSSs already allows bulk loading of German-based FHIR data, supporting "the possibilities for multicentric and even international studies," but MIRACUM needed greater efficiencies when updating such data on a daily, incremental basis. The paper presents their literature review and approach to adding incremental loading to their systems. They conclude that the extract-transfer-load (ETL) "process no longer needs to be executed as a bulk load every day" with the change, instead being able to rely on "using bulk load for an initial load and switching to incremental load for daily updates." They add that this process has international applicability and is not limited to German FHIR data.
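The bulk-then-incremental pattern described above can be sketched in a few lines. This is a minimal illustration, not the MIRACUM ETL code: it assumes FHIR's `meta.lastUpdated` element serves as the change marker and uses a hypothetical in-memory dict as the target store. A bulk load fills the store once and records a "watermark"; each daily incremental run then writes only resources changed after that watermark.

```python
from datetime import datetime


def parse_instant(value):
    """Parse a FHIR instant such as '2023-05-01T08:00:00Z' into an aware datetime."""
    # fromisoformat() only accepts a trailing 'Z' from Python 3.11 on, so normalize it
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def resource_key(res):
    # A FHIR resource is identified by its type plus its logical id
    return (res["resourceType"], res["id"])


def bulk_load(resources, store):
    """Initial load: replace the (hypothetical) target store with every resource."""
    store.clear()
    for res in resources:
        store[resource_key(res)] = res
    # The newest lastUpdated value becomes the watermark for the next incremental run
    return max((parse_instant(r["meta"]["lastUpdated"]) for r in resources), default=None)


def incremental_load(resources, store, watermark):
    """Daily update: upsert only resources changed after the previous watermark."""
    new_watermark = watermark
    for res in resources:
        updated = parse_instant(res["meta"]["lastUpdated"])
        if watermark is None or updated > watermark:
            store[resource_key(res)] = res  # insert a new or overwrite a changed resource
            if new_watermark is None or updated > new_watermark:
                new_watermark = updated
    return new_watermark


if __name__ == "__main__":
    store = {}
    day1 = [
        {"resourceType": "Patient", "id": "p1", "meta": {"lastUpdated": "2023-05-01T08:00:00Z"}},
        {"resourceType": "Condition", "id": "c1", "meta": {"lastUpdated": "2023-05-01T09:00:00Z"}},
    ]
    wm = bulk_load(day1, store)
    day2 = day1 + [
        {"resourceType": "Condition", "id": "c2", "meta": {"lastUpdated": "2023-05-02T07:00:00Z"}},
    ]
    wm = incremental_load(day2, store, wm)  # only c2 is written
    print(len(store), wm.isoformat())
```

Here the filtering happens client-side for clarity; against a FHIR server, the same idea maps onto the `_lastUpdated` search parameter (e.g. a `gt` prefix). Deletions are not handled, and the strict `>` comparison assumes no later change shares the watermark timestamp; a production pipeline would need to account for both.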

Posted on November 28, 2023

By LabLynx

Journal articles

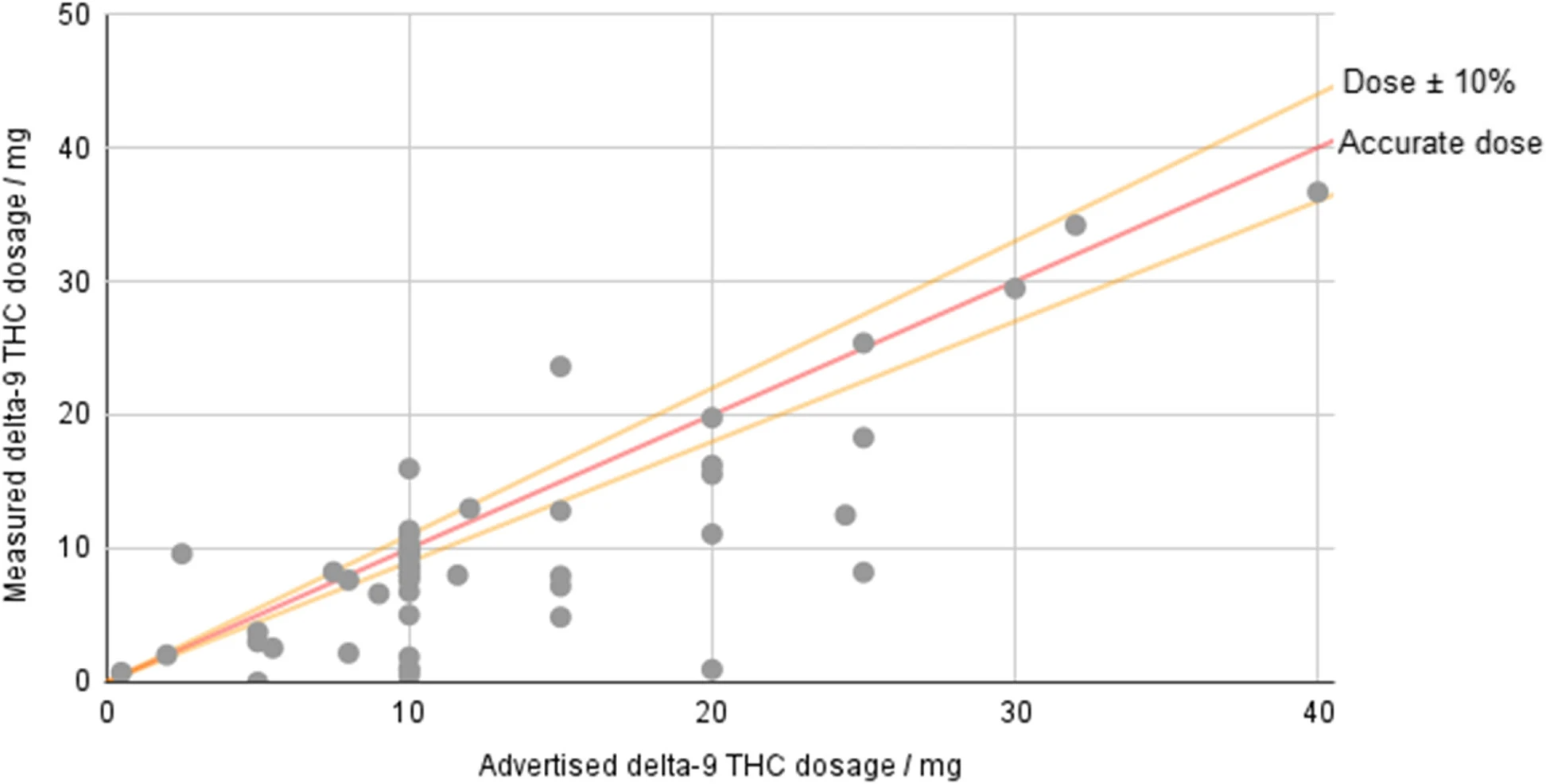

In this 2023 paper published in the

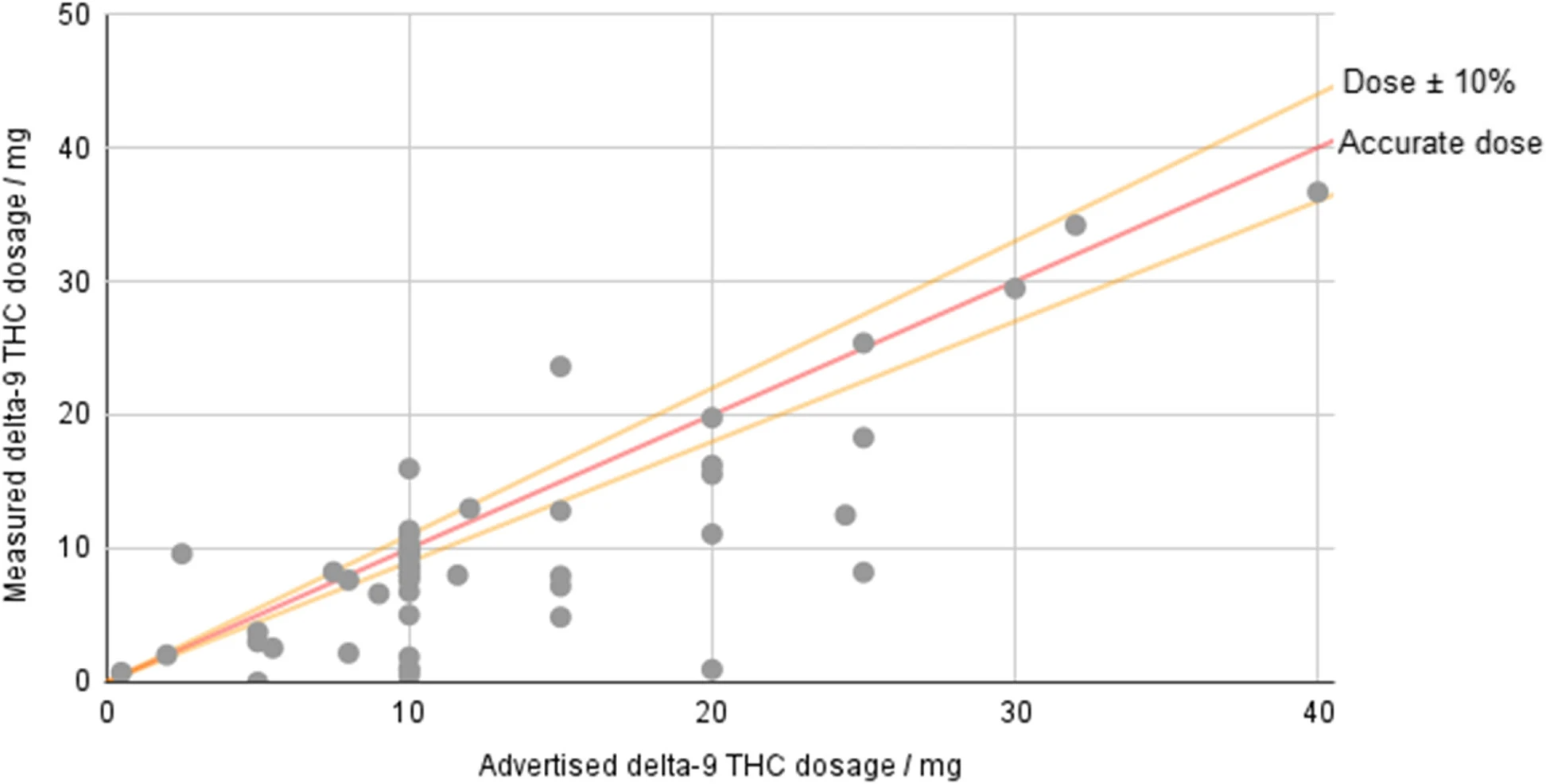

Journal of Cannabis Research, Johnson

et al. examine the current state of hemp-derived delta-9-tetrahydrocannabinol (Δ

9-THC) products on the U.S. market after the passage of the Agriculture Improvement Act of 2018. Noting discrepancies and loopholes in the legislation, the authors performed laboratory analyses on 53 hemp-derived Δ

9-THC products from 48 brands, while also examining aspects such as age verification, labeling consistency, and comparison of reported company values vs. analyzed values. After describing their methodology and results, the authors conclude that "the legal status of hemp-derived Δ

9-THC products in America essentially permits their open sale while placing very few requirements on the companies selling them." The end result includes finding, for example, products "that have 3.7 times the THC content of edibles in adult-use states," as well as inaccurately labeled products.
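Comparing a company's reported (label) value with a laboratory-measured value usually comes down to a percent deviation. The sketch below is illustrative only: it assumes potency expressed in milligrams and a hypothetical ±10% acceptance tolerance, neither of which comes from the paper.

```python
def label_deviation_pct(labeled_mg, measured_mg):
    """Percent by which the measured amount differs from the labeled amount."""
    if labeled_mg <= 0:
        raise ValueError("labeled amount must be positive")
    return (measured_mg - labeled_mg) * 100.0 / labeled_mg


def is_accurately_labeled(labeled_mg, measured_mg, tolerance_pct=10.0):
    # tolerance_pct is a hypothetical acceptance window, not a regulatory limit
    return abs(label_deviation_pct(labeled_mg, measured_mg)) <= tolerance_pct


if __name__ == "__main__":
    print(label_deviation_pct(10, 12))    # product contains more than labeled
    print(is_accurately_labeled(10, 12))  # outside the assumed +/-10% window
```

A positive deviation means the product contains more THC than its label states; a negative one, less.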

Posted on November 20, 2023

By LabLynx

Journal articles

"Artificial intelligence" (AI) may seem like a buzzword akin to the "nanotechnology" craze of the 2000s, but it is inevitably finding its way into scientific applications, including the materials sciences. In this December 2022 article published in npj Computational Materials, Sbailò et al. present their AI-driven Novel Materials Discovery (NOMAD) toolkit, an extension of their NOMAD Repository & Archive focused on making materials science data FAIR (findable, accessible, interoperable, and reusable) as well as AI-ready. After describing their workspace and their goal of adding notebook-based tools to the NOMAD Repository & Archive, the authors dive into the details of the NOMAD AI Toolkit. They close by noting that their toolkit "offers a selection of notebooks demonstrating such [AI-based] workflows, so that users can understand step by step what was done in publications and readily modify and adapt the workflows to their own needs." They add that the system "will allow for enhanced reproducibility of data-driven materials science papers and dampen the learning curve for newcomers to the field."

Posted on November 13, 2023

By LabLynx

Journal articles

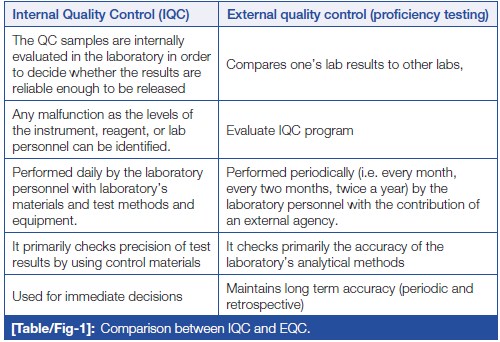

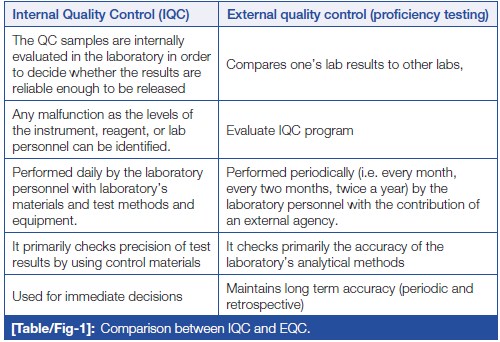

In this 2023 article published in the Journal of Clinical and Diagnostic Research, Naphade et al. provide a brief, introductory-level review of the importance of quality control (QC) to the clinical laboratory. After introducing clinical lab testing, the authors analyze the wide variety of sources of laboratory error across the pre-analytical, analytical, and post-analytical phases. They then introduce the concept of QC and explain how it is implemented in the laboratory, including through QC materials, statistical control charts, and the monitoring of shifts and trends. They conclude their review by stating that "reliable and confident laboratory testing avoids misdiagnosis, delayed treatment, and unnecessary costing of repeat testing." They add that given these benefits, "the individual laboratory should assess and analyze their own QC process to find out the possible root cause of any digressive test results which are not correlating with patients' clinical presentation or expected response to treatment."
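Control-chart rules of the kind the review describes can be expressed as checks on z-scores against an established mean and standard deviation (SD). The sketch below uses common Westgard-style conventions: a 1-2s warning, a 1-3s rejection, a shift as 10 consecutive results on one side of the mean, and a trend as 6 consecutive rising or falling results. These thresholds are assumptions for illustration, not criteria taken from the paper; each laboratory sets its own.

```python
from statistics import mean, stdev


def establish_limits(baseline):
    """Target mean and SD from a baseline run of QC results (needs at least 2 values)."""
    return mean(baseline), stdev(baseline)


def evaluate_qc(values, target_mean, target_sd, shift_len=10, trend_len=6):
    """Return (index, rule) flags for a series of QC results on one control level."""
    z = [(v - target_mean) / target_sd for v in values]
    flags = []

    # Control limits: beyond 3 SD rejects the run, beyond 2 SD is a warning
    for i, zi in enumerate(z):
        if abs(zi) > 3:
            flags.append((i, "1-3s reject"))
        elif abs(zi) > 2:
            flags.append((i, "1-2s warning"))

    # Shift: shift_len consecutive results on the same side of the mean
    run_side, run_len = 0, 0
    for i, zi in enumerate(z):
        side = (zi > 0) - (zi < 0)  # +1 above, -1 below, 0 exactly on the mean
        if side != 0 and side == run_side:
            run_len += 1
        else:
            run_side, run_len = side, (1 if side != 0 else 0)
        if run_len == shift_len:
            flags.append((i, "shift"))

    # Trend: trend_len consecutive results, each higher (or each lower) than the last
    up = down = 1
    for i in range(1, len(values)):
        up = up + 1 if values[i] > values[i - 1] else 1
        down = down + 1 if values[i] < values[i - 1] else 1
        if up == trend_len or down == trend_len:
            flags.append((i, "trend"))
    return flags


if __name__ == "__main__":
    target_mean, target_sd = establish_limits([98, 100, 102])
    print(evaluate_qc([100, 100.5, 101, 101.5, 102, 102.5], target_mean, target_sd))
```

Each rule is flagged once, at the result where it is first met, so a reviewer can trace a flag back to the specific run that triggered it.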

Posted on November 7, 2023

By LabLynx

Journal articles

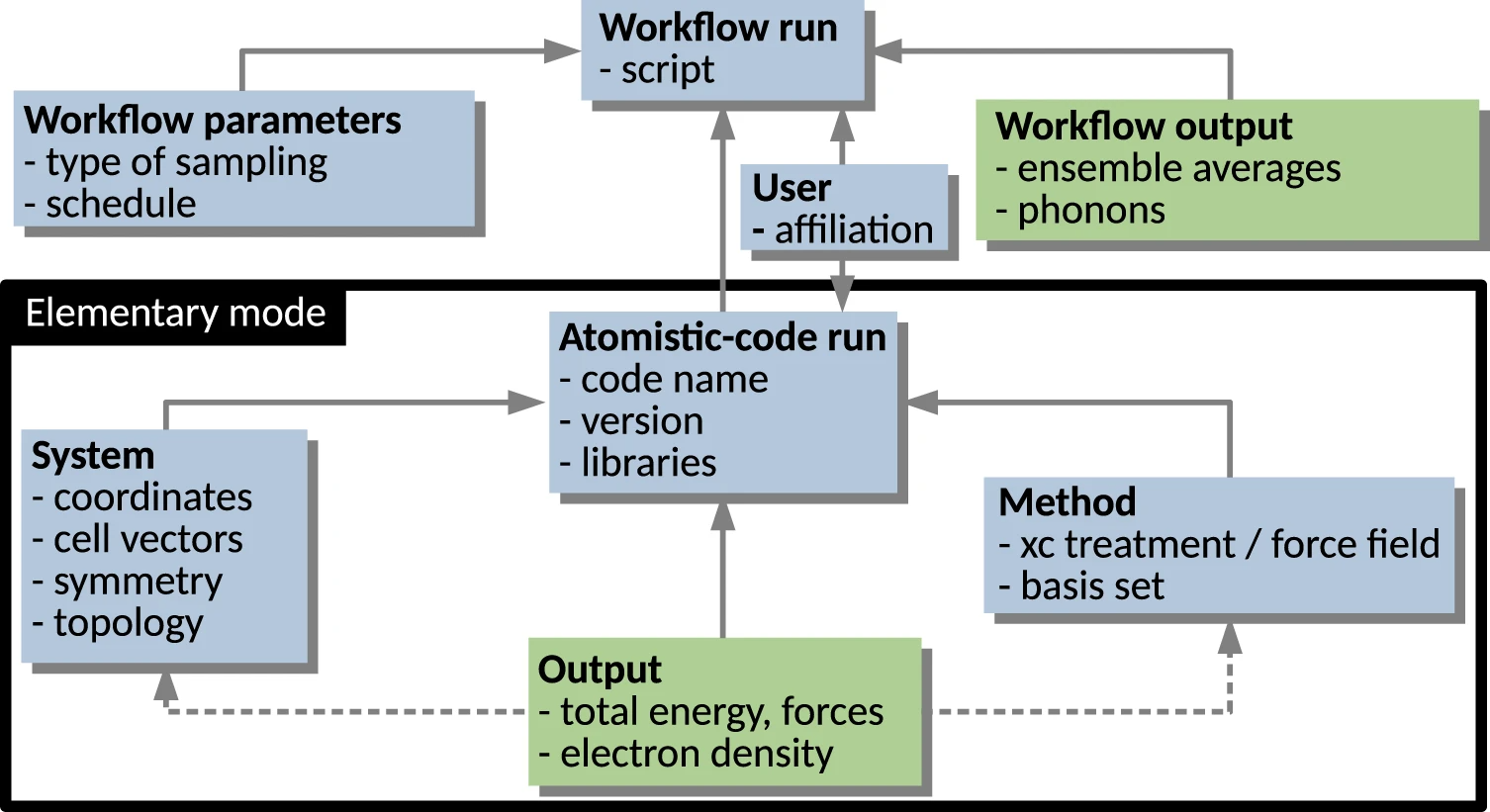

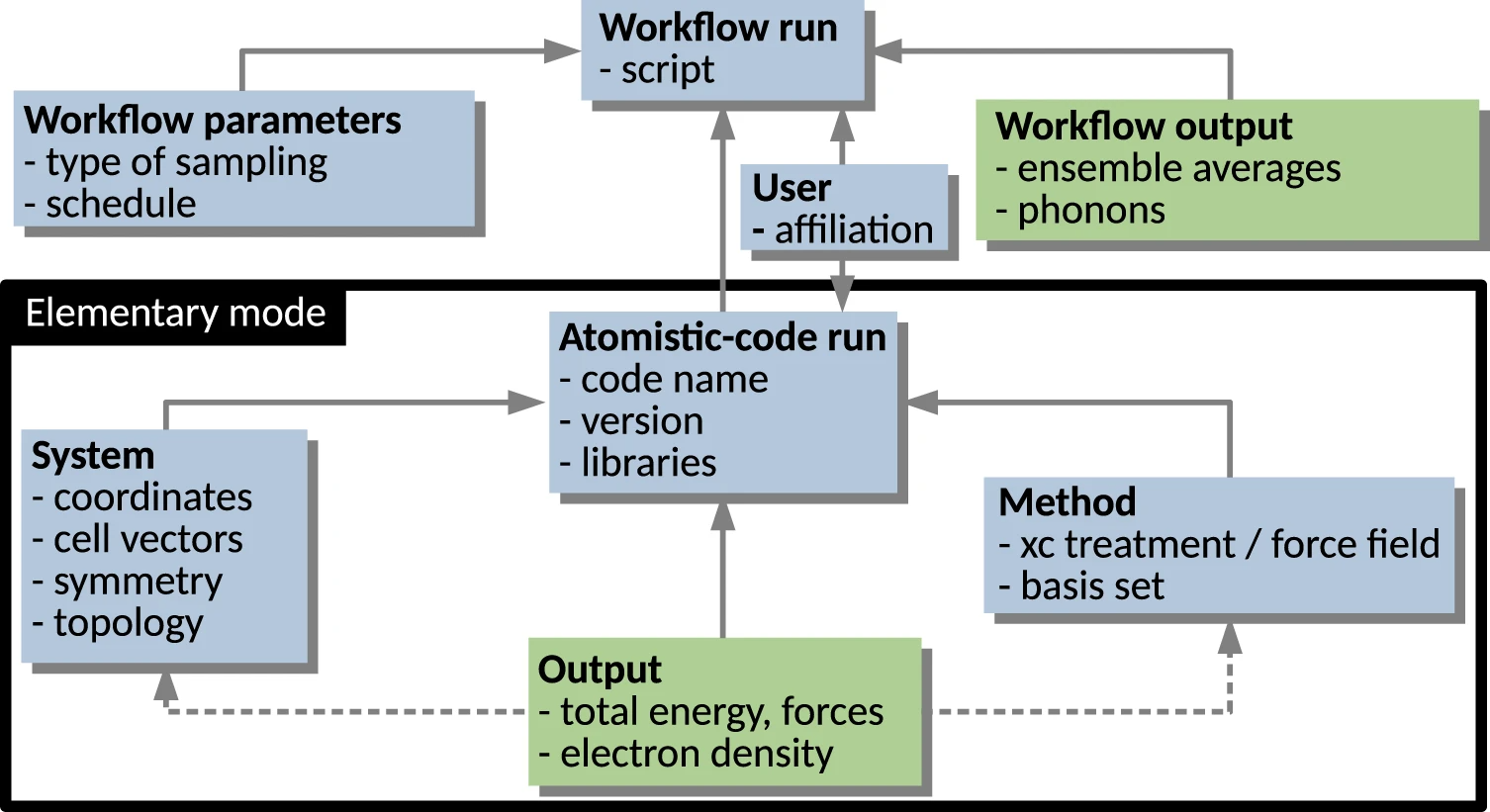

In this 2023 paper published in the journal Scientific Data, Ghiringhelli et al. present the results of an international workshop at which materials scientists came together to discuss metadata and data formats as they relate to data sharing in materials science. With a focus on the FAIR principles (findable, accessible, interoperable, and reusable), the authors introduce the data management needs of materials science and the value of metadata in making shareable data FAIR. They then go into specific use cases, such as electronic-structure calculations, potential-energy sampling, and managing metadata for computational workflows. They also address the nuances of file formats and the metadata schemas associated with them. They close with an outlook on the use of ontologies in materials science, followed by conclusions that heavily emphasize "the importance of developing a hierarchical and modular metadata schema in order to represent the complexity of materials science data and allow for access, reproduction, and repurposing of data, from single-structure calculations to complex workflows." They add: "the biggest benefit of meeting the interoperability challenge will be to allow for routine comparisons between computational evaluations and experimental observations."
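To make "hierarchical and modular" more concrete, the sketch below composes small, reusable metadata sections (system, method, calculation) into a workflow, so that a single-structure calculation and a multi-step workflow share the same building blocks. All class and field names are hypothetical; they are not taken from NOMAD or from any schema the authors propose.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class System:
    """What was simulated (hypothetical fields)."""
    chemical_formula: str
    n_atoms: int


@dataclass
class Method:
    """How it was simulated; optional fields keep the module reusable across methods."""
    program_name: str
    xc_functional: Optional[str] = None  # only meaningful for DFT-type calculations


@dataclass
class Calculation:
    """A single-structure calculation composed from the modules above."""
    system: System
    method: Method
    total_energy_eV: Optional[float] = None


@dataclass
class Workflow:
    """The next level of the hierarchy: an ordered list of calculations."""
    name: str
    steps: List[Calculation] = field(default_factory=list)


def to_record(workflow):
    # asdict() recurses through nested dataclasses and lists, producing a plain
    # dict that mirrors the hierarchy and can be serialized (e.g. to JSON)
    return asdict(workflow)


if __name__ == "__main__":
    relax = Calculation(System("Si2", 2), Method("example-dft-code", "PBE"))
    print(to_record(Workflow("relax-then-bands", [relax])))
```

Because each section is its own type, a new method or a new workflow step can be added without changing the others, which is the practical payoff of a modular schema.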

Posted on October 31, 2023

By Shawn Douglas

Journal articles

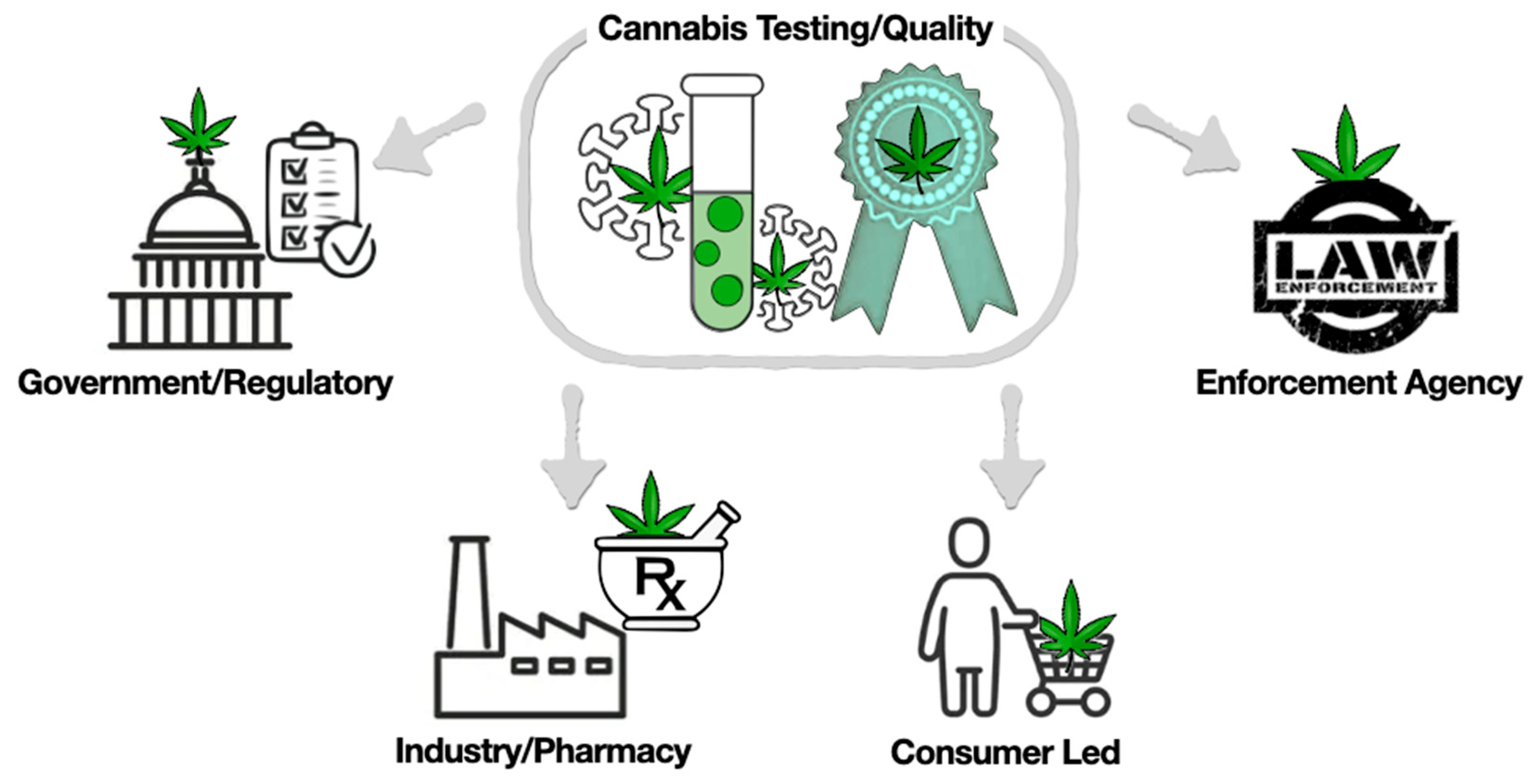

In this 2023 journal article published in the International Journal of Molecular Sciences, Jadhav et al. recommend a more comprehensive approach to analyzing the Cannabis plant, its extracts, and its constituents. They turn to authentomics, a still-evolving concept from the food industry "which involves combining the non-targeted analysis of new samples with big data comparisons to authenticated historic datasets," as a paradigm worth shifting towards. After an introduction to metabolomics and a review of its various technologies, the authors examine the current state of cannabis laboratory testing, how it is regulated and standardized, a variety of issues that make cannabis testing less consistent, and how cannabis can become adulterated in the grow chain. They then discuss authentomics and apply it to the cannabis industry, as well as address what considerations need to be made to make the most of authentomics in the future. The authors conclude that an authentomics approach "provides a robust method for verifying the quality of cannabis products."

Posted on October 24, 2023

By LabLynx

Journal articles

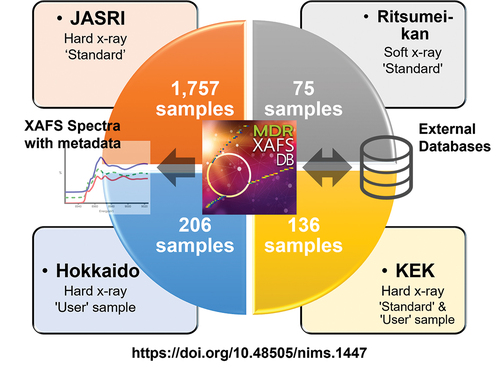

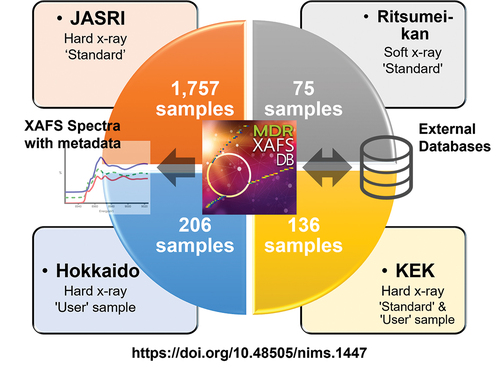

In this 2023 article published in Science and Technology of Advanced Materials: Methods, Ishii et al. describe their cross-institutional effort to integrate X-ray absorption fine structure (XAFS) spectra and associated metadata across multiple databases, with the aim of improving materials informatics and research methods for materials discovery and analysis. Their new public database, MDR XAFS DB, was developed towards this goal while addressing two main issues with unifying such data: the difficulties of "designing and collecting metadata describing spectra and sample details, and unifying the vocabulary used in the metadata, including not only metadata items (keys) but also descriptions (values)." After discussing the system's construction and contents, the authors conclude their system "has achieved seamless cross searchability with the use of sample nomenclature so that database users do not have to be aware of the differences in the local metadata of the facilities that provide the data." Acknowledging that challenges remain and that a culture of open data has not yet truly taken hold in materials science, the authors express hope "that this initiative will be a trigger to promote the utilization of materials data."
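The paper's point about unifying both metadata keys and their values can be illustrated with a small normalization layer: each facility's local record is translated into a shared vocabulary, and searches run only against the shared terms. The facility names, keys, and vocabulary entries below are hypothetical and do not reflect MDR XAFS DB's actual schema.

```python
# Hypothetical per-facility mappings from local metadata keys to shared keys
KEY_MAP = {
    "facility_a": {"edge": "absorption_edge", "sample": "sample_name"},
    "facility_b": {"Edge": "absorption_edge", "SampleName": "sample_name"},
}

# Hypothetical controlled vocabulary: local spellings -> one shared value per key
VALUE_MAP = {
    "absorption_edge": {"Cu-K": "Cu K", "CuK": "Cu K"},
    "sample_name": {"copper foil": "Cu foil", "Cu metal foil": "Cu foil"},
}


def normalize(record, facility):
    """Translate one facility's metadata record into the shared vocabulary."""
    key_map = KEY_MAP[facility]
    out = {}
    for local_key, value in record.items():
        key = key_map.get(local_key, local_key)  # unmapped keys pass through unchanged
        if isinstance(value, str):
            # Unify values, not just keys; unknown values pass through unchanged
            value = VALUE_MAP.get(key, {}).get(value, value)
        out[key] = value
    return out


def search(records, **criteria):
    """Return records whose shared-vocabulary fields match every criterion."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


if __name__ == "__main__":
    records = [
        normalize({"edge": "Cu-K", "sample": "copper foil"}, "facility_a"),
        normalize({"Edge": "CuK", "SampleName": "Cu metal foil"}, "facility_b"),
    ]
    print(search(records, absorption_edge="Cu K", sample_name="Cu foil"))
```

The user queries with one set of terms and never needs to know how each facility spells them, which is the cross-searchability the authors describe.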

Posted on October 17, 2023

By LabLynx

Journal articles

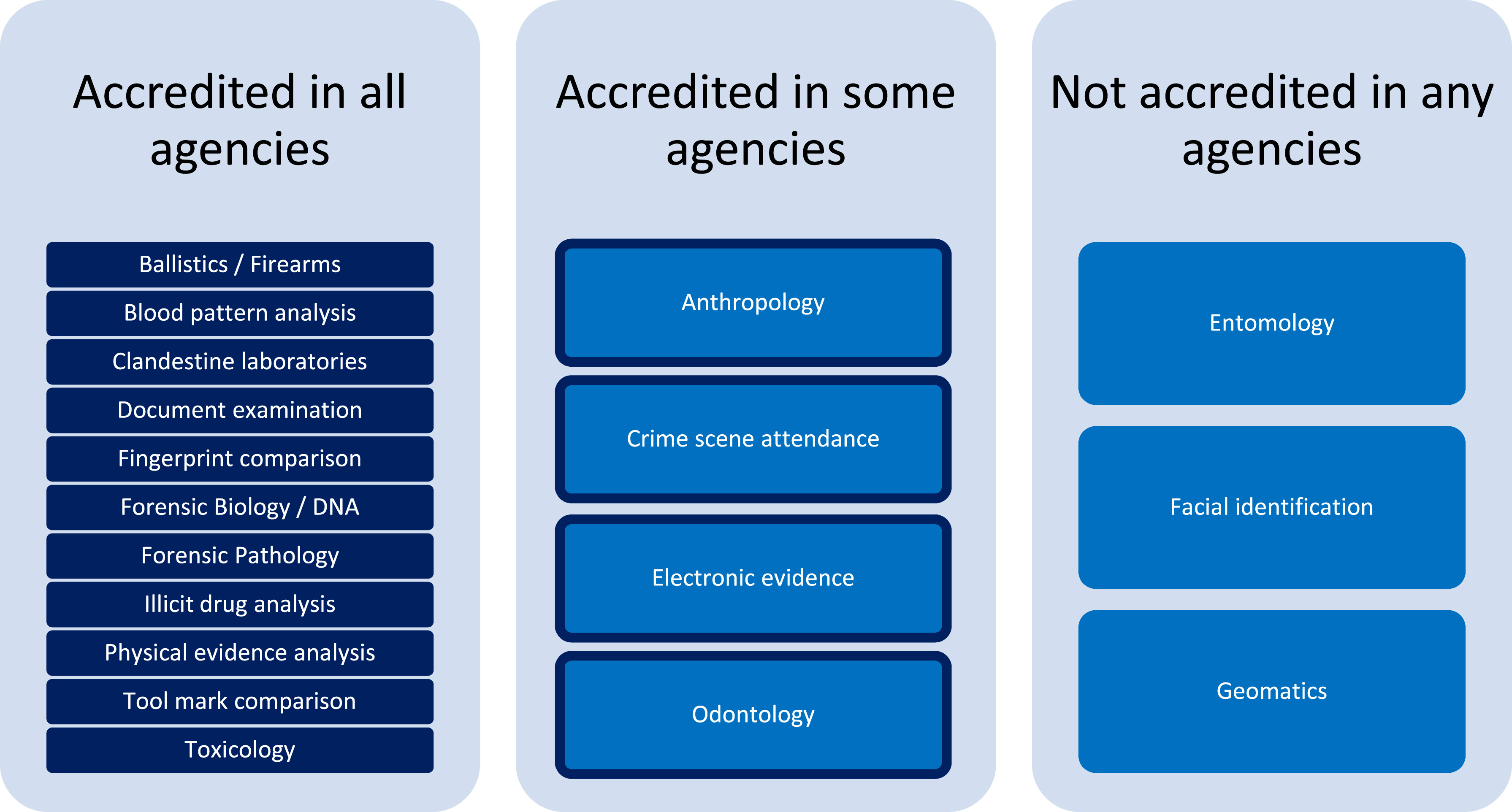

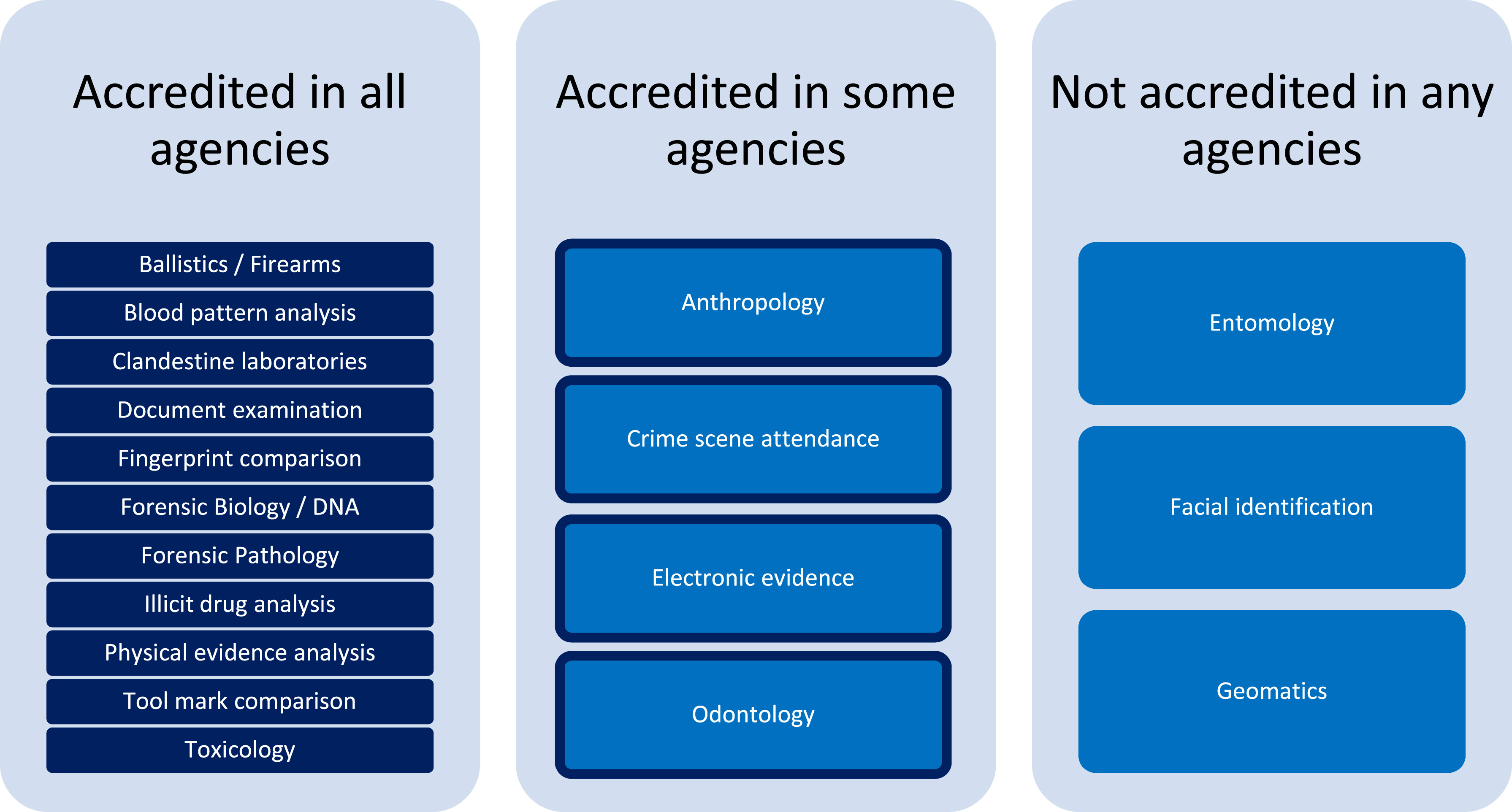

Forensic laboratories must keep quality foremost in mind in nearly everything they do. In fact, standards like ISO/IEC 17025 help such labs focus on quality through a quality management system (QMS). But even with a strong focus on quality, inconsistencies in how quality issues are recorded, managed, and reported can be problematic. To that point, Heavey et al. examine government-based forensic service provider agencies in Australia and New Zealand in this 2023 journal article, using a survey to gain insight into potential QMS weaknesses. After thoroughly reviewing the methodology and results of their survey, the authors conclude that their research makes evident "the need for further research into the standardization of systems underpinning the management of quality issues in forensic science," with a greater need "to support transparent and reliable justice outcomes" through improving "evidence-based standard terminology for quality issues in forensic science to support data sharing and reporting, enhance understanding of quality issues, and promote transparency in forensic science."

Posted on October 9, 2023

By LabLynx

Journal articles

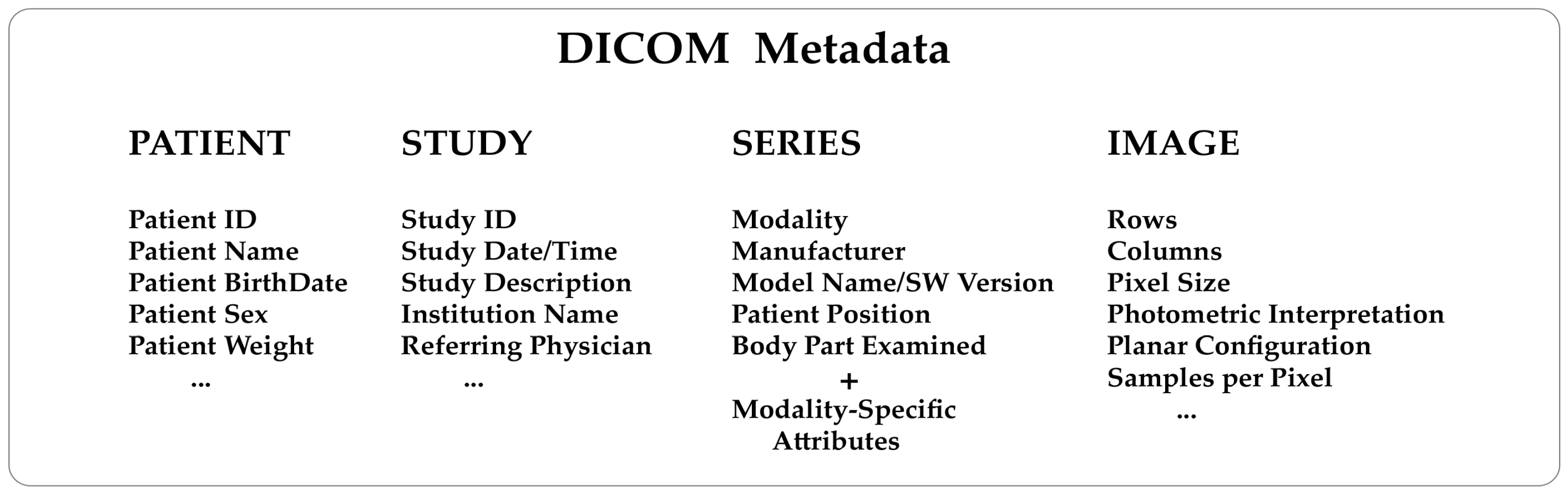

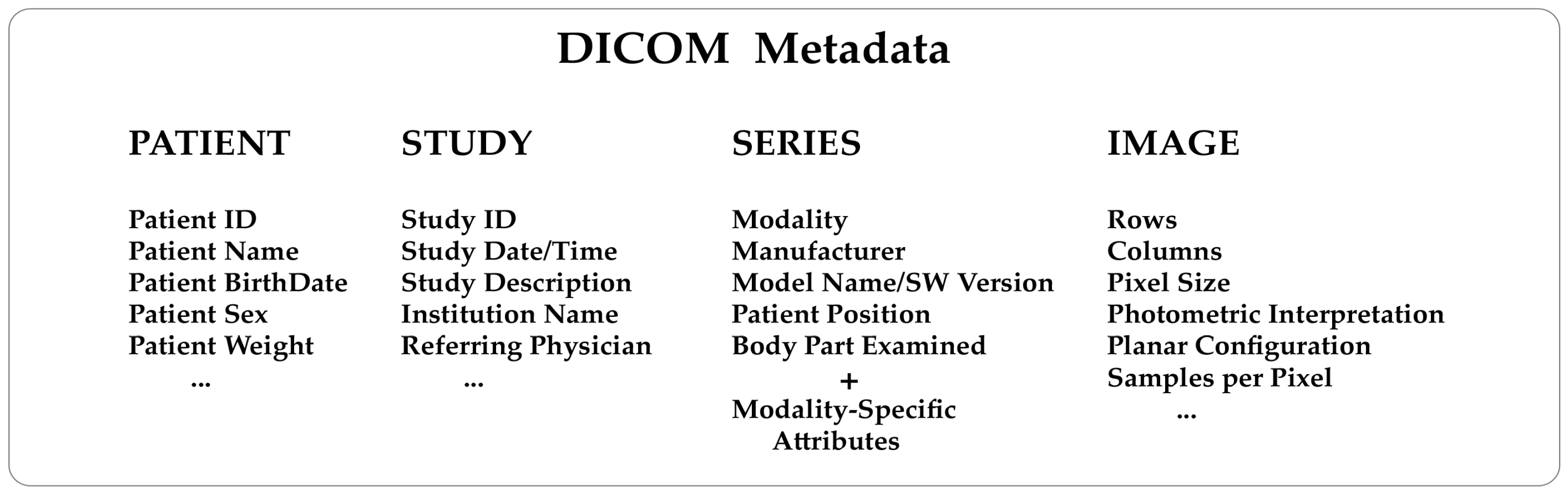

In this brief 2023 article published in the journal Tomography, Michele Larobina of Consiglio Nazionale delle Ricerche provides background on "the innovation, advantages, and limitations of adopting DICOM and its possible future directions." After an introduction to the Digital Imaging and Communications in Medicine (DICOM) standard, Larobina emphasizes the benefits of the standard's approach to handling image metadata, followed by its data exchange component. Larobina then examines the strengths and weaknesses of DICOM before concluding that it has not only demonstrated "the positive influence and added value that a standard can have in a specific field," but also "encouraged and facilitated data exchange between researchers, creating added value for research." Despite this, "the time is ripe to review some of the initial directives" of DICOM, says Larobina, if the standard is to remain relevant going forward.

Posted on October 2, 2023

By Shawn Douglas

Journal articles

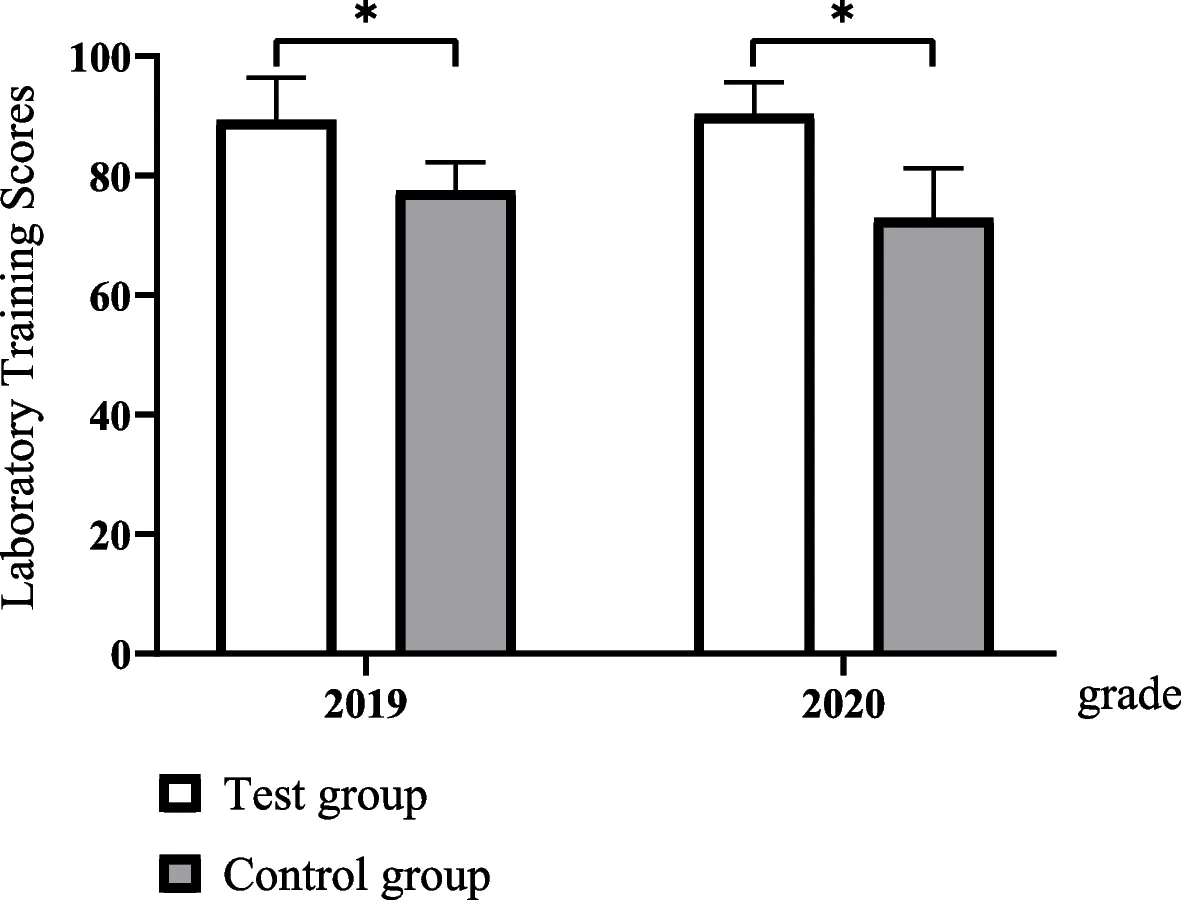

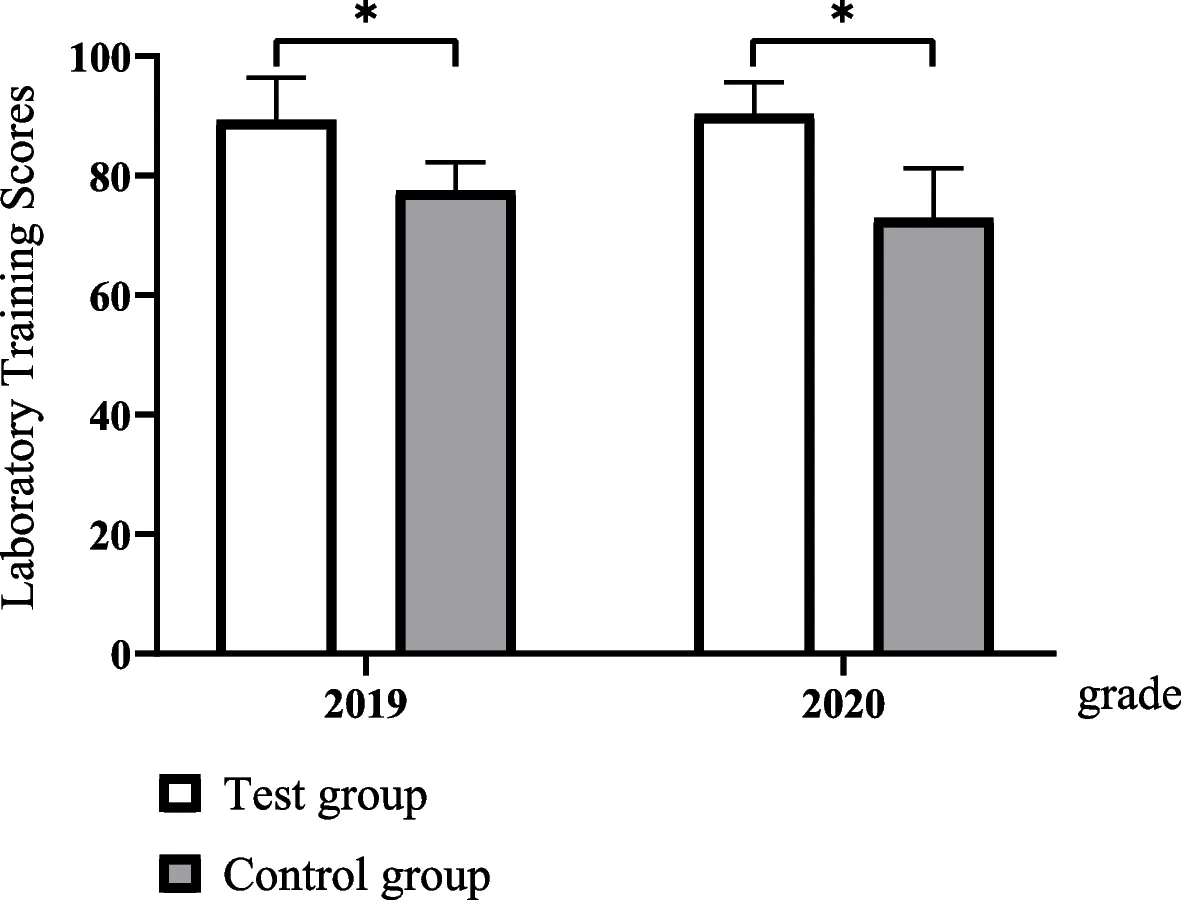

In this article published in BMC Medical Education, Xu et al. present their four-stage clinical biochemistry teaching methodology, which incorporates elements of the International Organization for Standardization's ISO 15189 standard. Noting that students lack awareness of ISO 15189 Medical laboratories — Requirements for quality and competence and of the value its emphasis on quality management brings to laboratories, the authors set out to incorporate the standard into a case-based learning (CBL) approach. After describing the details of their approach and a survey-based analysis of its outcomes, the authors conclude that beyond improving student outcomes in clinical biochemistry, "ISO 15189 concepts of continuous improvement were implanted, thereby putting the concept of plan–do–check–act (PDCA) into practice, developing recording habits, and improving communication skills."

Posted on September 26, 2023

By Shawn Douglas

Journal articles

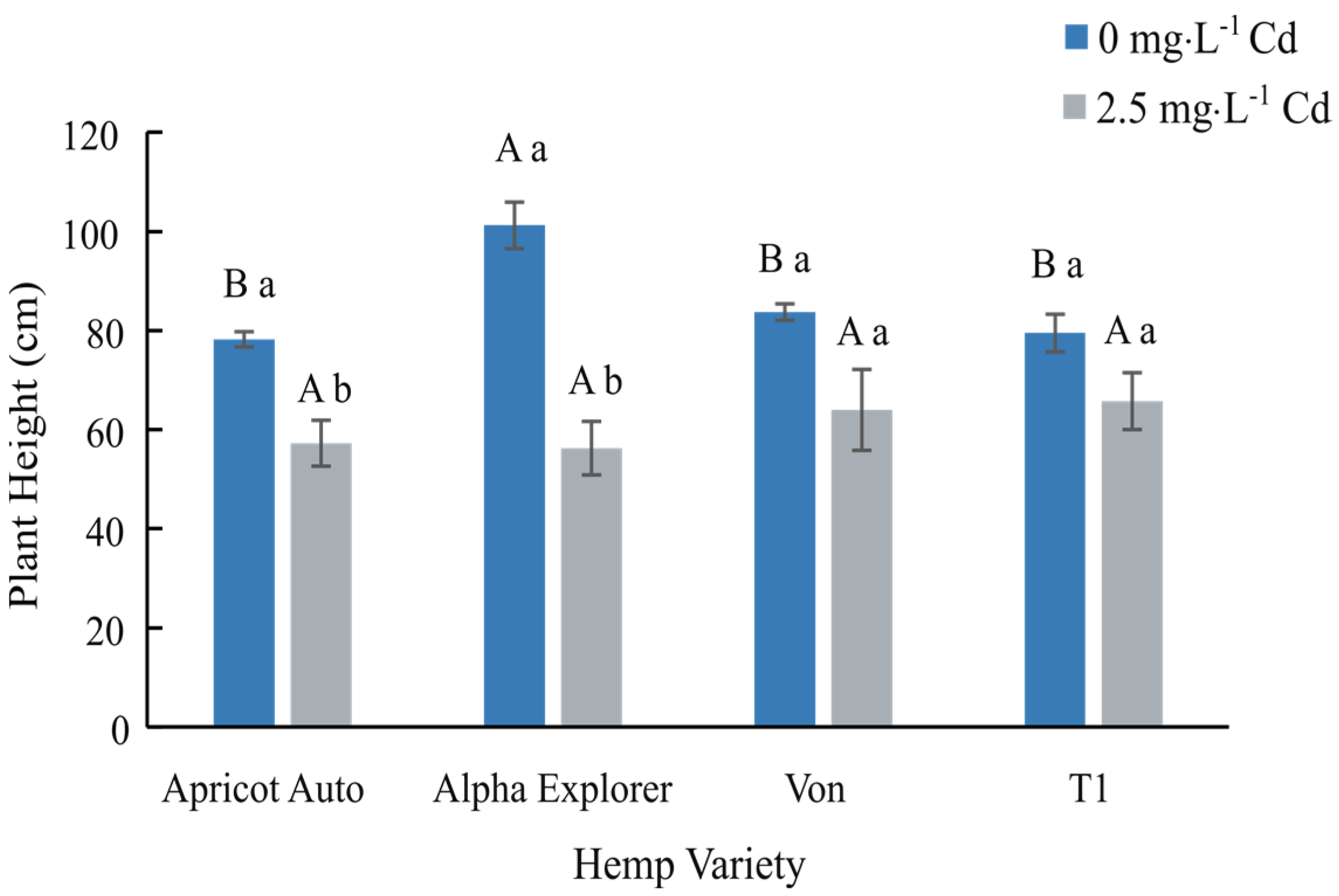

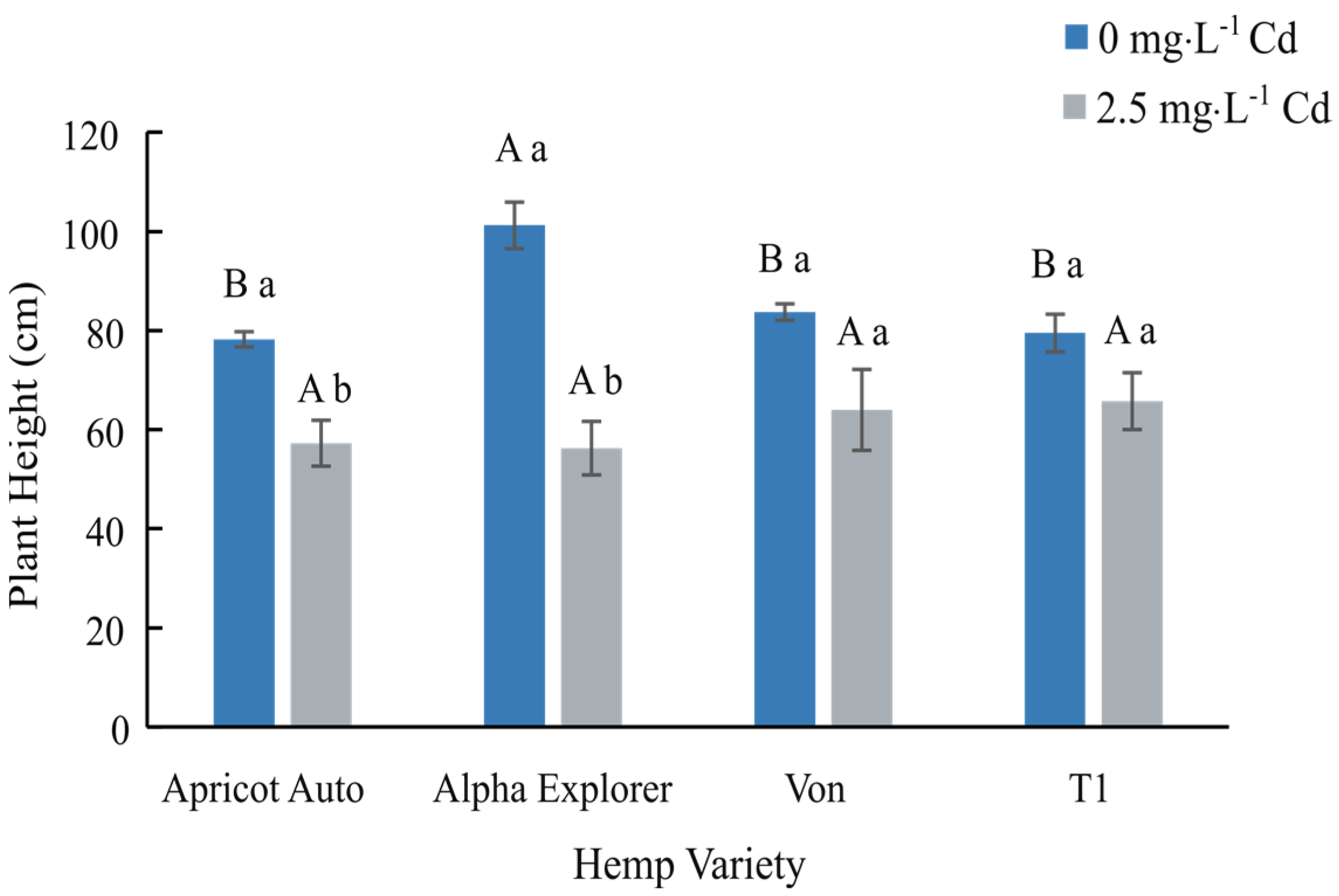

In this 2023 article published in the journal Water, Marebesi et al. of the University of Georgia present their findings on phytoremediation of cadmium (Cd)-contaminated soils using day-neutral and photoperiod-sensitive hemp plants. After a thorough introduction on using Cannabis plants for phytoremediation (including the flowers), the authors present their materials and methods, focusing on the Apricot Auto, Alpha Explorer, Von, and T1 varieties of hemp. They then present the results of their experiments, addressing plant height, biomass yield, Cd concentrations in various plant tissues, nutrient partitioning, and THC/CBD concentrations. They conclude that "while Cd concentration was significantly higher in roots, all four varieties were efficient in translocating Cd from roots to shoots." They also conclude that remediation affected THC/CBD loss in some cases, as well as plant height and biomass. Finally, they highlight the importance of testing flowers for Cd where the plant is used in medical markets.

Posted on September 18, 2023

By LabLynx

Journal articles

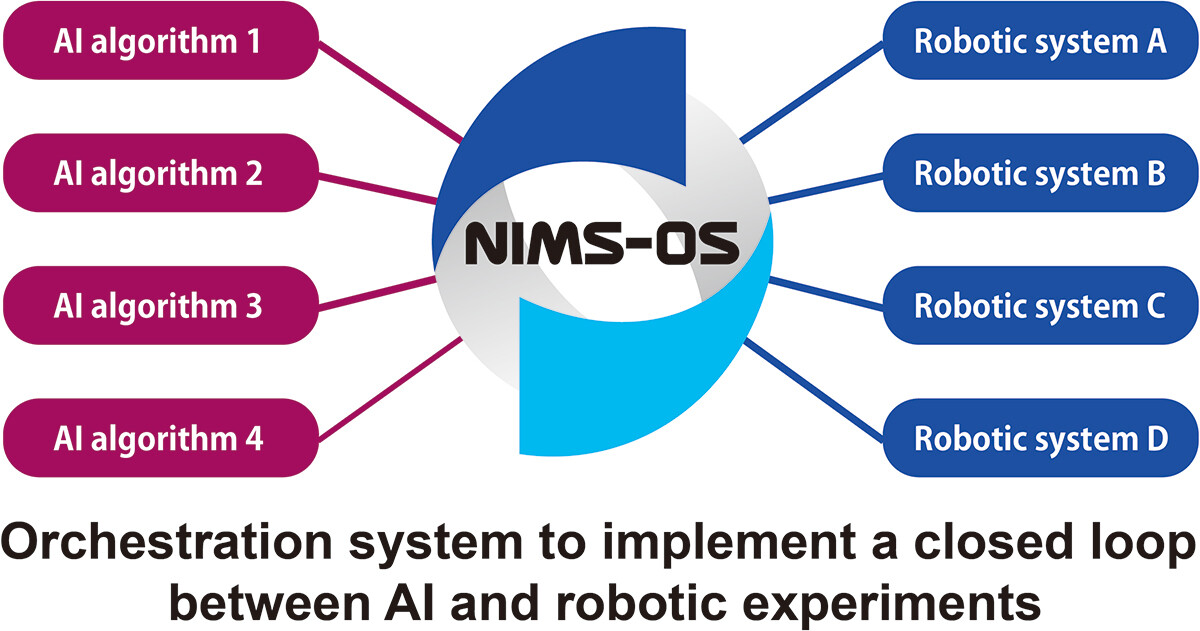

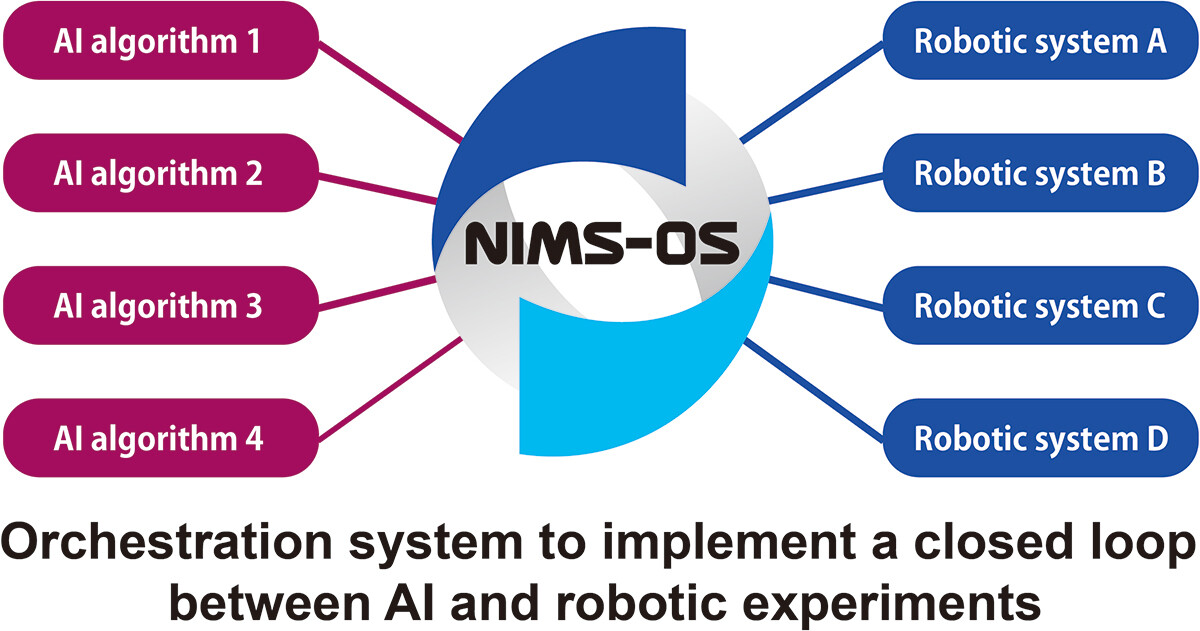

As technological, cultural, and environmental shifts continue to put pressure on how academic and private research is conducted, researchers must strive to get the most out of their work. This holds true across a broad swath of scientific fields, including materials science. Noting the continuing expansion of laboratory automation and software-based tools like artificial intelligence (AI) and machine learning (ML), Tamura et al. emphasize that materials science and novel materials research can benefit greatly from adopting these tools. In this 2023 paper, the authors present their take on this trend in the form of NIMS-OS, a Python-based system developed to run fully automated materials discovery experiments. After an introduction to the topic, the authors discuss the development philosophy and approach behind NIMS-OS, while also describing how to use both its Python command-line tool and its graphical user interface (GUI)-driven version. The authors conclude that their system allows "various problems for automated materials exploration to be commonly performed," while at the same time being "designed to be easily adaptable to a variety of original robotic systems in materials science."
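The closed loop at the heart of automated materials exploration (an AI module proposes the next candidate, a robot measures it, and the result feeds back into the next proposal) can be sketched with pluggable selector and experiment functions. This is a generic illustration, not NIMS-OS's API: `simulated_experiment` is a hypothetical stand-in for a robotic measurement, and the greedy neighbor selector is a deliberately simple placeholder for the optimization algorithms a real system would use.

```python
import random


def closed_loop(candidates, select, run_experiment, n_cycles):
    """Propose, measure, and record, repeated for n_cycles or until candidates run out."""
    results = {}
    for _ in range(n_cycles):
        untested = [c for c in candidates if c not in results]
        if not untested:
            break
        choice = select(untested, results)        # "AI" module picks the next candidate
        results[choice] = run_experiment(choice)  # "robot" module measures it
    return results


def random_selector(seed=0):
    """Pure random exploration, a common baseline policy."""
    rng = random.Random(seed)
    return lambda untested, results: rng.choice(untested)


def greedy_neighbor_selector(untested, results):
    """Toy exploitation policy: test the untested candidate closest to the best so far."""
    if not results:
        return untested[0]
    best = max(results, key=results.get)
    return min(untested, key=lambda c: abs(c - best))


def simulated_experiment(x):
    # Hypothetical stand-in for a robotic measurement; the optimum is at x = 0.3
    return -(x - 0.3) ** 2


if __name__ == "__main__":
    compositions = [i / 10 for i in range(11)]
    results = closed_loop(compositions, greedy_neighbor_selector, simulated_experiment, 5)
    print(max(results, key=results.get))
```

Keeping the selector and the experiment as interchangeable functions mirrors, in miniature, the idea of swapping AI algorithms and robotic systems without rewriting the loop itself.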

Posted on September 12, 2023

By LabLynx

Journal articles

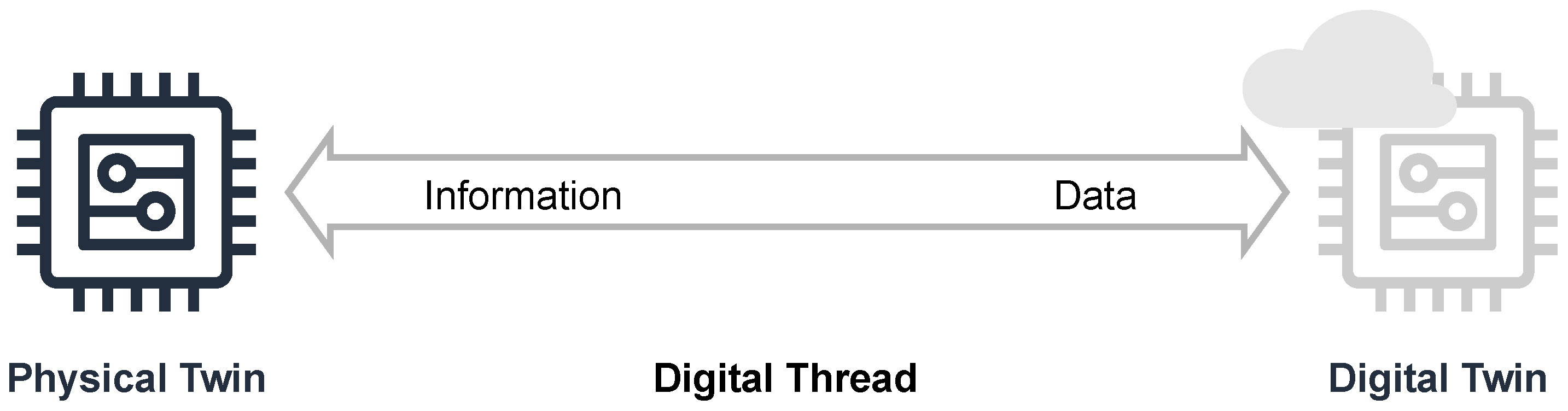

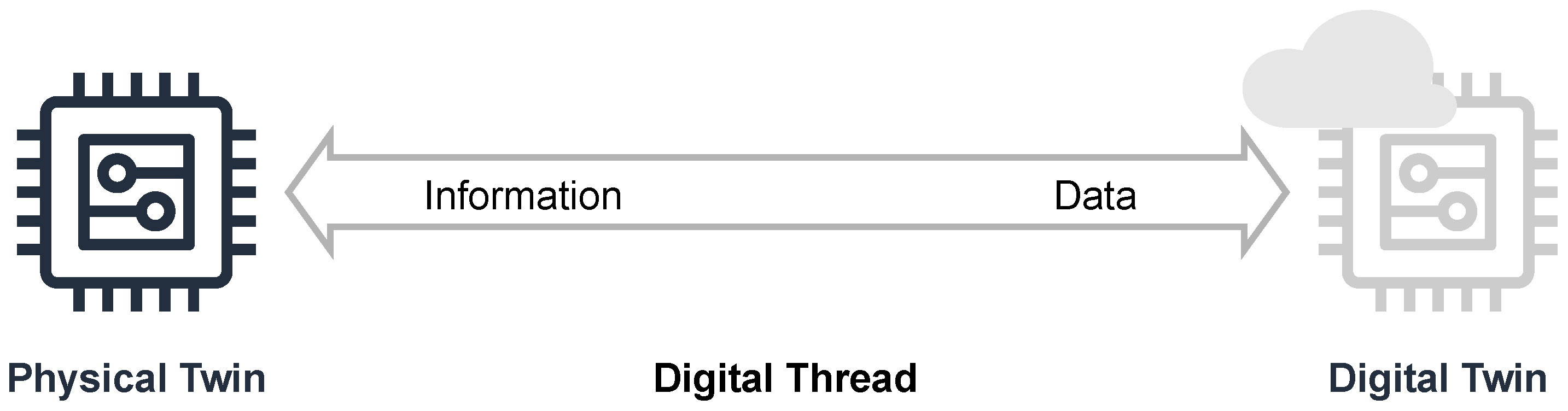

In this 2023 article published in the journal Sensors, Lehmann et al. of the Mannheim University of Applied Sciences present their efforts towards a research data management (RDM) system for their Center for Mass Spectrometry and Optical Spectroscopy (CeMOS). The proof-of-concept RDM system leans on digital twin (DT) technology to answer the challenges of managing data from multiple research instruments at their institute, while also making data more findable, accessible, interoperable, and reusable (FAIR). After introducing the topic and reviewing related work, the authors lay out their conceptual architecture and discuss use cases within the context of their spectrometry and spectroscopy work. They then propose an implementation, build a proof of concept, and evaluate that proof of concept within the implementation's framework. After discussing their results, the authors conclude that their efforts contribute to the literature in five ways: addressing RDM within FAIR, highlighting the value of DTs, developing a top-level knowledge graph, elaborating on reactive vs. proactive DTs, and providing a practical approach to DTs in the RDM of larger research institutes. They also note caveats and outline future work that still needs to be done.

Posted on September 5, 2023

By LabLynx

Journal articles

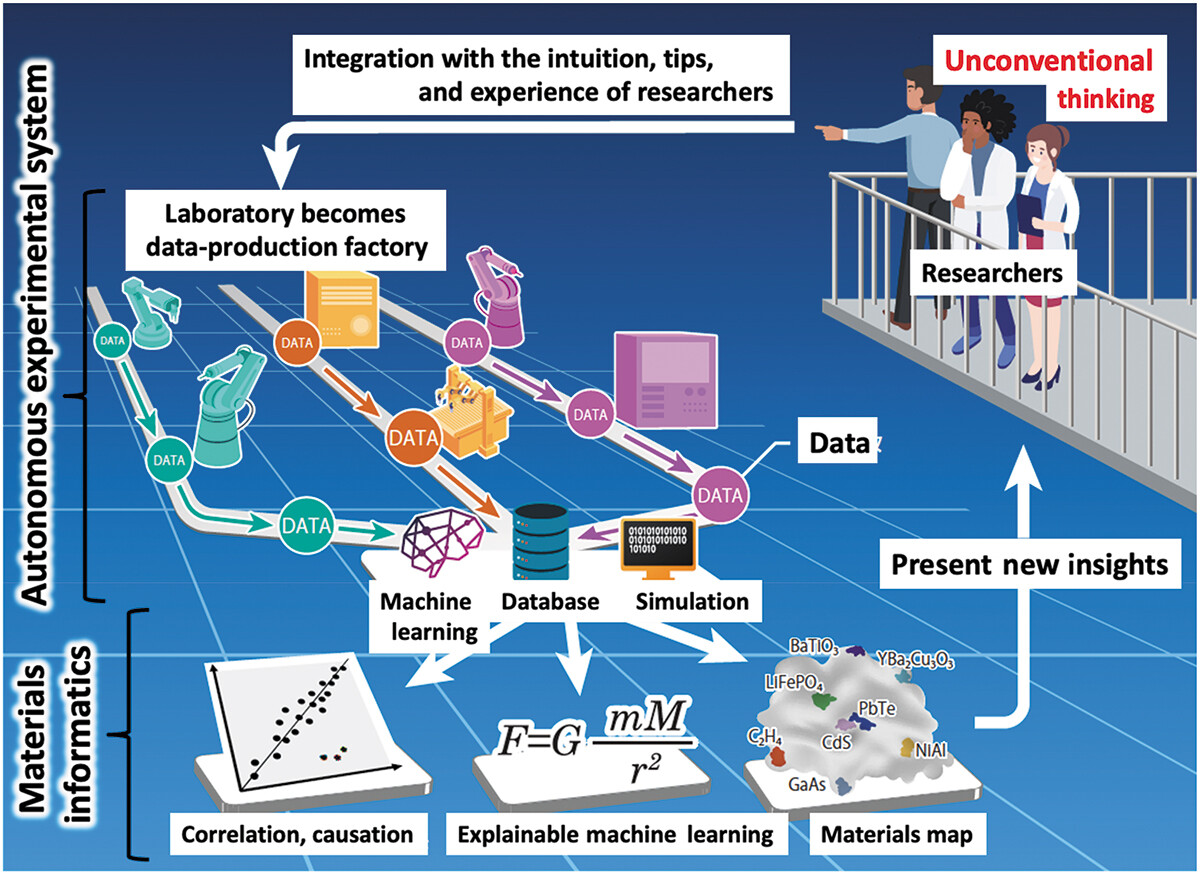

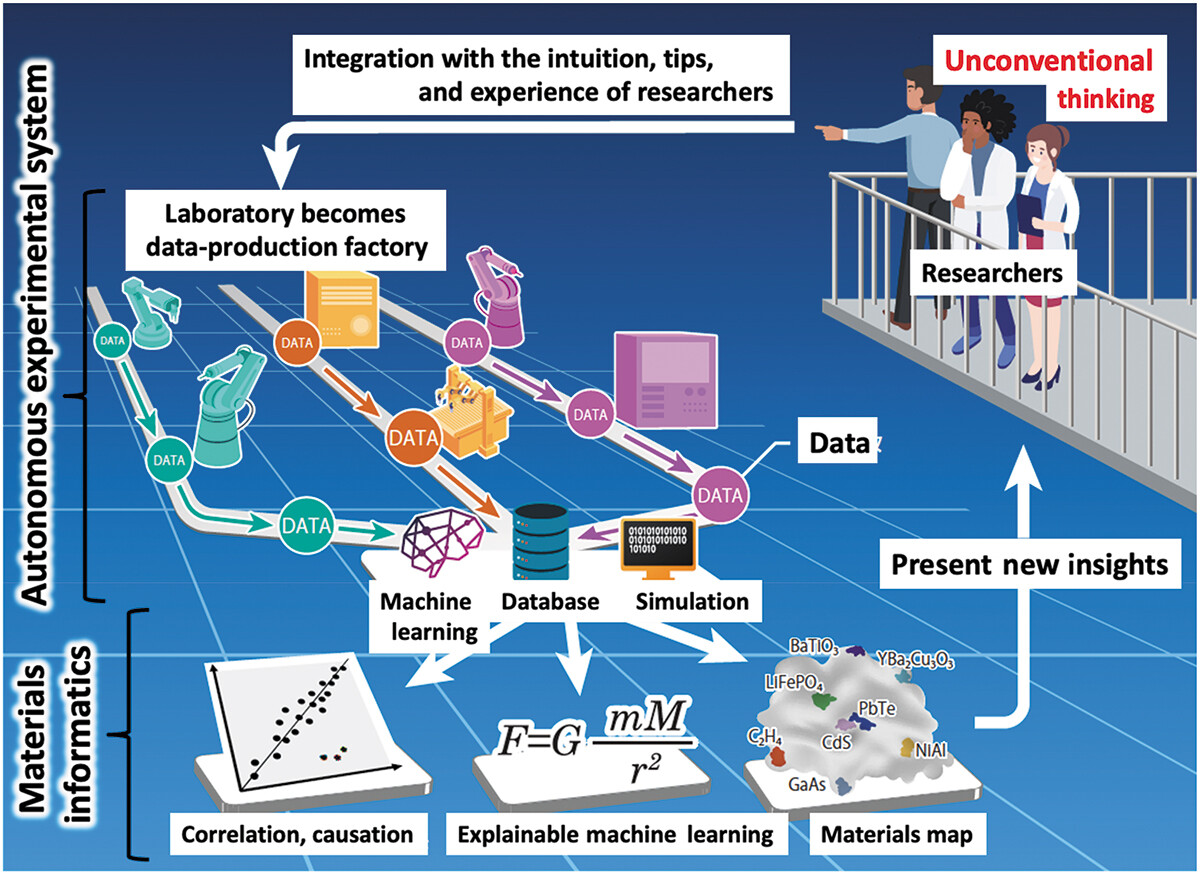

Materials science has been and continues to be an important field of study, particularly as we strive to overcome challenges such as climate change, pollution, and world hunger. And as in many other sciences, the expanding paradigm of autonomous systems, machine learning (ML), and even artificial intelligence (AI) stands to leave a positive mark on how we conduct research on materials of all types. Ishizuki et al. of the Tokyo Institute of Technology and The University of Tokyo echo this sentiment in their 2023 paper published in the journal Science and Technology of Advanced Materials: Methods, which discusses the state of the art of autonomous experimental systems (AESs) and their benefits to materials science laboratories. After introducing the topic, the authors explore the state of the art through other researchers' efforts, drawing conclusions about how AESs can be put to use in the field. After characterizing AESs' use and addressing sociocultural factors such as inventorship and authorship with AI and autonomous systems, the authors conclude that while AESs will provide strong value to materials science labs, "human researchers will always be the main players," and this hybrid automation-human approach "will exponentially accelerate creative research and development, contributing to the future development of science, technology, and industry."

Posted on August 31, 2023

By LabLynx

Journal articles

In this brief paper published in

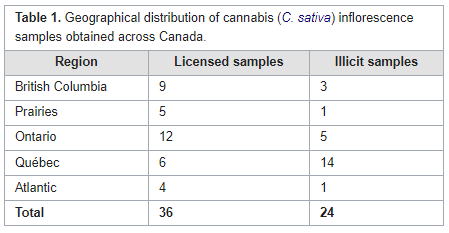

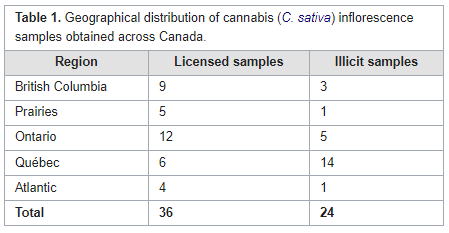

Journal of Cannabis Research, Gagnon

et al. present the results of applying a new analytical technique to quantifying hundreds of pesticides at the same time in cannabis samples. Using a combination of gas chromatography—triple quadrupole mass spectrometry (GC–MS/MS) and liquid chromatography—triple quadrupole mass spectrometry (LC–MS/MS), the authors tested not only cannabis samples from licensed retailers in Canada but also illicit cannabis samples acquired from Health Canada Cannabis Laboratory. Their methodology was fully able to manage the search for hundreds of pesticides, discovering only a handful of semi-unregulated pesticides in legal Canadian cannabis, but a surprising amount of pesticides in illicit samples. The authors conclude that their methodology, as demonstrated, has practical uses going forward towards a wider regulatory approach to ensuring Canadian cannabis is as pesticide-free as possible.

Posted on August 21, 2023

By LabLynx

Journal articles

In this 2023 journal article published in

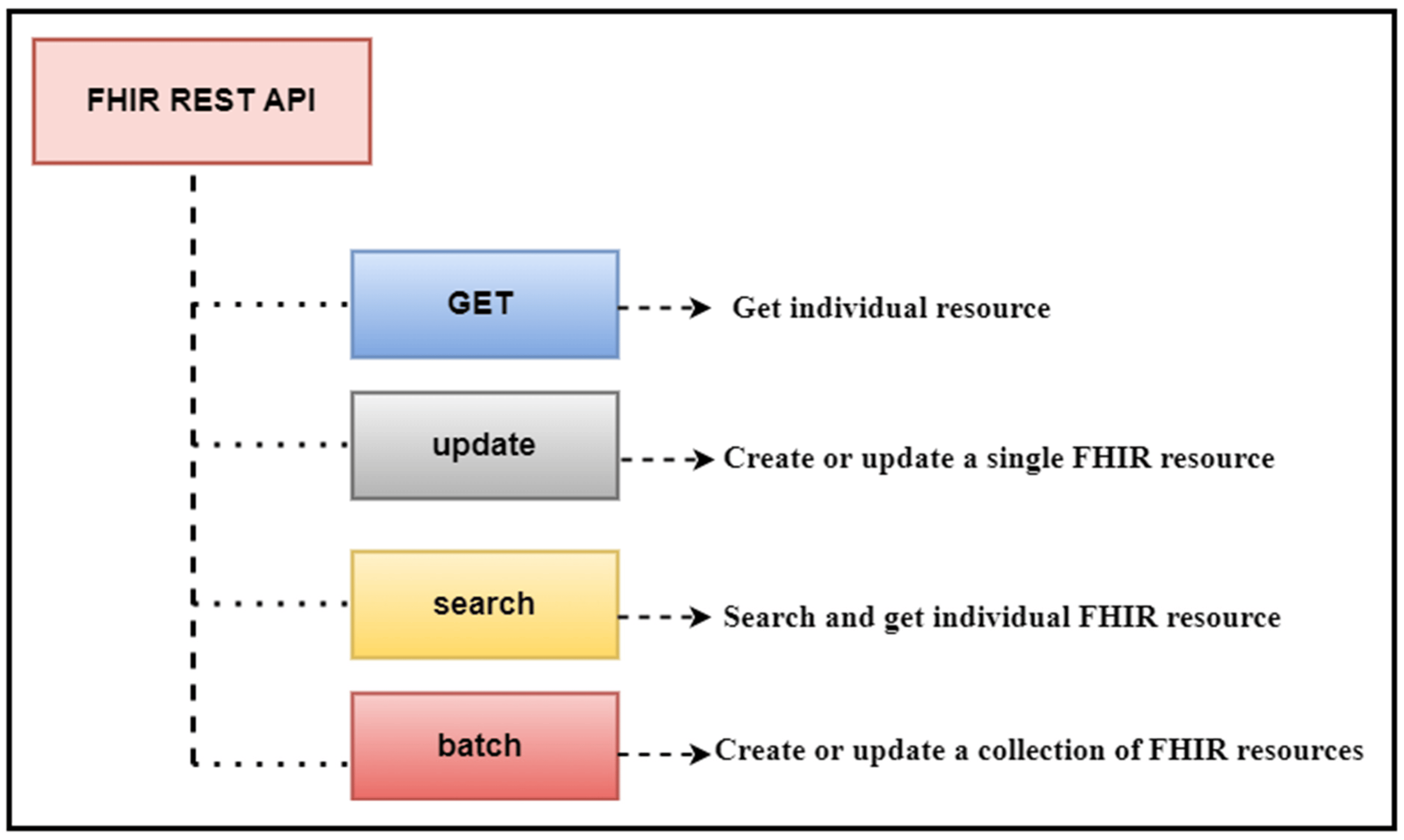

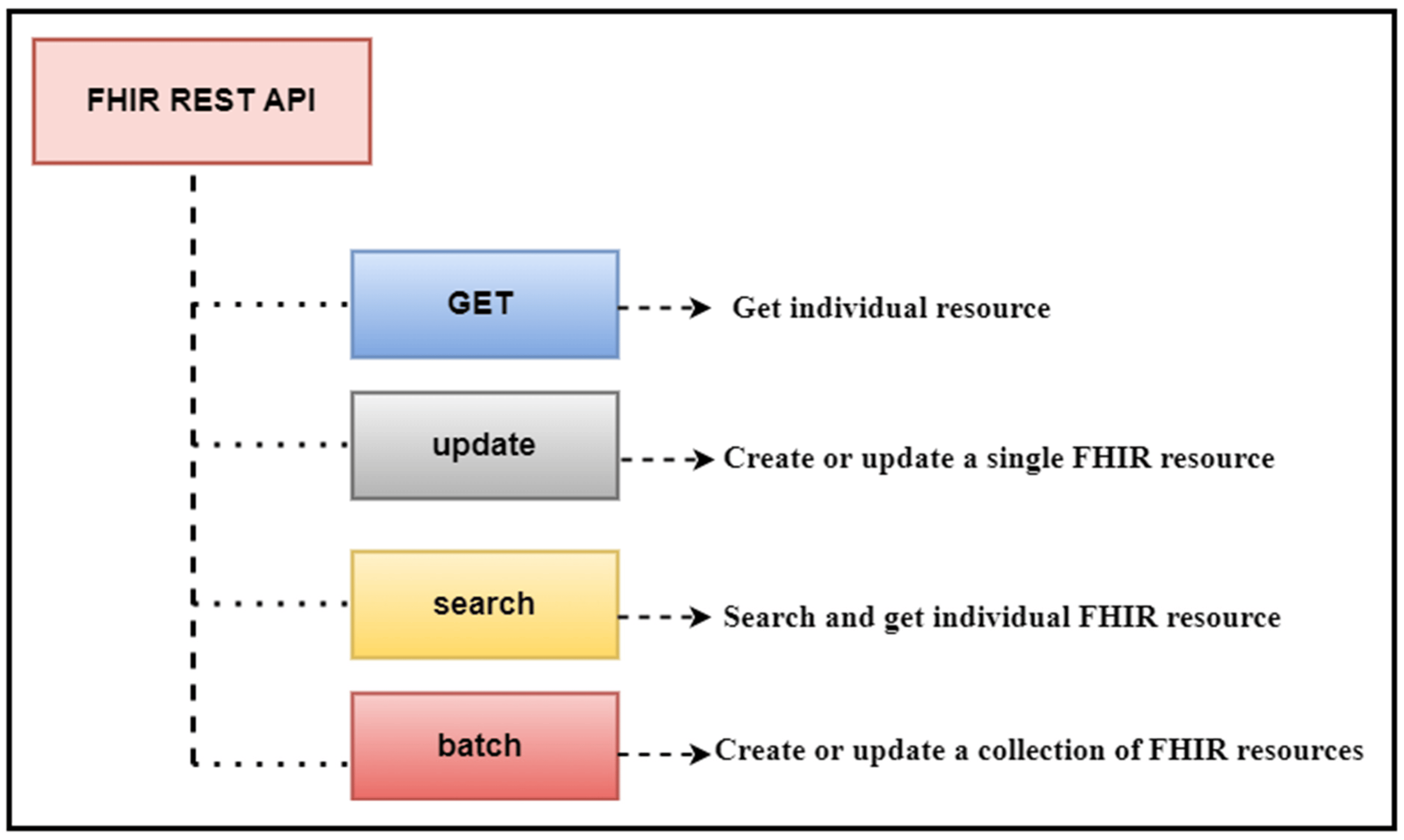

Healthcare, Ayaz

et al. present their work on a "data analytic framework that supports clinical statistics and analysis by leveraging ... Fast Healthcare Interoperability Resources (FHIR)." After providing significant background on the topic, as well as a review of the literature on the topic, the authors discuss the initial preparations that went into developing their framework, followed by the results of their implementation. The authors also present a two-part example with use cases that puts the framework to a test. After discussing the limitations and results of their framework, the authors conclude that it effectively "empowers healthcare users (patients, practitioners, healthcare providers, etc.) to perform advanced data analysis on patient data used in healthcare settings and represented in the FHIR-based standard."
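As a small illustration of the kind of clinical statistic such a framework computes, the sketch below pulls numeric results for one LOINC code out of a FHIR Bundle (using the standard `entry[].resource`, `code.coding[]`, and `valueQuantity` elements) and summarizes them. It works on plain dicts with the Python standard library rather than the authors' framework, and the choice of LOINC 8867-4 (heart rate) in the example is an illustrative assumption.

```python
from statistics import mean, median

LOINC_SYSTEM = "http://loinc.org"


def observation_values(bundle, loinc_code):
    """Collect valueQuantity.value from Observations carrying the given LOINC code."""
    values = []
    for entry in bundle.get("entry", []):
        res = entry.get("resource", {})
        if res.get("resourceType") != "Observation":
            continue  # skip Patients, Encounters, etc. in the same Bundle
        codings = res.get("code", {}).get("coding", [])
        matches = any(c.get("system") == LOINC_SYSTEM and c.get("code") == loinc_code
                      for c in codings)
        qty = res.get("valueQuantity")
        if matches and qty is not None and "value" in qty:
            values.append(qty["value"])
    return values


def summarize(values):
    """Basic descriptive statistics; units are assumed to be consistent already."""
    if not values:
        return {"n": 0}
    return {"n": len(values), "mean": mean(values), "median": median(values),
            "min": min(values), "max": max(values)}


if __name__ == "__main__":
    bundle = {"resourceType": "Bundle", "type": "searchset", "entry": [
        {"resource": {"resourceType": "Observation",
                      "code": {"coding": [{"system": LOINC_SYSTEM, "code": "8867-4"}]},
                      "valueQuantity": {"value": v, "unit": "/min"}}}
        for v in (60, 70, 80)]}
    print(summarize(observation_values(bundle, "8867-4")))
```

A fuller version would also convert units (for example via `valueQuantity.code`) and group results by `subject.reference` before computing per-patient statistics.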

Posted on August 14, 2023

By LabLynx

Journal articles

In this 2023 journal article published in

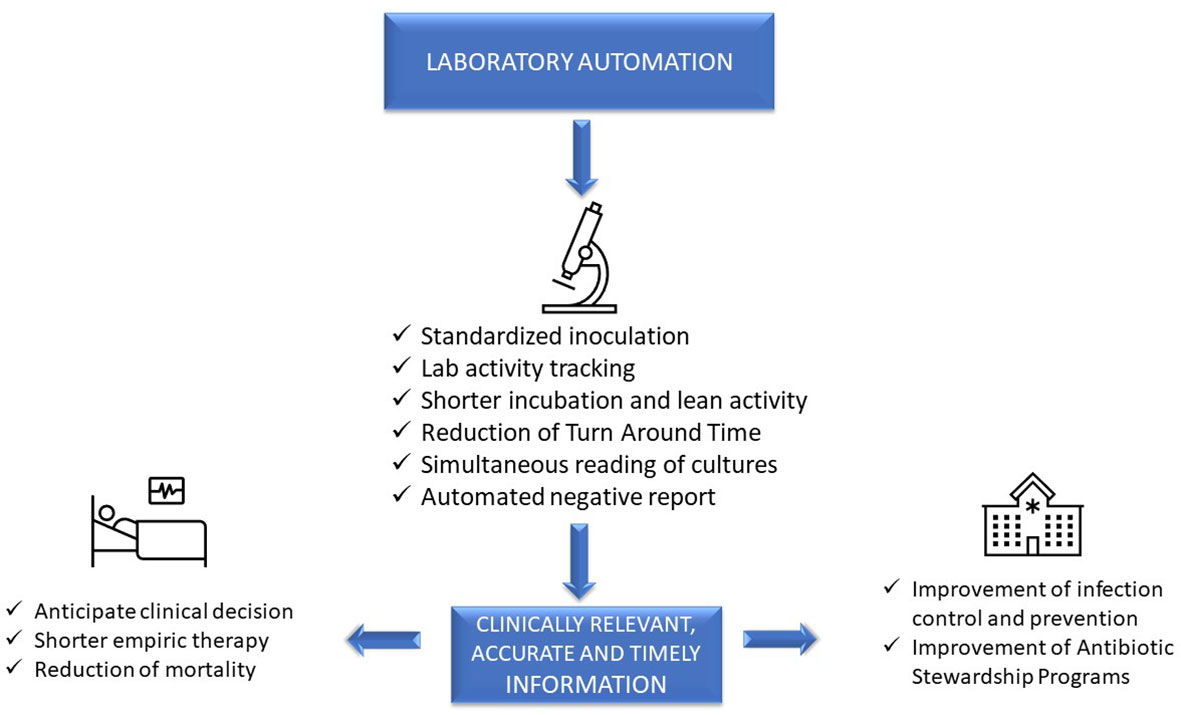

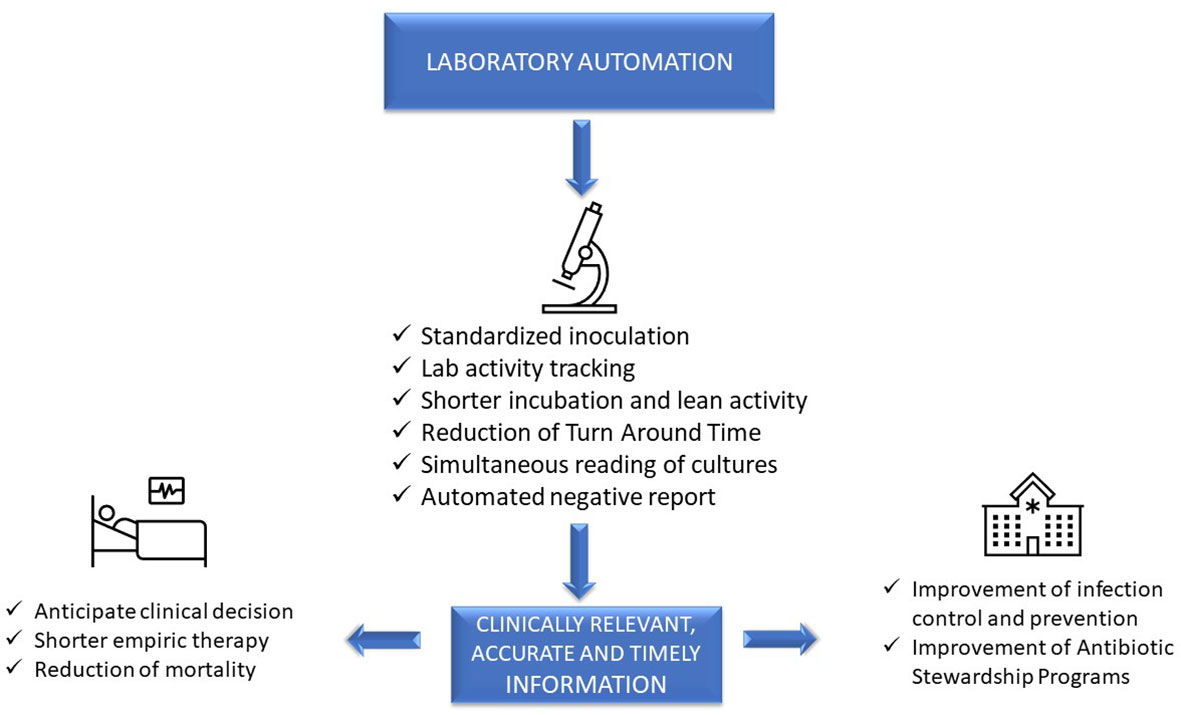

Frontiers in Cellular and Infection Microbiology, Mencacci

et al. examine laboratory automation requirements and demands within the context of clinical microbiology laboratories. Using concepts from “total laboratory automation” (TLA), artificial intelligence (AI), and clinical laboratory informatics, the authors address not only the impact of these technologies on laboratory management and workflows but also on patients and hospital systems. Discussion of the state of the art leads the authors to note that the "implementation of laboratory automation and laboratory informatics can support integration into routine practice monitoring specimens’ quality, isolation of specific pathogens, alert reports for infection control practitioners, and real-time collection of lab trend data, all essential for the prevention and control of infections and epidemiological studies." The authors conclude that "prioritizing laboratory practices in a patient-oriented approach can be used to optimize technology advances for improved patient care."

Posted on August 7, 2023

By LabLynx

Journal articles

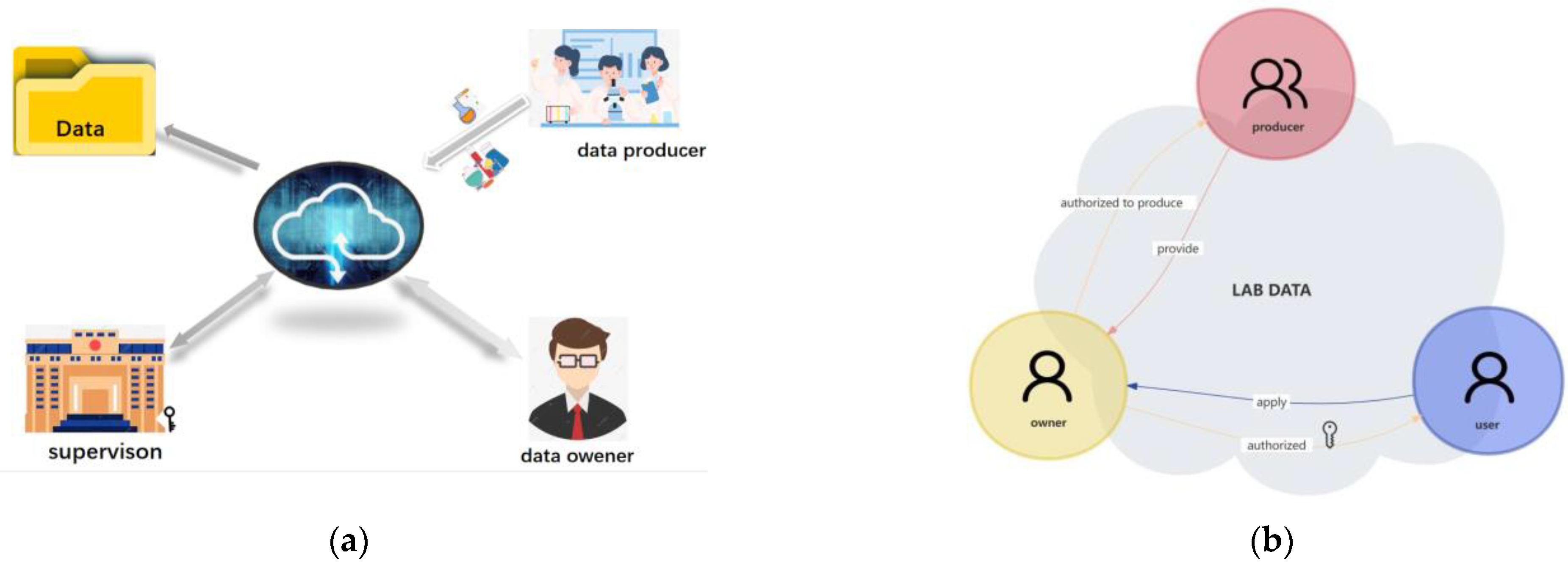

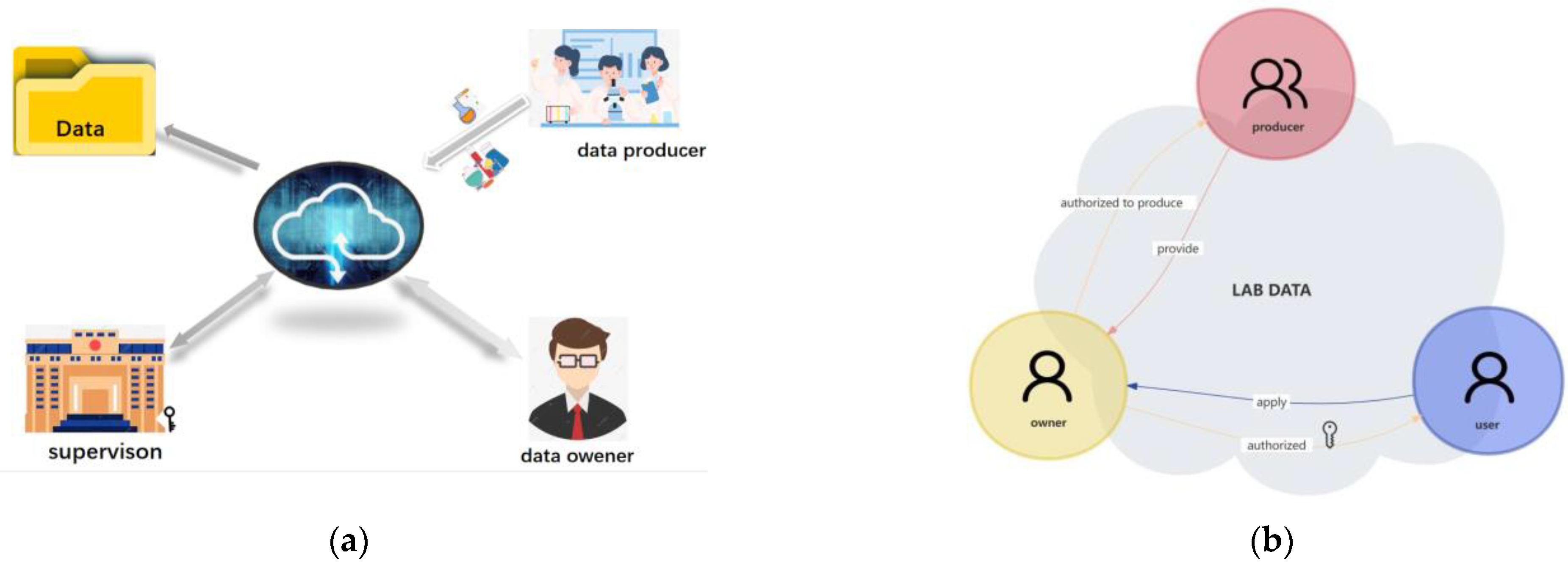

"Islands" of information and data that are dispersed across an organization irregularly can lead to inefficiencies in workflows and missed opportunities to improve or expand the organization. This statement also holds true for college and university laboratories, who equally must face down issues with untimely data, lackadaisical data security, and ownership-related issues. Zheng

et al. tackle this issue with their data-ownership-based security architecture, presented in this journal article published in

Electronics. After providing an introduction and related work, the authors provide in-depth details of the methodology used to develop their secure lab data management architecture, followed by example cases using four different algorithms to find the most suitable solution for authorized encryption and decryption of shared data across the proposed system., which allows "relevant units, universities, and laboratories to encrypt data with public keys and upload them to the data register center to form a data directory." They conclude "that the proposed strategy is secure and efficient for lab data sharing across [multiple] domains."

Posted on July 31, 2023

By LabLynx

Journal articles

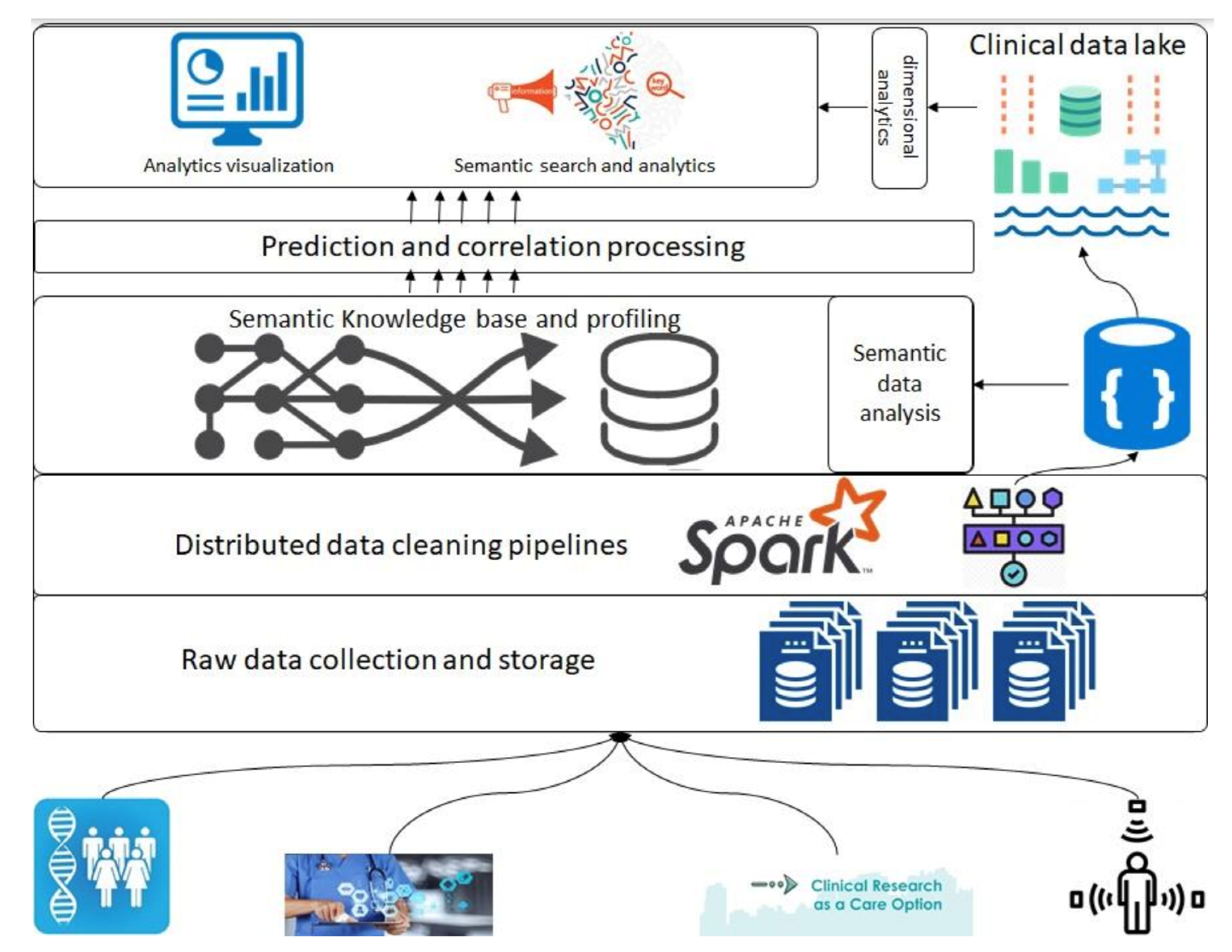

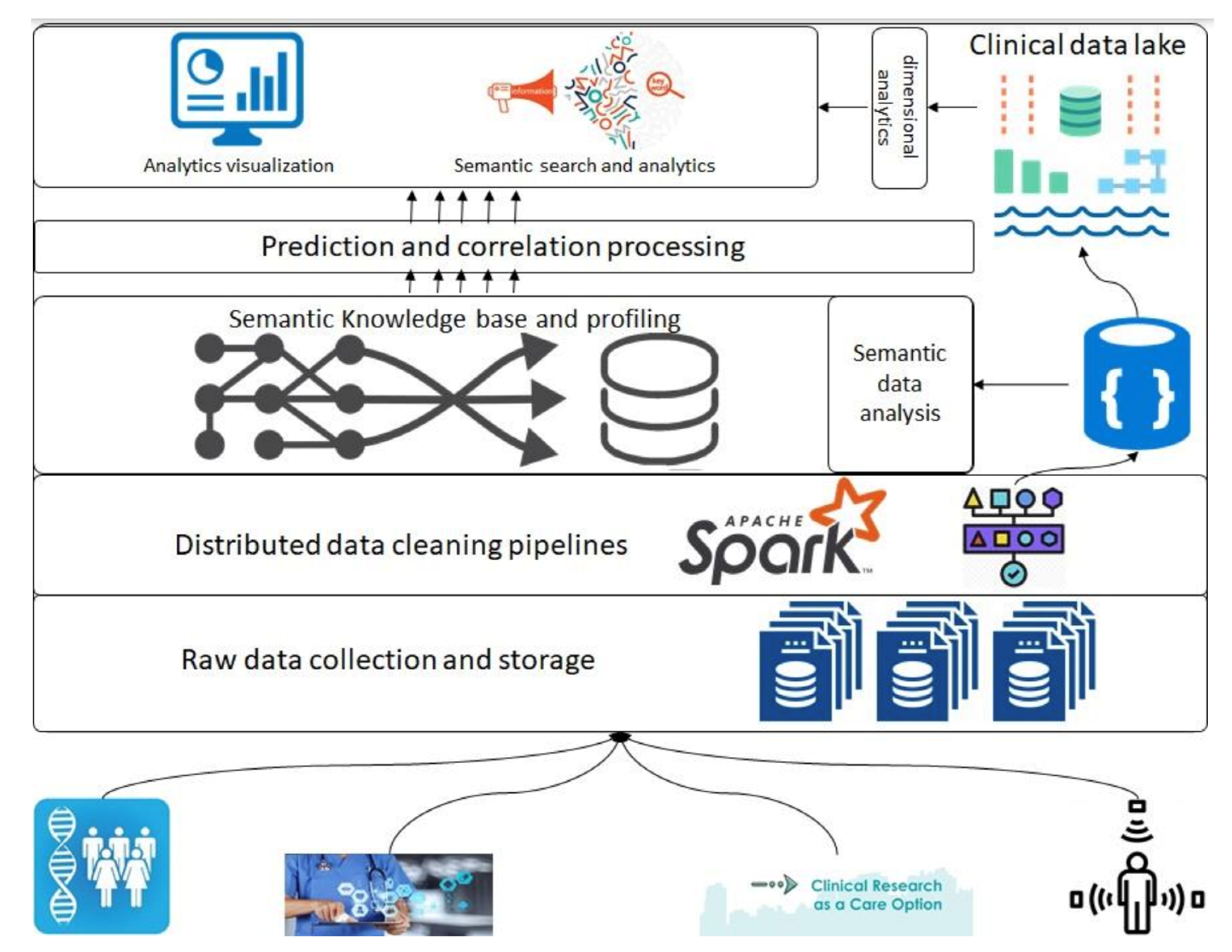

In this 2023 article published in the journal Healthcare, Siddiqi et al. propose a "health informatics framework that supports data acquisition from various sources in real-time, correlates these data from various sources among each other and to the domain-specific terminologies, and supports querying and analyses." After providing background on the need for such a framework and discussing the state of the art, the authors describe their methodology in detail, followed by an experimental use case demonstrating the framework. The authors conclude that the "framework is extendable, encapsulates the abilities to include other domains, provides support for semantic querying at the governance level (i.e., at the data assets and type assets level), and is designed towards a data and computation economic model," while at the same time supporting "evidence-based decision tracking ... which follows the FAIR principles."

Posted on July 24, 2023

By LabLynx

Journal articles

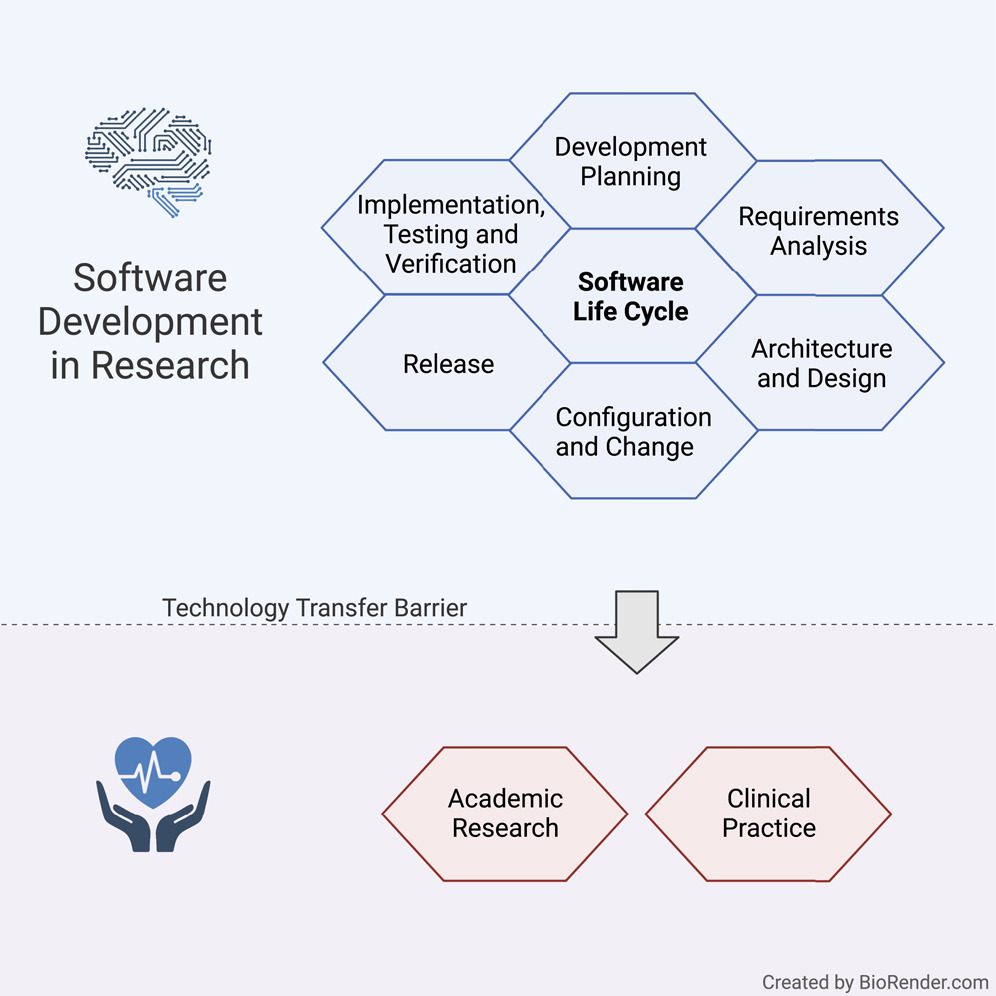

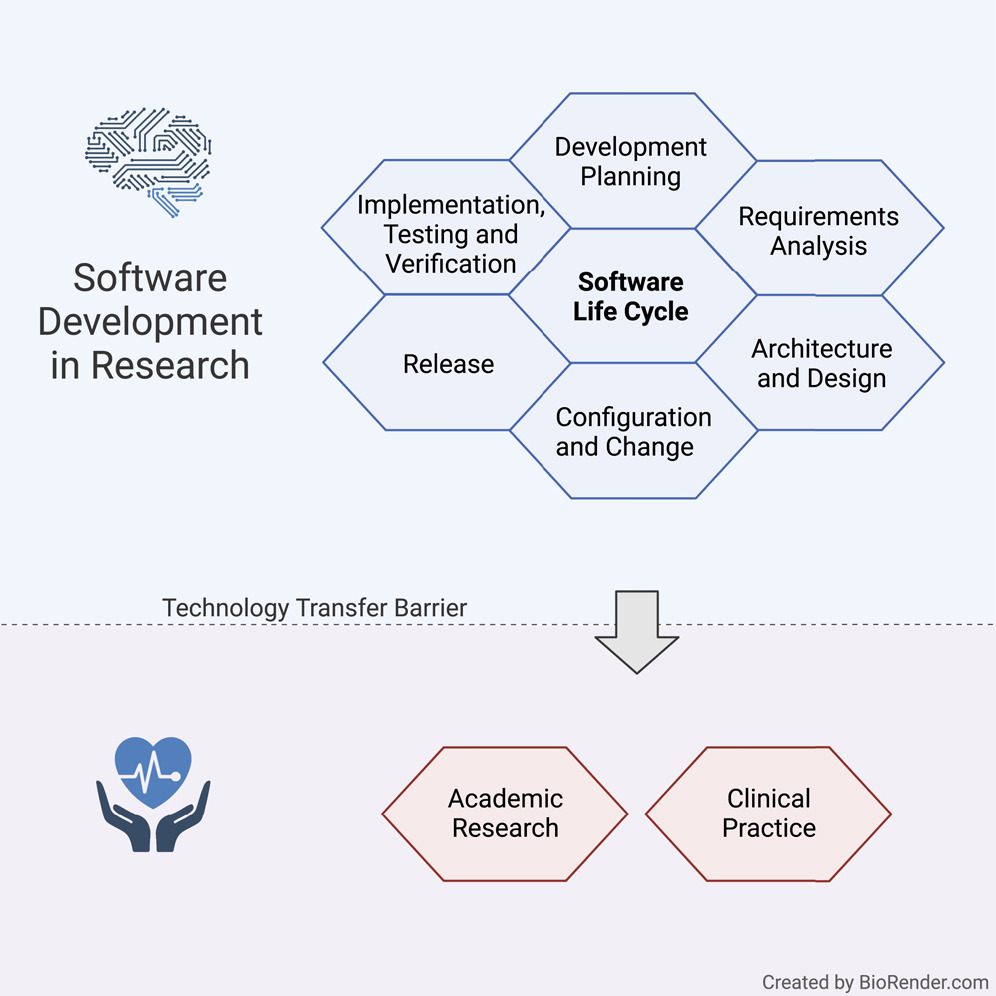

In this 2022 journal article published in iScience, Hauschild et al. present a set of guidelines for practicing the academic software life cycle (SLC) within medical labs and research institutes, where commercial industry SLC standards are less applicable. As part of their guidelines, the authors "propose a subset of elements that we are convinced will provide a significant benefit to research settings while keeping the development effort within a feasible range." After an introduction, the authors get into the details of their guidelines, addressing the various stages of the SLC and how they are best applied in academic medical settings. They close with a brief discussion, concluding that their guideline "lowers the barriers to a potential technology transfer toward the medical industry," while providing "a comprehensive checklist for a successful SLC."

In this 2023 paper published in JMIR Medical Informatics, Henke et al. of Technische Universität Dresden present the results of their effort to add "incremental loading" to the Medical Informatics in Research and Care in University Medicine's (MIRACUM's) clinical trial recruitment support systems (CTRSSs). Those CTRSSs already allows bulk loading of German-based FHIR data, supporting "the possibilities for multicentric and even international studies," but MIRACUM needed greater efficiencies when updating such data on a daily, incremental basis. The paper presents their literature review and approach to adding incremental loading to their systems. They conclude that the extract-transfer-load (ETL) "process no longer needs to be executed as a bulk load every day" with the change, instead being able to rely on "using bulk load for an initial load and switching to incremental load for daily updates." They add that this process has international applicability and is not limited to German FHIR data.

In this 2023 paper published in JMIR Medical Informatics, Henke et al. of Technische Universität Dresden present the results of their effort to add "incremental loading" to the Medical Informatics in Research and Care in University Medicine's (MIRACUM's) clinical trial recruitment support systems (CTRSSs). Those CTRSSs already allows bulk loading of German-based FHIR data, supporting "the possibilities for multicentric and even international studies," but MIRACUM needed greater efficiencies when updating such data on a daily, incremental basis. The paper presents their literature review and approach to adding incremental loading to their systems. They conclude that the extract-transfer-load (ETL) "process no longer needs to be executed as a bulk load every day" with the change, instead being able to rely on "using bulk load for an initial load and switching to incremental load for daily updates." They add that this process has international applicability and is not limited to German FHIR data.

In this 2023 paper published in the Journal of Cannabis Research, Johnson et al. examine the current state of hemp-derived delta-9-tetrahydrocannabinol (Δ9-THC) products on the U.S. market after the passage of the Agriculture Improvement Act of 2018. Noting discrepancies and loopholes in the legislation, the authors performed laboratory analyses on 53 hemp-derived Δ9-THC products from 48 brands, while also examining aspects such as age verification, labeling consistency, and comparison of reported company values vs. analyzed values. After describing their methodology and results, the authors conclude that "the legal status of hemp-derived Δ9-THC products in America essentially permits their open sale while placing very few requirements on the companies selling them." The end result includes finding, for example, products "that have 3.7 times the THC content of edibles in adult-use states," as well as inaccurately labeled products.

In this 2023 paper published in the Journal of Cannabis Research, Johnson et al. examine the current state of hemp-derived delta-9-tetrahydrocannabinol (Δ9-THC) products on the U.S. market after the passage of the Agriculture Improvement Act of 2018. Noting discrepancies and loopholes in the legislation, the authors performed laboratory analyses on 53 hemp-derived Δ9-THC products from 48 brands, while also examining aspects such as age verification, labeling consistency, and comparison of reported company values vs. analyzed values. After describing their methodology and results, the authors conclude that "the legal status of hemp-derived Δ9-THC products in America essentially permits their open sale while placing very few requirements on the companies selling them." The end result includes finding, for example, products "that have 3.7 times the THC content of edibles in adult-use states," as well as inaccurately labeled products.

"Artificial intelligence" (AI) may seem like a buzzword akin to the "nanotechnology" craze of the 2000s, but it is inevitably finding its way into scientific applications, including in the materials sciences. In this December 2022 article published in npj Computational Materials, Sbailò et al. present their AI-driven Novel Materials Discovery (NOMAD) toolkit as an extension of their NOMAD Repository & Archive, focused on making materials science data FAIR (findable, accessible, interoperable, and reusable), as well as AI-ready. After introducing the details of their workspace and goals towards adding notebook-based tools to the NOMAD Repository & Archive, the authors dive into the details of NOMAD AI Toolkit. They close by noting their toolkit "offers a selection of notebooks demonstrating such [AI-based] workflows, so that users can understand step by step what was done in publications and readily modify and adapt the workflows to their own needs." They add that the system "will allow for enhanced reproducibility of data-driven materials science papers and dampen the learning curve for newcomers to the field."

"Artificial intelligence" (AI) may seem like a buzzword akin to the "nanotechnology" craze of the 2000s, but it is inevitably finding its way into scientific applications, including in the materials sciences. In this December 2022 article published in npj Computational Materials, Sbailò et al. present their AI-driven Novel Materials Discovery (NOMAD) toolkit as an extension of their NOMAD Repository & Archive, focused on making materials science data FAIR (findable, accessible, interoperable, and reusable), as well as AI-ready. After introducing the details of their workspace and goals towards adding notebook-based tools to the NOMAD Repository & Archive, the authors dive into the details of NOMAD AI Toolkit. They close by noting their toolkit "offers a selection of notebooks demonstrating such [AI-based] workflows, so that users can understand step by step what was done in publications and readily modify and adapt the workflows to their own needs." They add that the system "will allow for enhanced reproducibility of data-driven materials science papers and dampen the learning curve for newcomers to the field."

In this 2023 article published in Journal of Clinical and Diagnostic Research, Naphade et al. provide a brief introductory-level review of the importance of quality control (QC) to the clinical laboratory. After a brief introduction on clinical lab testing, the authors analyze the wide variety of sources for laboratory errors, covering the pre-analytical, analytical. and post-analytical phases. They then introduce the concept of quality control, followed by explaining how QC is implemented in the laboratory, including through the use of QC materials, statistical control charts, and shifts and trends. They conclude this review by stating that "reliable and confident laboratory testing avoids misdiagnosis, delayed treatment, and unnecessary costing of repeat testing." They add that given these benefits, "the individual laboratory should assess and analyze their own QC process to find out the possible root cause of any digressive test results which are not correlating with patients' clinical presentation or expected response to treatment."

In this 2023 paper published in the journal Scientific Data, Ghiringhelli et al. present the results of an international workshop at which materials scientists discussed metadata and data formats as they relate to data sharing in materials science. Focusing on the FAIR principles (findable, accessible, interoperable, and reusable), the authors introduce the data management needs of materials science and the value of metadata in making shareable data FAIR. They then examine specific use cases, such as electronic-structure calculations, potential-energy sampling, and managing metadata for computational workflows. They also address the nuances of file formats and the metadata schemas associated with them. They close with an outlook on the use of ontologies in materials science, followed by conclusions that heavily emphasize "the importance of developing a hierarchical and modular metadata schema in order to represent the complexity of materials science data and allow for access, reproduction, and repurposing of data, from single-structure calculations to complex workflows." They add: "the biggest benefit of meeting the interoperability challenge will be to allow for routine comparisons between computational evaluations and experimental observations."

In this 2023 journal article published in the International Journal of Molecular Sciences, Jadhav et al. recommend a more comprehensive approach to analyzing the Cannabis plant, its extracts, and its constituents. Drawing on the food industry, they point to the still-evolving concept of authentomics, "which involves combining the non-targeted analysis of new samples with big data comparisons to authenticated historic datasets," as a paradigm worth shifting towards. After an introduction to metabolomics and a review of its various technologies, the authors examine the current state of cannabis laboratory testing, how it is regulated and standardized, a variety of issues that make cannabis testing less consistent, and how cannabis can become adulterated along the grow chain. They then discuss authentomics, apply it to the cannabis industry, and address what considerations must be made in the future to make the most of it. The authors conclude that an authentomics approach "provides a robust method for verifying the quality of cannabis products."

In this 2023 article published in Science and Technology of Advanced Materials: Methods, Ishii et al. describe their cross-institutional effort to integrate X-ray absorption fine structure (XAFS) spectra and associated metadata across multiple databases, with the goal of improving materials informatics processes and research methods for materials discovery and analysis. Their new public database, MDR XAFS DB, was developed towards this goal while addressing two main issues with unifying such data: the difficulties of "designing and collecting metadata describing spectra and sample details, and unifying the vocabulary used in the metadata, including not only metadata items (keys) but also descriptions (values)." After discussing the system's construction and contents, the authors conclude that their system "has achieved seamless cross searchability with the use of sample nomenclature so that database users do not have to be aware of the differences in the local metadata of the facilities that provide the data." While acknowledging that challenges remain and that a culture of open data has yet to truly take hold in materials science, the authors express hope "that this initiative will be a trigger to promote the utilization of materials data."

Forensic laboratories must keep quality foremost in mind in nearly everything they do. Standards like ISO/IEC 17025 help such labs maintain that focus through a quality management system (QMS). But even with a strong focus on quality, inconsistencies in how quality issues are recorded, managed, and reported can be problematic. To that point, Heavey et al. survey government-based forensic service provider agencies in Australia and New Zealand in this 2023 journal article to gain insight into potential QMS weaknesses. After thoroughly reviewing the methodology and results of their survey, the authors conclude that "the need for further research into the standardization of systems underpinning the management of quality issues in forensic science" is evident from their research. They add that there is a greater need "to support transparent and reliable justice outcomes" by improving "evidence-based standard terminology for quality issues in forensic science to support data sharing and reporting, enhance understanding of quality issues, and promote transparency in forensic science."

In this brief 2023 article published in the journal Tomography, Michele Larobina of Consiglio Nazionale delle Ricerche provides background on "the innovation, advantages, and limitations of adopting DICOM and its possible future directions." After an introduction to the Digital Imaging and Communications in Medicine (DICOM) standard, Larobina emphasizes the benefits of the standard's approach to handling image metadata, followed by its data exchange component. Larobina then examines the strengths and weaknesses of DICOM before concluding that it has not only demonstrated "the positive influence and added value that a standard can have in a specific field," but also "encouraged and facilitated data exchange between researchers, creating added value for research." Despite this, "the time is ripe to review some of the initial directives" of DICOM, says Larobina, in order for the standard to remain relevant going forward.

In this article published in BMC Medical Education, Xu et al. present their four-stage clinical biochemistry teaching methodology, which incorporates elements of the International Organization for Standardization's ISO 15189 standard. Noting students' lack of awareness of ISO 15189 Medical laboratories — Requirements for quality and competence, and of the value its emphasis on quality management brings to laboratories, the authors set out to incorporate the standard into a case-based learning (CBL) approach. After describing the details of their approach and a survey-based analysis of its outcomes, the authors conclude that beyond improving student outcomes in clinical biochemistry, "ISO 15189 concepts of continuous improvement were implanted, thereby putting the concept of plan–do–check–act (PDCA) into practice, developing recording habits, and improving communication skills."

In this 2023 article published in the journal Water, Marebesi et al. of the University of Georgia present their findings on the phytoremediation of cadmium (Cd)-contaminated soils using day-neutral and photoperiod-sensitive hemp plants. After a thorough introduction to using Cannabis plants for phytoremediation (including the flowers), the authors present their materials and methods, focusing on the Apricot Auto, Alpha Explorer, Von, and T1 hemp varieties. They then present their experimental results, addressing plant height, biomass yield, Cd concentrations in various plant tissues, nutrient partitioning, and THC/CBD concentrations. They conclude that "while Cd concentration was significantly higher in roots, all four varieties were efficient in translocating Cd from roots to shoots." They also conclude that remediation was associated with THC/CBD loss in some cases, and that it likewise affected plant height and biomass. Finally, they highlight the importance of testing flowers for Cd in medical markets where the plant is used.

As technological, cultural, and environmental shifts continue to put pressure on the ways academic and private research is conducted, researchers must strive even harder to get the most out of their work. This holds true across a broad swath of scientific fields, including materials science. Noting the continuing expansion of laboratory automation and software-based tools like artificial intelligence (AI) and machine learning (ML), Tamura et al. emphasize that materials science and novel materials research can benefit greatly from adopting these tools. In this 2023 paper, the authors present their take on this trend in the form of NIMS-OS, a Python-based system developed to enable fully automated materials discovery experiments. After introducing the topic, the authors discuss the development philosophy and approach behind NIMS-OS, while also describing the use of both the Python command-line tool and the graphical user interface (GUI)-driven version of the software. The authors conclude that their system allows "various problems for automated materials exploration to be commonly performed," while at the same time being "designed to be easily adaptable to a variety of original robotic systems in materials science."

In this 2023 article published in the journal Sensors, Lehmann et al. of the Mannheim University of Applied Sciences present their work towards a research data management (RDM) system for their Center for Mass Spectrometry and Optical Spectroscopy (CeMOS). The proof-of-concept RDM system leans on digital twin (DT) technology to answer the challenges of managing research data from multiple instruments at their institute, while also addressing the principles of making data more findable, accessible, interoperable, and reusable (FAIR). After introducing the topic and reviewing related work, the authors lay out their conceptual architecture and discuss use cases in the context of their spectrometry and spectroscopy work. They then propose an implementation, build a proof of concept, and evaluate that proof of concept within the implementation's framework. After discussing their results, the authors conclude that their efforts contribute to the literature in five ways: addressing RDM within the FAIR principles, highlighting the value of DTs, developing a top-level knowledge graph, elaborating on reactive vs. proactive DTs, and providing a practical approach to DTs in RDM at larger research institutes. They also note caveats and outline future work that still needs to be done.

Materials science has been and continues to be an important field of study, particularly as we strive to overcome challenges such as climate change, pollution, and world hunger. And as in many other sciences, the expanding paradigm of autonomous systems, machine learning (ML), and even artificial intelligence (AI) stands to leave a positive mark on how we conduct research on materials of all types. Ishizuki et al. of the Tokyo Institute of Technology and The University of Tokyo echo this sentiment in their 2023 paper published in the journal Science and Technology of Advanced Materials: Methods, which discusses the state of the art of autonomous experimental systems (AESs) and their benefits to materials science laboratories. After introducing the topic, the authors explore the state of the art through other researchers' efforts, drawing conclusions about how AESs can be put to use in the field. After characterizing AESs' use and addressing sociocultural factors such as inventorship and authorship involving AI and autonomous systems, the authors conclude that while AESs will provide strong value to materials science labs, "human researchers will always be the main players," and that this hybrid automation-human approach "will exponentially accelerate creative research and development, contributing to the future development of science, technology, and industry."

In this brief paper published in the Journal of Cannabis Research, Gagnon et al. present the results of applying a new analytical technique that quantifies hundreds of pesticides simultaneously in cannabis samples. Using a combination of gas chromatography–triple quadrupole mass spectrometry (GC–MS/MS) and liquid chromatography–triple quadrupole mass spectrometry (LC–MS/MS), the authors tested not only cannabis samples from licensed retailers in Canada but also illicit cannabis samples acquired from the Health Canada Cannabis Laboratory. Their methodology successfully handled the search for hundreds of pesticides, finding only a handful of semi-unregulated pesticides in legal Canadian cannabis but a surprising number of pesticides in the illicit samples. The authors conclude that their methodology has practical value going forward in supporting a wider regulatory approach to ensuring Canadian cannabis is as pesticide-free as possible.

In this 2023 journal article published in Healthcare, Ayaz et al. present their work on a "data analytic framework that supports clinical statistics and analysis by leveraging ... Fast Healthcare Interoperability Resources (FHIR)." After providing substantial background and a review of the relevant literature, the authors discuss the initial preparations that went into developing their framework, followed by the results of their implementation. The authors also present a two-part example with use cases that puts the framework to the test. After discussing the framework's limitations and results, the authors conclude that it effectively "empowers healthcare users (patients, practitioners, healthcare providers, etc.) to perform advanced data analysis on patient data used in healthcare settings and represented in the FHIR-based standard."

In this 2023 journal article published in Frontiers in Cellular and Infection Microbiology, Mencacci et al. examine laboratory automation requirements and demands in the context of clinical microbiology laboratories. Drawing on concepts from "total laboratory automation" (TLA), artificial intelligence (AI), and clinical laboratory informatics, the authors address the impact of these technologies not only on laboratory management and workflows but also on patients and hospital systems. Their discussion of the state of the art leads the authors to note that the "implementation of laboratory automation and laboratory informatics can support integration into routine practice monitoring specimens' quality, isolation of specific pathogens, alert reports for infection control practitioners, and real-time collection of lab trend data, all essential for the prevention and control of infections and epidemiological studies." The authors conclude that "prioritizing laboratory practices in a patient-oriented approach can be used to optimize technology advances for improved patient care."

"Islands" of information and data that are irregularly dispersed across an organization can lead to inefficient workflows and missed opportunities to improve or expand the organization. This also holds true for college and university laboratories, which must likewise contend with untimely data, lax data security, and ownership-related issues. Zheng et al. tackle these problems with their data-ownership-based security architecture, presented in this journal article published in Electronics. After providing an introduction and reviewing related work, the authors detail the methodology used to develop their secure lab data management architecture, followed by example cases that use four different algorithms to find the most suitable solution for authorized encryption and decryption of shared data across the proposed system, which allows "relevant units, universities, and laboratories to encrypt data with public keys and upload them to the data register center to form a data directory." They conclude "that the proposed strategy is secure and efficient for lab data sharing across [multiple] domains."

In this 2023 article published in the journal Healthcare, Siddiqi et al. propose a "health informatics framework that supports data acquisition from various sources in real-time, correlates these data from various sources among each other and to the domain-specific terminologies, and supports querying and analyses." After providing background on the need for such a framework and discussing the state of the art, the authors describe their methodology in detail, followed by an experimental use case demonstrating the framework. The authors conclude that the "framework is extendable, encapsulates the abilities to include other domains, provides support for semantic querying at the governance level (i.e., at the data assets and type assets level), and is designed towards a data and computation economic model," while at the same time supporting "evidence-based decision tracking ... which follows the FAIR principles."

In this 2022 journal article published in iScience, Hauschild et al. present a set of guidelines for practicing the academic software life cycle (SLC) within medical labs and research institutes, where commercial industry SLC standards are less applicable. As part of their guidelines, the authors "propose a subset of elements that we are convinced will provide a significant benefit to research settings while keeping the development effort within a feasible range." After a solid introduction, the authors detail their guidelines, addressing each stage of the SLC and how it is best applied in medical academic settings. They close with a brief discussion, concluding that their guideline "lowers the barriers to a potential technology transfer toward the medical industry," while providing "a comprehensive checklist for a successful SLC."