Posted on March 8, 2021

By LabLynx

Journal articles

A data management plan (DMP) is a formally developed document that describes how data shall be handled both during research efforts and afterwards. However, as Weng and Thoben point out in this 2020 paper, "it [is] a problem that DMPs [typically] do not include a structured approach for the identification or mitigation of risks" to that research data. The authors develop and propose a generic risk catalog for researchers to use in the development of their DMP, while demonstrating that catalog's value. After providing an introduction and related work, they present their catalog, developed from interviews with researchers across multiple disciplines. They then compare that catalog to 13 DMPs published in the journal RIO. They conclude that their approach is "useful for identifying general risks in DMPs" and that it may also be useful to research "funders, since it makes it possible for them to evaluate basic investment risks of proposed projects."

Posted on March 1, 2021

By LabLynx

Journal articles

Cannabis-related businesses (CRBs) in U.S. states where cannabis is legal have long had difficulties finding the banking and financial services they require in a market where the federal government continues to consider cannabis illegal. Working in cash-only situations while trying to keep careful accounting, all while trying to keep the business afloat and clear of legal troubles, remains a challenge. This late 2020 paper by Owens-Ott recognizes that challenge and examines it further, delving into the world of the certified public accountant (CPA) and the role—or lack thereof—they are willing to play working with CRBs. The author conducted a qualitative study asking 1. why some CPAs are unwilling to provide services to CRBs, 2. how CRBs compensate for the lack of those services, and 3. what CPAs need to know in order to provide effective services to CRBs. Owens-Ott concludes that "competent and knowledgeable CPAs" willing to work with CRBs are out there, but the CPAs should be carefully vetted. And interested CPAs "must commit to acquiring and maintaining substantial specialized knowledge related to Tax Code Section 280E, internal controls for a cash-only or cash-intensive business, and the workings of the cannabis industry under the current regulatory conditions."

Posted on February 24, 2021

By LabLynx

Journal articles

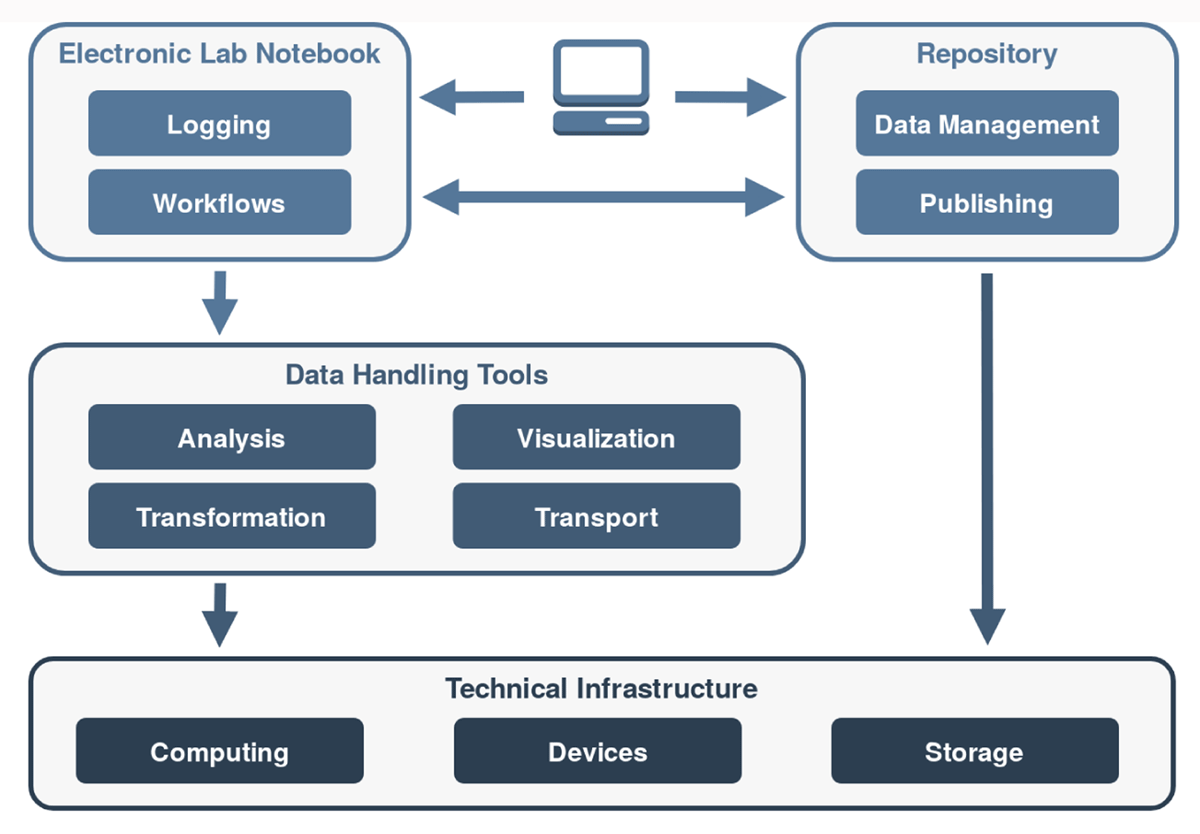

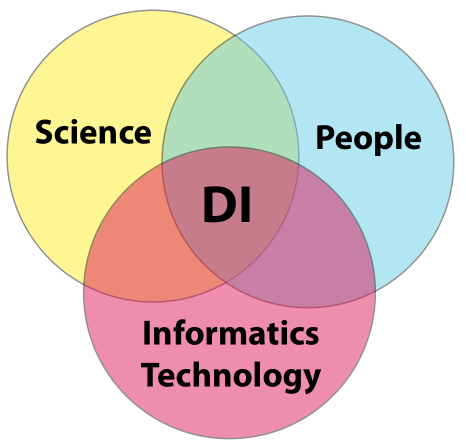

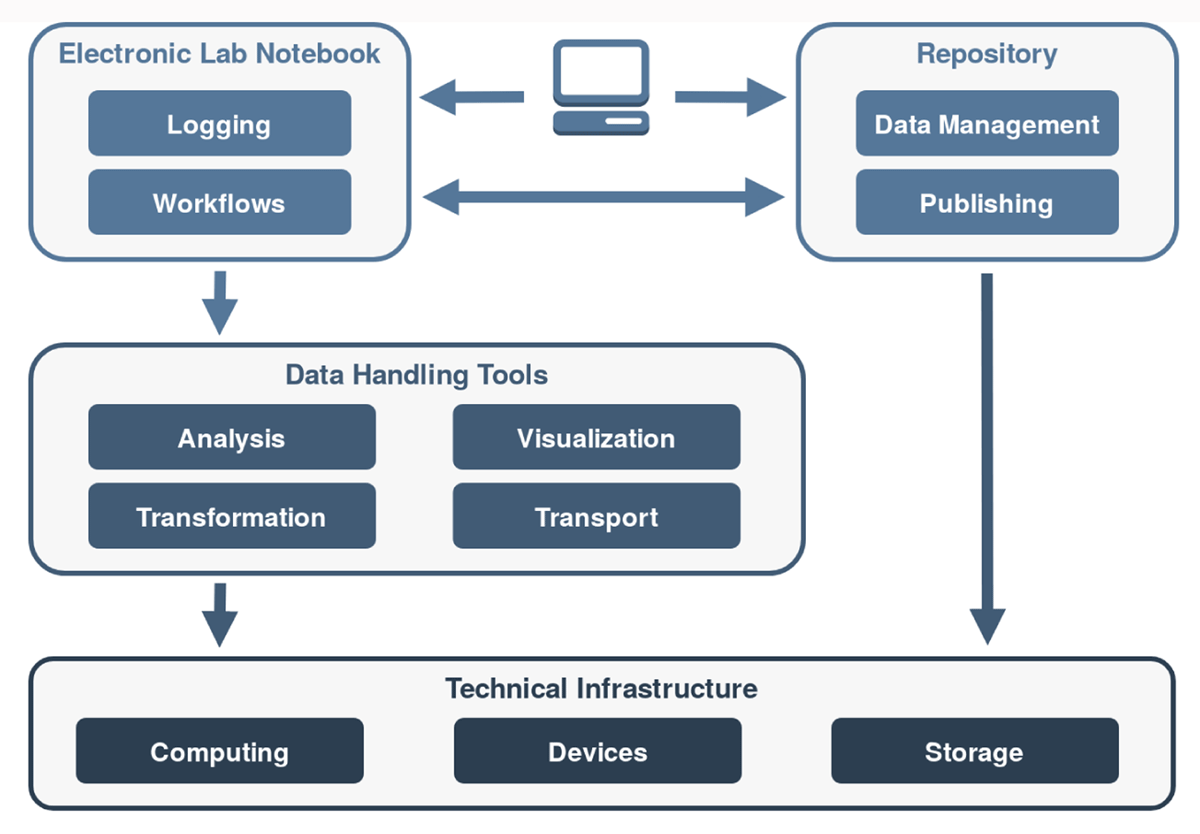

This 2021 paper published in Data Science Journal explains Kadi4Mat, a combination electronic laboratory notebook (ELN) and data repository for better managing research and analyses in materials sciences. Noting the difficulty of extracting and using data obtained from experiments and simulations in the materials sciences, as well as the increasing levels of complexity in those experiments and simulations, Brandt et al. sought to develop a comprehensive informatics solution capable of handling structured data storage and exchange in combination with documented and reproducible data analysis and visualization. Noting a wealth of open-source components for such an informatics project, the team provides a conceptual overview of the system and then digs into the details of its development using various open-source components. They then provide screenshots of records and data (and metadata) management, as well as workflow management. The authors conclude their solution helps manage "heterogeneous use cases of materials science disciplines," while being extensible enough to extend "the research data infrastructure to other disciplines" in the future.

Posted on February 15, 2021

By LabLynx

Journal articles

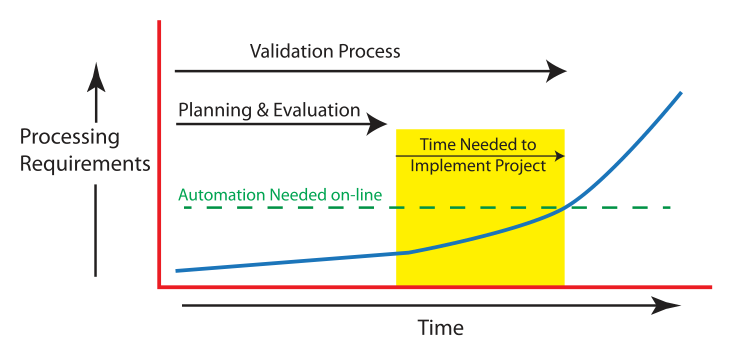

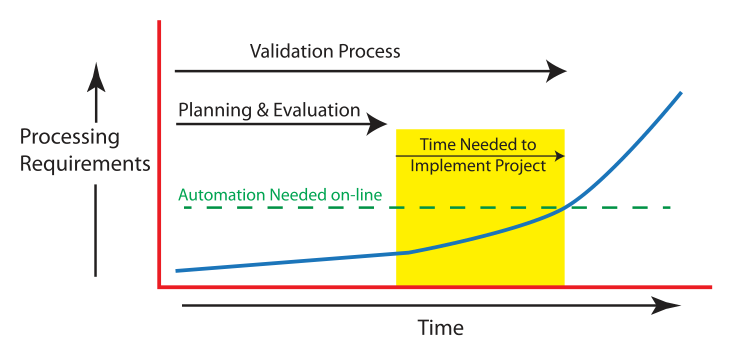

This guide by laboratory automation veteran Joe Liscouski takes an updated look at the state of implementing laboratory automation, with a strong emphasis on the importance of planning and management as early as possible. It also addresses the need for laboratory systems engineers (LSEs)—an update from laboratory automation engineers (LAEs)—and the institutional push required to develop educational opportunities for those LSEs. After looking at labs then and now, the author examines the transition to more automated labs, followed by seven detailed goals required for planning and managing that transition. He then closes by discussing LSEs and their education, concluding finally that "laboratory technology challenges can be addressed through planning activities using a series of goals," and that the lab of the future will implement those goals as early as possible, with the help of well-qualified LSEs fluent in both laboratory science and IT.

Posted on February 8, 2021

By LabLynx

Journal articles

This white paper by laboratory automation veteran Joe Liscouski examines what it takes to realize a fully integrated laboratory informatics infrastructure, a strong pivot for many labs. He begins by discussing the worries and concerns of stakeholders about automation job losses, how AI and machine learning can play a role, and what equipment may be used. He then goes into a lengthy discussion about how to specifically plan for automation, and at what level (no, partial, or full automation), as well as what it might cost. The author wraps up with a brief look at the steps required for actual project management. The conclusion? "Just as the manufacturing industries transitioned from cottage industries to production lines and then to integrated production-information systems, the execution of laboratory science has to tread a similar path if the demands for laboratory results are going to be met in a financially responsible manner." This makes "laboratory automation engineering" and planning a critical component of laboratories from the start.

Posted on February 1, 2021

By LabLynx

Journal articles

In this brief journal article published in Data Intelligence, Weigel et al. make their case for improving data object findability in order to benefit data and workflow management procedures for researchers. They argue that even with findable, accessible, interoperable, and reusable (FAIR) principles getting more attention, findability is the starting point before anything else can be done, and that "support at the data infrastructure level for better automation of the processes dealing with data and workflows" is required. They succinctly describe the essential requirements for automating those processes, and then provide the basic building blocks that make up a potential solution, including, in the long term, the application of machine learning. They conclude that adding automation to improve findability means that "researchers producing data can spend less time on data management and documentation, researchers reusing data and workflows will have access to metadata on a wider range of objects, and research administrators and funders may benefit from deeper insight into the impact of data-generating workflows."

Posted on January 25, 2021

By LabLynx

Journal articles

This companion piece to last week's article on laboratory turnaround time (TAT) sees Cassim et al. take their experience developing and implementing a system for tracking TAT in a high-volume laboratory and assess the actual impact such a system has. Using a retrospective study design and root cause analyses, the group looked at TAT outcomes over 122 weeks from a busy clinical pathology laboratory in a hospital in South Africa. After describing their methodology and results, the authors discuss the nuances of their results, including significant lessons learned. They conclude that not only is monitoring TAT to ensure timely reporting of results important, but also "vertical audits" of the results help identify bottlenecks for correction. They also emphasize "the importance of documenting and following through on corrective actions" associated with both those audits and the related quality management system in place at the lab.

Posted on January 18, 2021

By LabLynx

Journal articles

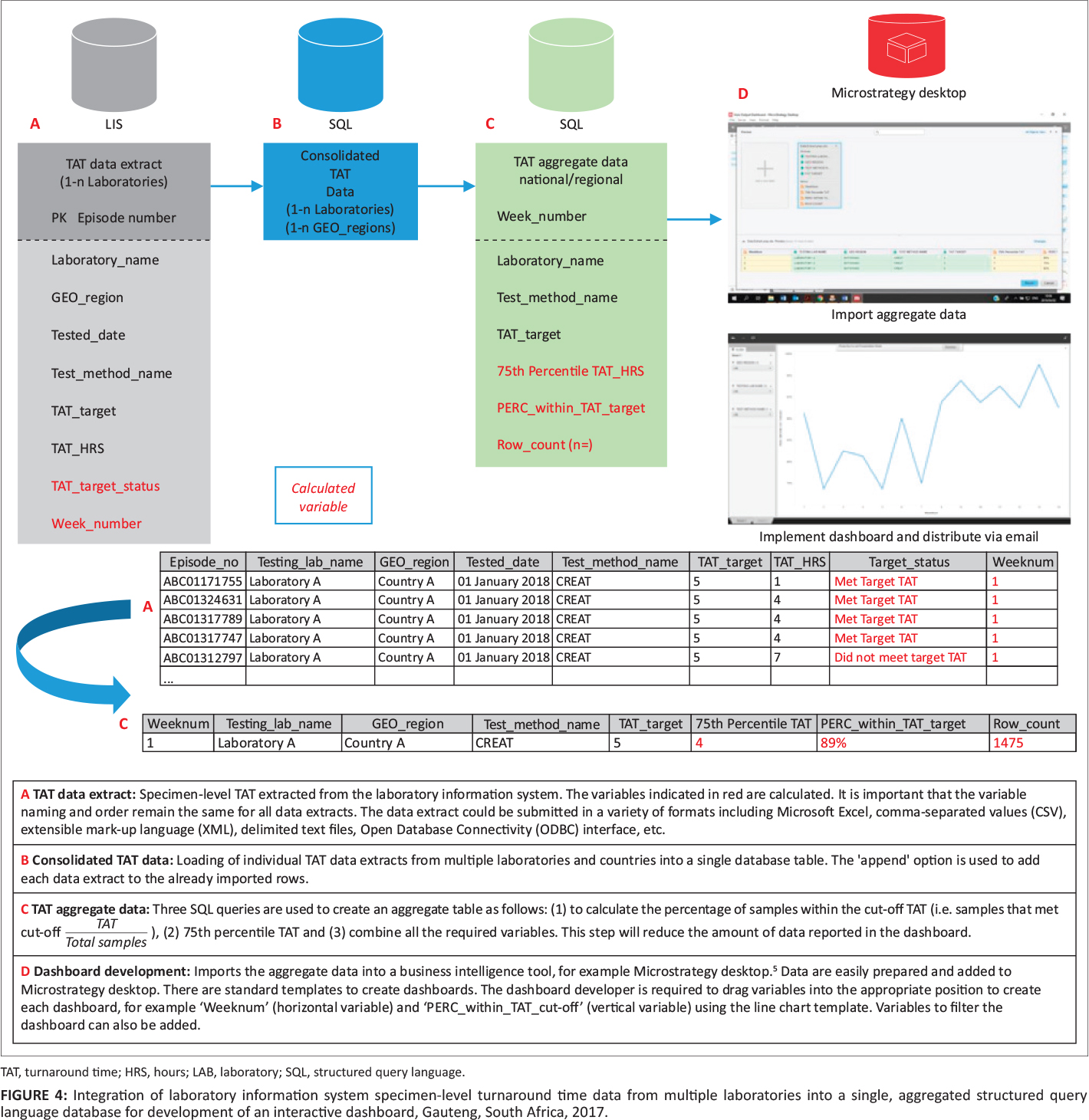

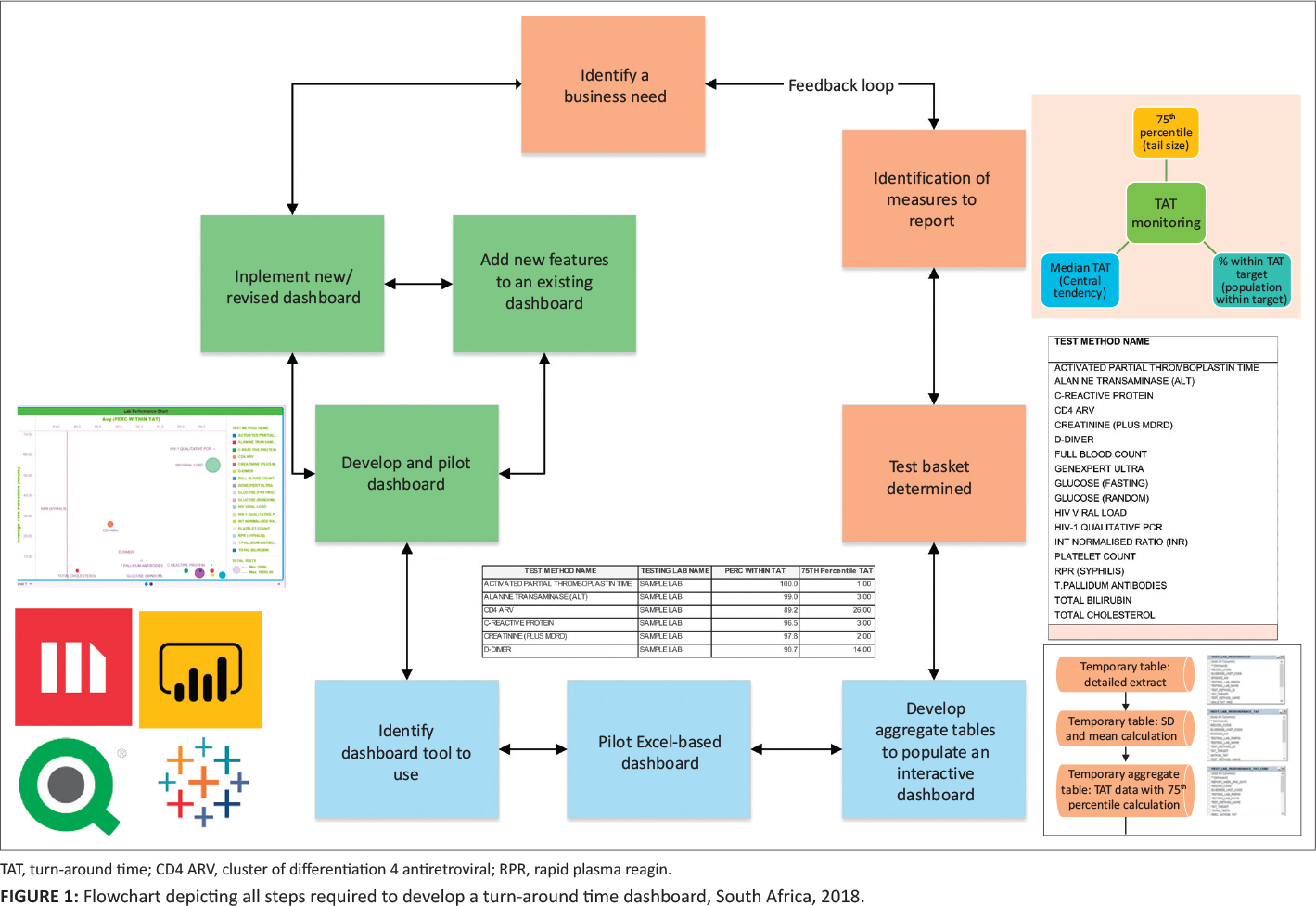

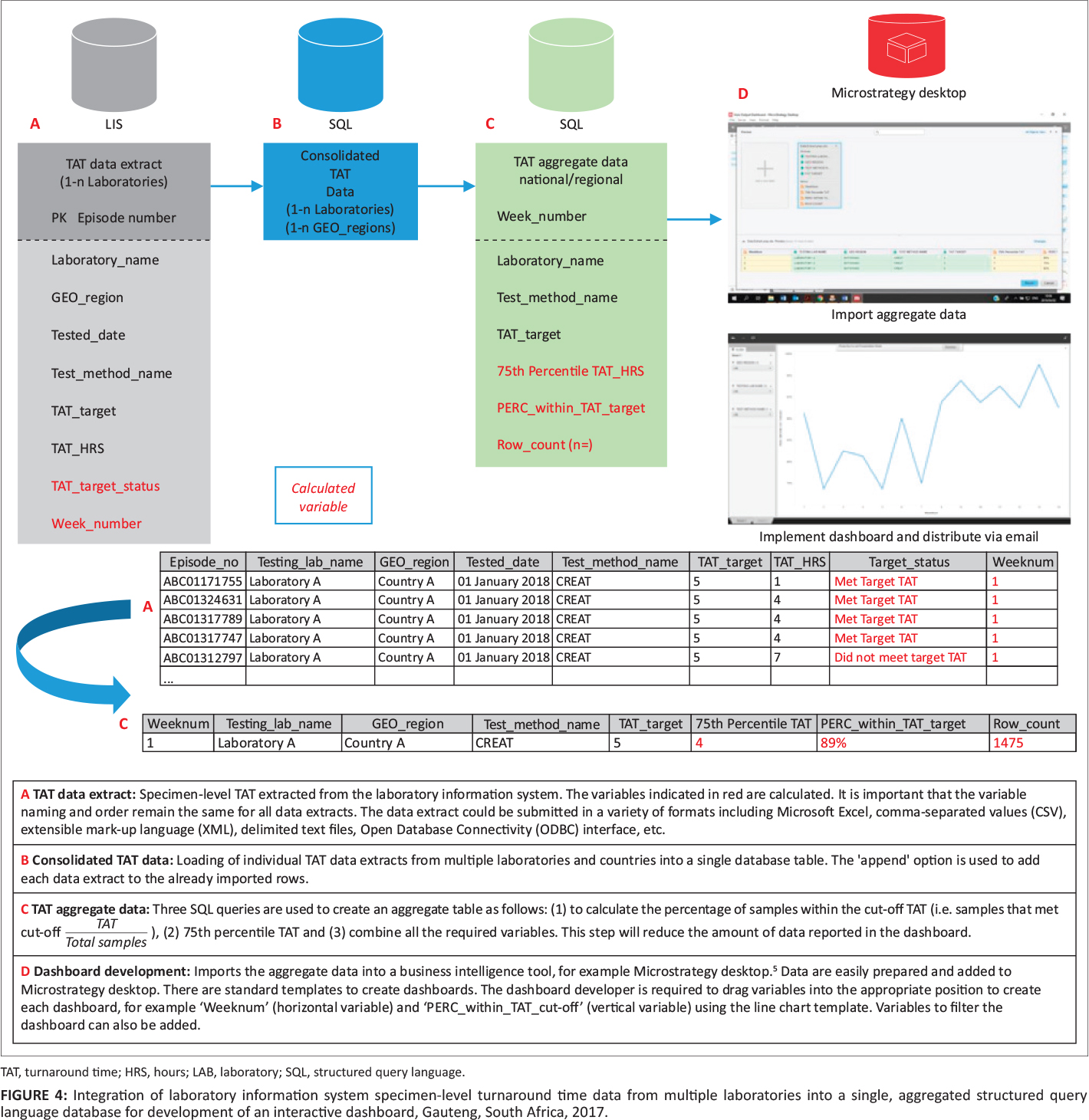

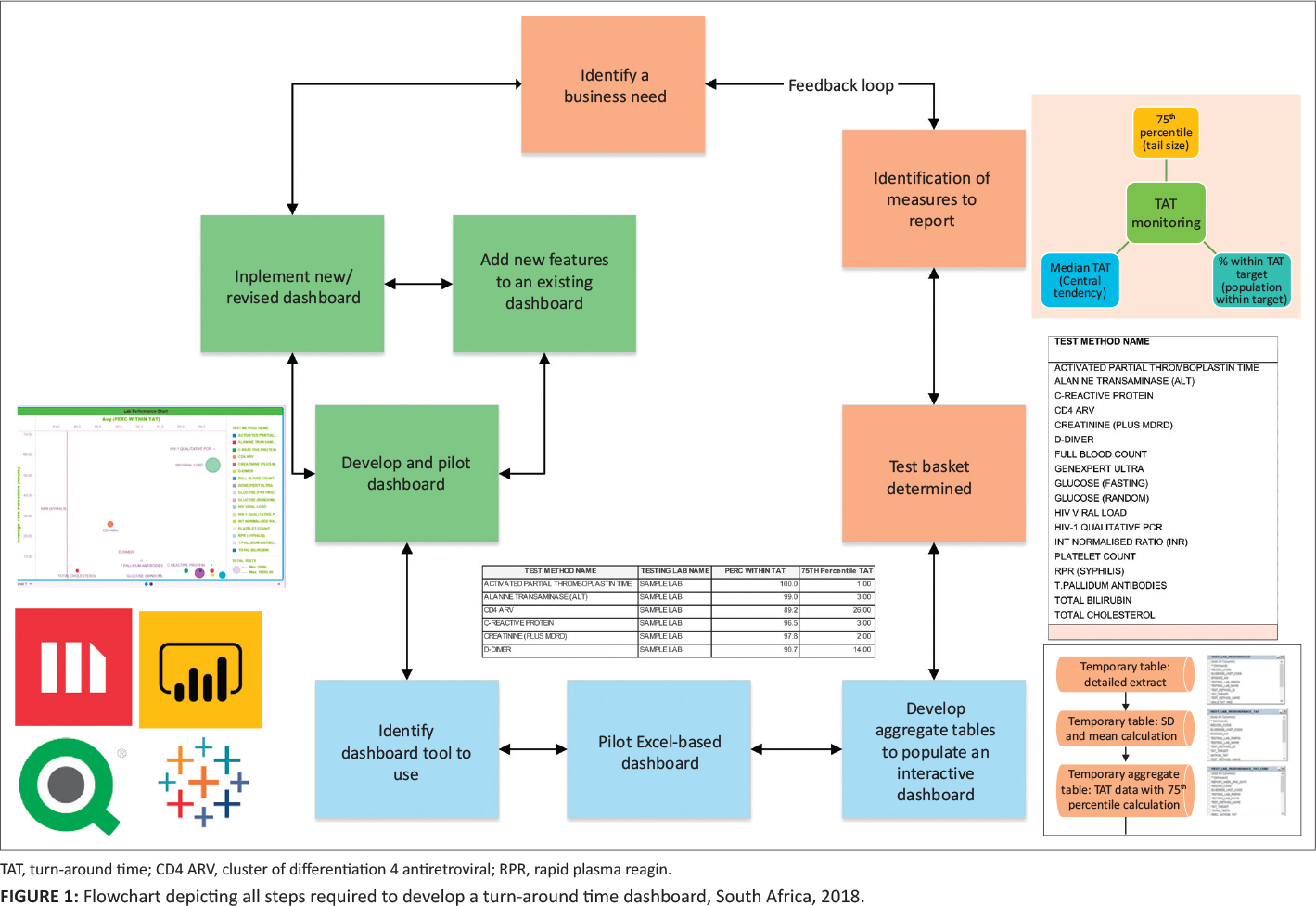

In this 2020 article published in the African Journal of Laboratory Medicine, Cassim et al. describe their experience developing and implementing a system for tracking turnaround time (TAT) in a high-volume laboratory. Seeking weekly reports of turnaround time, to better react to changes and improve policy, the authors developed a dashboard-based system for the South African National Health Laboratory Service and tested it in one of their higher-volume labs to assess the TAT monitoring system's performance. They conclude that while their dashboard "enables presentation of weekly TAT data to relevant business and laboratory managers, as part of the overall quality management portfolio of the organization," the actual act of "providing tools to assess TAT performance does not in itself imply corrective action or improvement." They emphasize that the success of such a tool requires training on the system, system performance measurements, gradual quality improvements, and a leadership-promoted business culture that supports the use of such data.

Posted on January 11, 2021

By LabLynx

Journal articles

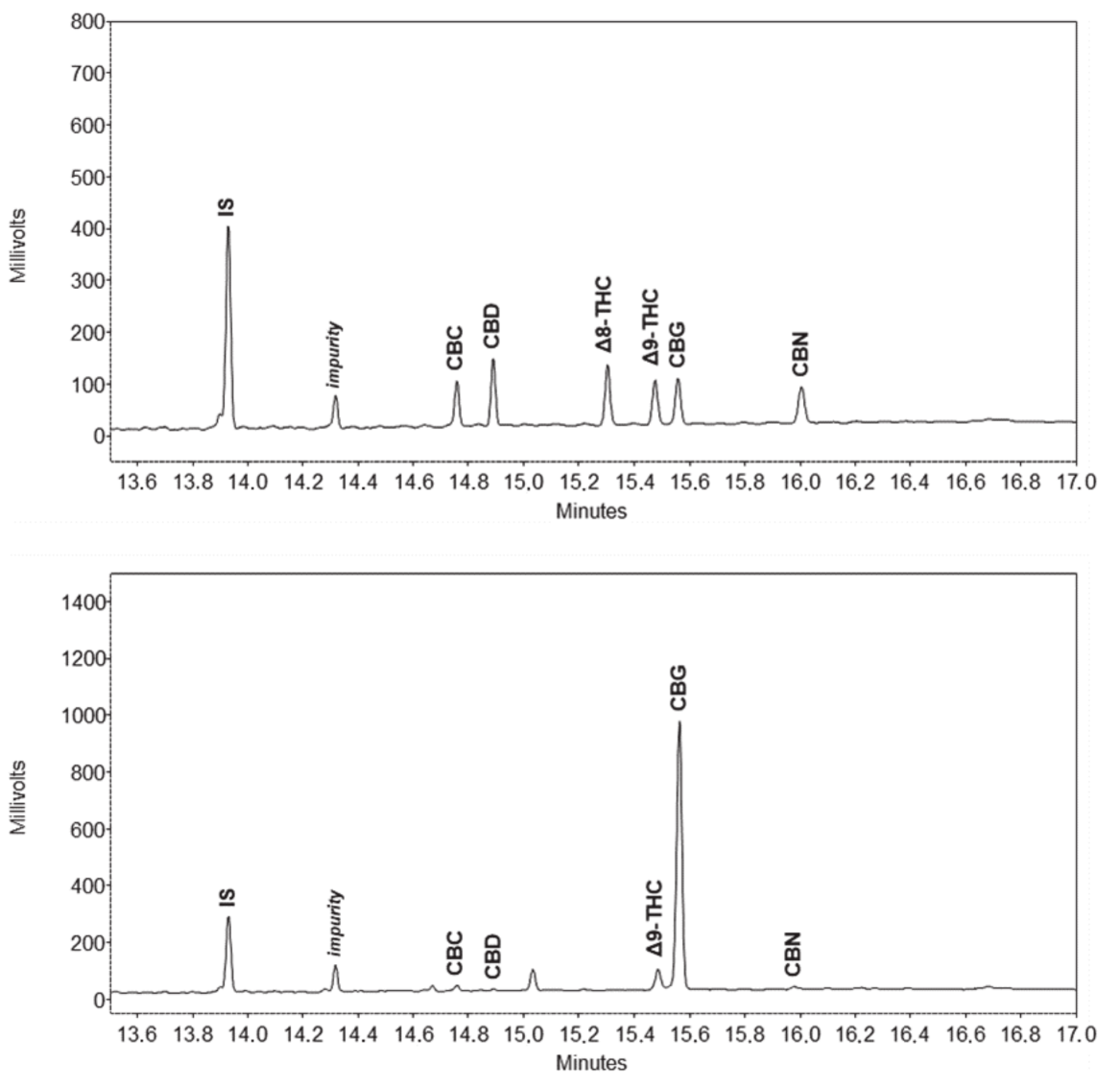

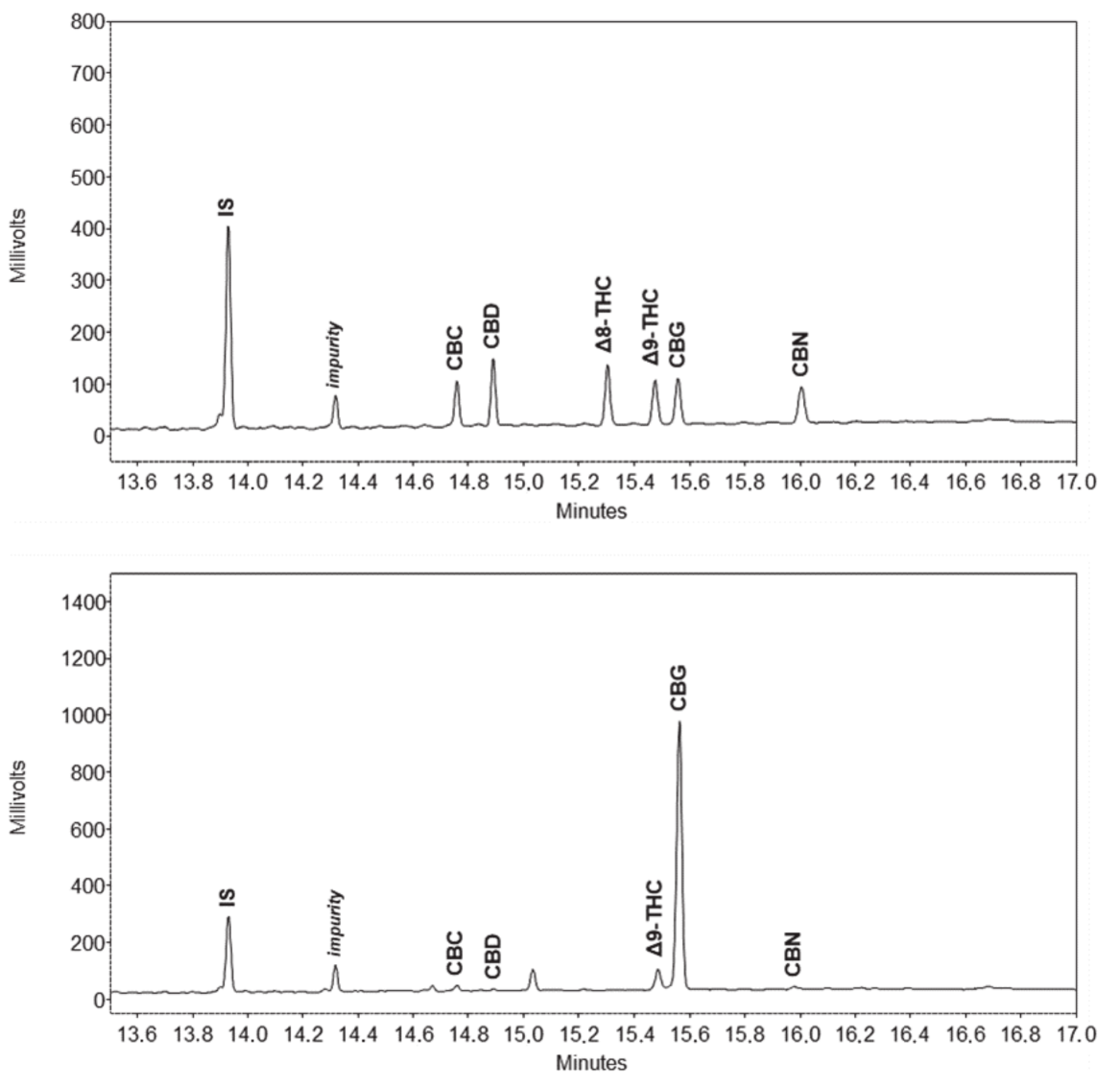

Over the years, a number of methods for detecting cannabinoids and terpenes in Cannabis plant and related samples have been developed, recognizing the base problem of decarboxylation in gas chromatography. Some methods have proved better than others for cannabinoids, though typically differing methods are used for terpenes. However, could a method clearly detect both at the same time? Zekič and Križman demonstrate their gas chromatography method doing just that in this 2020 article published in Molecules. After introducing their problem, the duo present their method, as an attempt "to find appropriate conditions, mainly in terms of sample preparation, for a simultaneous analysis of both groups of compounds, while keeping the overall experimental and instrumental setups simple." After discussing the method details and results, they conclude that their gas chromatography–flame ionization detection (GC-FID) method "provides a robust tool for simultaneous quantitative analysis of these two chemically different groups of analytes."

Posted on January 5, 2021

By LabLynx

Journal articles

In this 2020 paper published in Developments in the Built Environment, Hartmann and Trappey share their years of experience working within the field of advanced engineering informatics, the pairing of "adequate computational tools" with modern engineering work, with the goal of improving the management of and collaboration related to increasingly complex engineering projects. While the duo don't directly address the specifics of such computational tools, they acknowledge engineering work as "knowledge-intensive" and detail the importance of formalizing that knowledge and its representation within various computational systems. They discuss the importance of knowledge representation and formation, as it informs research into advanced engineering informatics, and provide four practical examples provided by other researchers in the field. They then discuss the methodological approaches of implementing those more philosophical aspects towards developing knowledge representations, as well as how they should be verified and validated. They conclude that "knowledge representation is the main research effort that is required to develop technologies that not only automate mundane engineering tasks, but also provide engineers with tools that will allow them to do things they were not able to do before."

Posted on December 29, 2020

By LabLynx

Journal articles

Artificial intelligence (AI) is increasingly making its way into many aspects of health informatics, backed by a vision of improving healthcare outcomes and lowering healthcare costs. However, like many other such technologies, it comes with legal, ethical, and societal questions that deserve further exploration. Amann et al. do just that in this 2020 paper published in BMC Medical Informatics and Decision Making, examining the concept of "explainability," or why the AI came to the conclusion that it did in its task. The authors provide a brief amount of background before then examining AI-based clinical decision support systems (CDSSs) to provide various perspectives on the value of explainability in AI. They examine what explainability is from a technological perspective, then examine the legal, medical, and patient perspectives of explainability's importance. Finally, they examine the ethical implications of explainability using Beauchamp and Childress' Principles of Biomedical Ethics. The authors conclude "that omitting explainability in [CDSSs] poses a threat to core ethical values in medicine and may have detrimental consequences for individual and public health."

Posted on December 22, 2020

By LabLynx

Journal articles

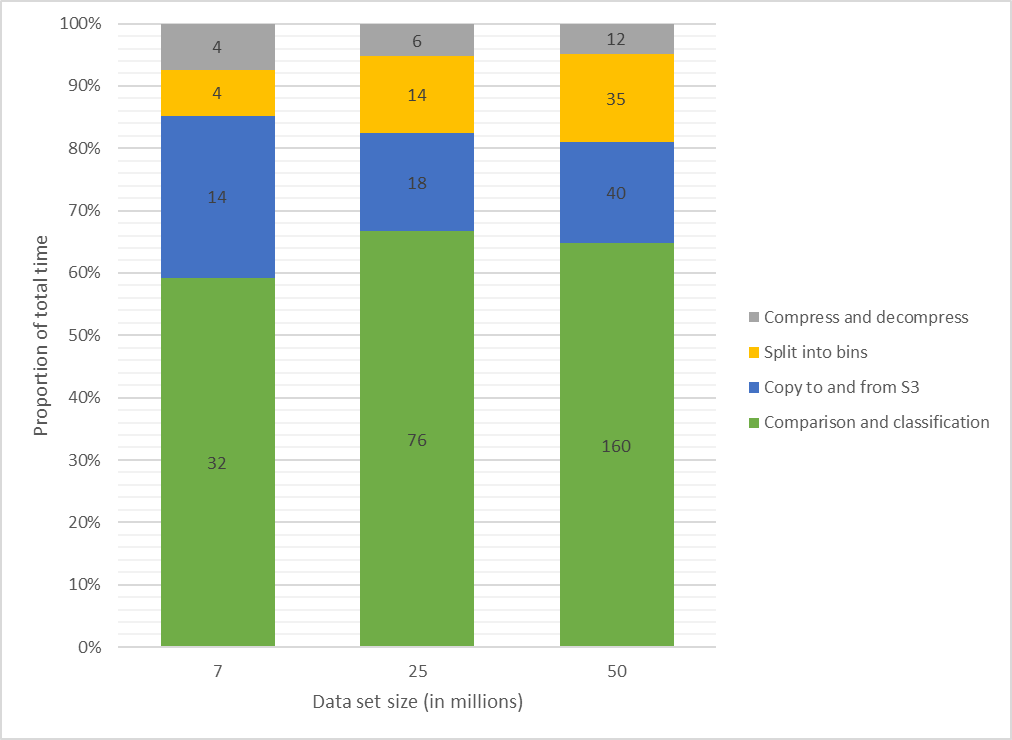

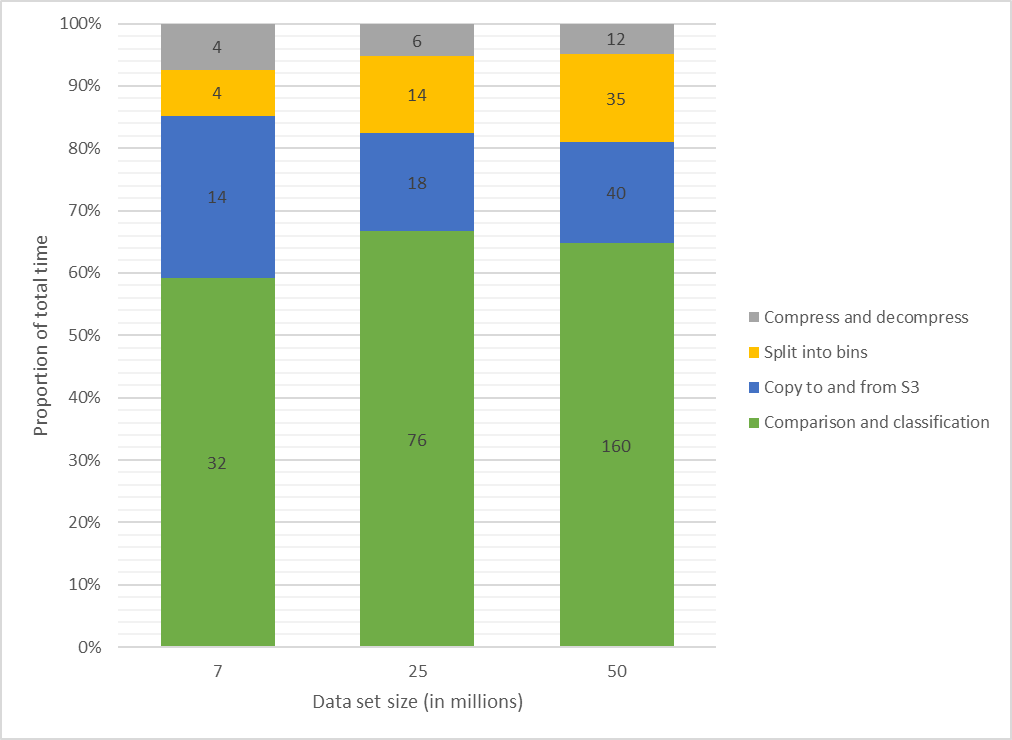

This 2020 paper published in JMIR Medical Informatics examines both the usefulness of linked data (connecting data points across multiple data sets) for investigating health and social issues, and a cloud-based means for enabling linked data. Brown and Randall briefly review the state of cloud computing in general, and in particular with relation to data linking, noting a dearth of research on the practical use of secure, privacy-respecting cloud computing technologies for record linkage. Then the authors describe their own attempt to demonstrate a cloud-based model for record linkage that respects data privacy and integrity requirements, using three synthetically generated data sets of varying sizes and complexities as test data. They discuss their findings and then conclude that through the use of "privacy-preserving record linkage" methods over the cloud, data "privacy is maintained while taking advantage of the considerable scalability offered by cloud solutions," all while having "the ability to process increasingly larger data sets without impacting data release protocols and individual patient privacy" policies.

Posted on December 15, 2020

By LabLynx

Journal articles

In this 2020 paper published in Data Science Journal, Mayernik et al. present their take on assessing risks to digital and physical data collections and archives, such that the appropriate resource allocation and priorities may be set to ensure those collections' and archives' future use. In particular, they present their data risk assessment matrix and its use in three different use cases. Noting that the trust placed in a data repository and how it's run is "separate and distinct" from trust placed in the data itself, the authors lay out the steps for assessing those repositories' risks, and then show how their risk assessment matrix makes that task easier. After presenting their use cases, they conclude that their matrix proves "a lightweight method for data collections to be reviewed, documented, and evaluated against a set of known data risk factors."

Posted on December 8, 2020

By LabLynx

Journal articles

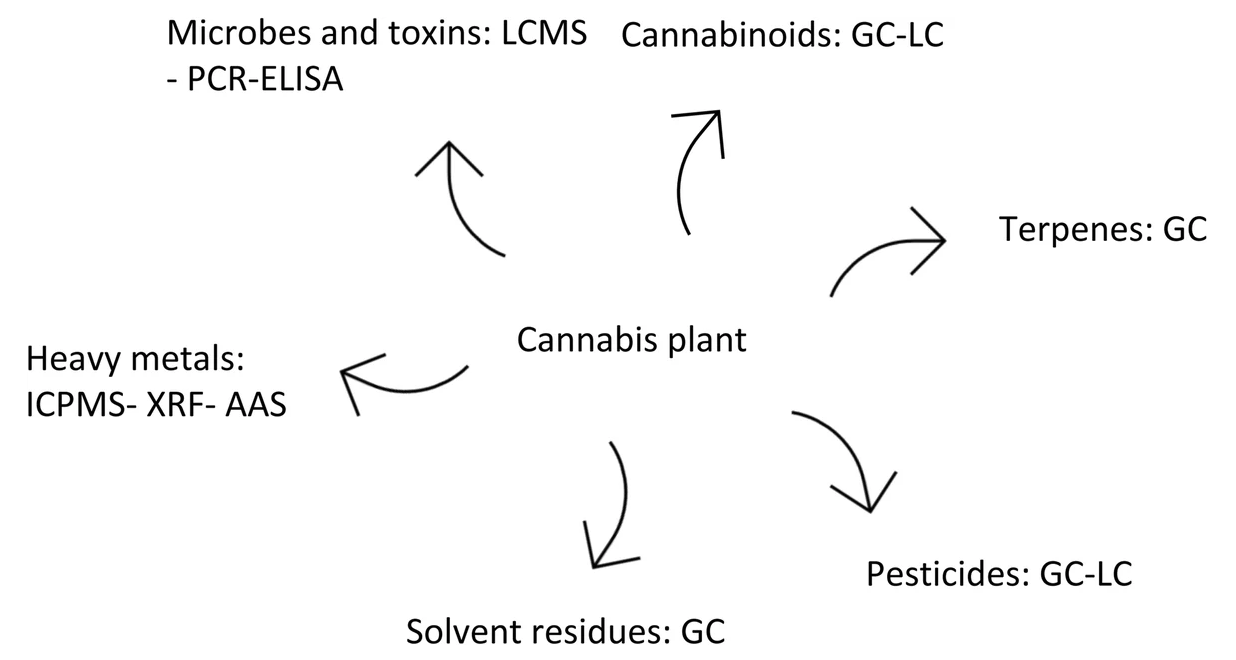

While the push for the decriminalization of cannabis, its constituents, and the products made from them continues in the United States, issues of quality control testing and standardization persist. Consistent and accurate laboratory testing of these products is required to better ensure human health outcomes, to be sure. However, with the legalization of hemp, it's not just products intended for humans that are being created from hemp constituents such as cannabidiol (CBD); products marketed for our pets and other animals are appearing. And with them comes an inconsistent, sometimes dangerous lack of testing controls, or so find Wakshlag et al. in this 2020 paper published in Veterinary Medicine: Research and Reports. The researchers review the regulatory atmosphere (or lack thereof) and then present the results of analytically testing 29 cannabis products marketed for dog use. They found wide-swinging variances in actual cannabinoid and contaminant content, straying often from labeled contents and contaminant standards. They conclude "the range and variability of [cannabis-derived] products in the veterinary market is alarming," and given the current state of regulation and standardization, "veterinary professionals should only consider manufacturers providing product safety data such as COAs, pharmacokinetic data, and clinical application data when clients solicit information regarding product selection."

Posted on December 1, 2020

By LabLynx

Journal articles

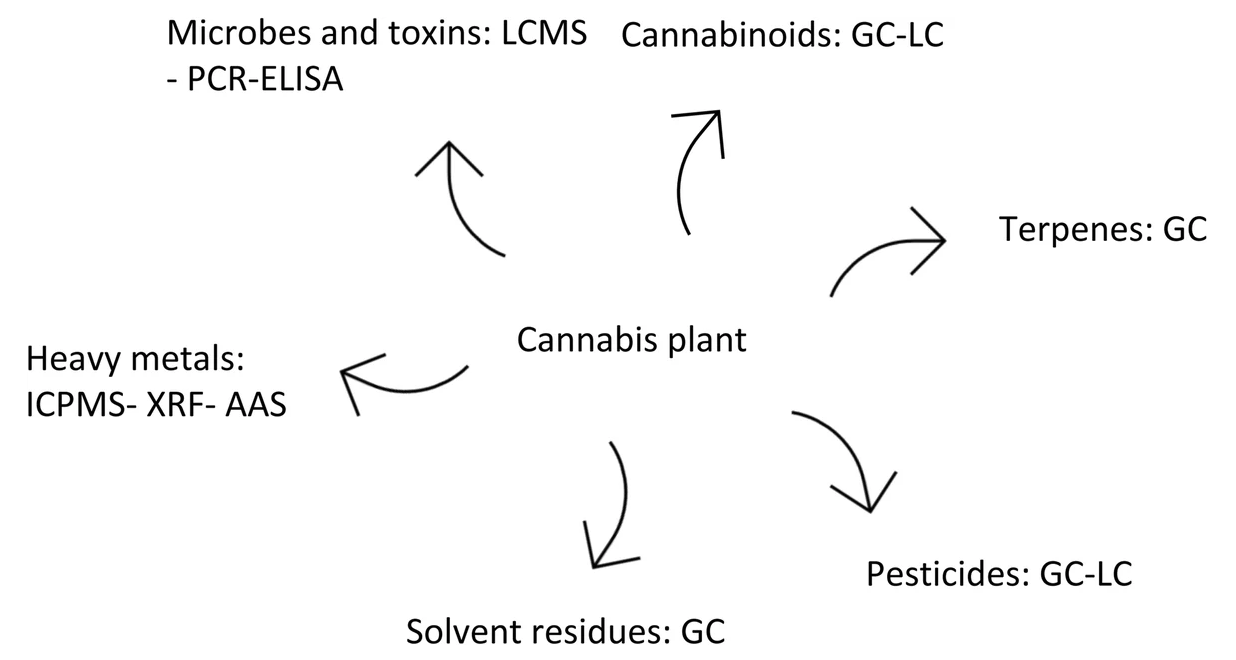

Many papers have been published over the years describing different methods for quantifying the amount of cannabinoids in Cannabis sativa and other phenotypes, as well as the products made from them. Few have taken to the task of reviewing all such methods while weighing their pros and cons. This 2020 paper by Lazarjani et al. makes that effort, landing on approximately 60 relevant papers for their review. From gas chromatography to liquid chromatography, as well as various spectrometry and spectroscopy methods, the authors give a brief review of many methods and their combinations. They conclude that high-performance liquid chromatography-tandem mass spectrometry (HPLC-MS/MS) likely has the most benefits when compared to other methods reviewed in this paper, including the ability to "differentiate between acidic and neutral cannabinoids" and "differentiate between different cannabinoids based on the m/z value of their molecular ion," while having "more specificity when compared to ultraviolet detectors," particularly when dealing with complex matrices.

Posted on November 25, 2020

By LabLynx

Journal articles

In this 2020 paper published in Molecules, Izzo et al. describe one of the first efforts to comprehensively analyze the polyphenol content of the inflorescences of Cannabis sativa L. Noting the rise of polyphenol-containing products due to polyphenols' reported health benefits, the researchers found a dearth of research analyzing them in the flowers of Cannabis sativa L. The authors describe their use of ultra high-performance liquid chromatography–quadrupole–orbitrap high-resolution mass spectrometry, as well as other spectrophotometric methods, to identify and quantify the polyphenols from four major cultivars in Italy. Of note was the Selected Carmagnola (CS) cultivar, which reliably contained the highest amount of many of the discovered polyphenols. They conclude that their results highlight the need for further research into the inflorescences of Cannabis sativa L. cultivars for polyphenols "to estimate their efficacy for future applications for nutraceutical purposes."

Posted on November 17, 2020

By LabLynx

Journal articles

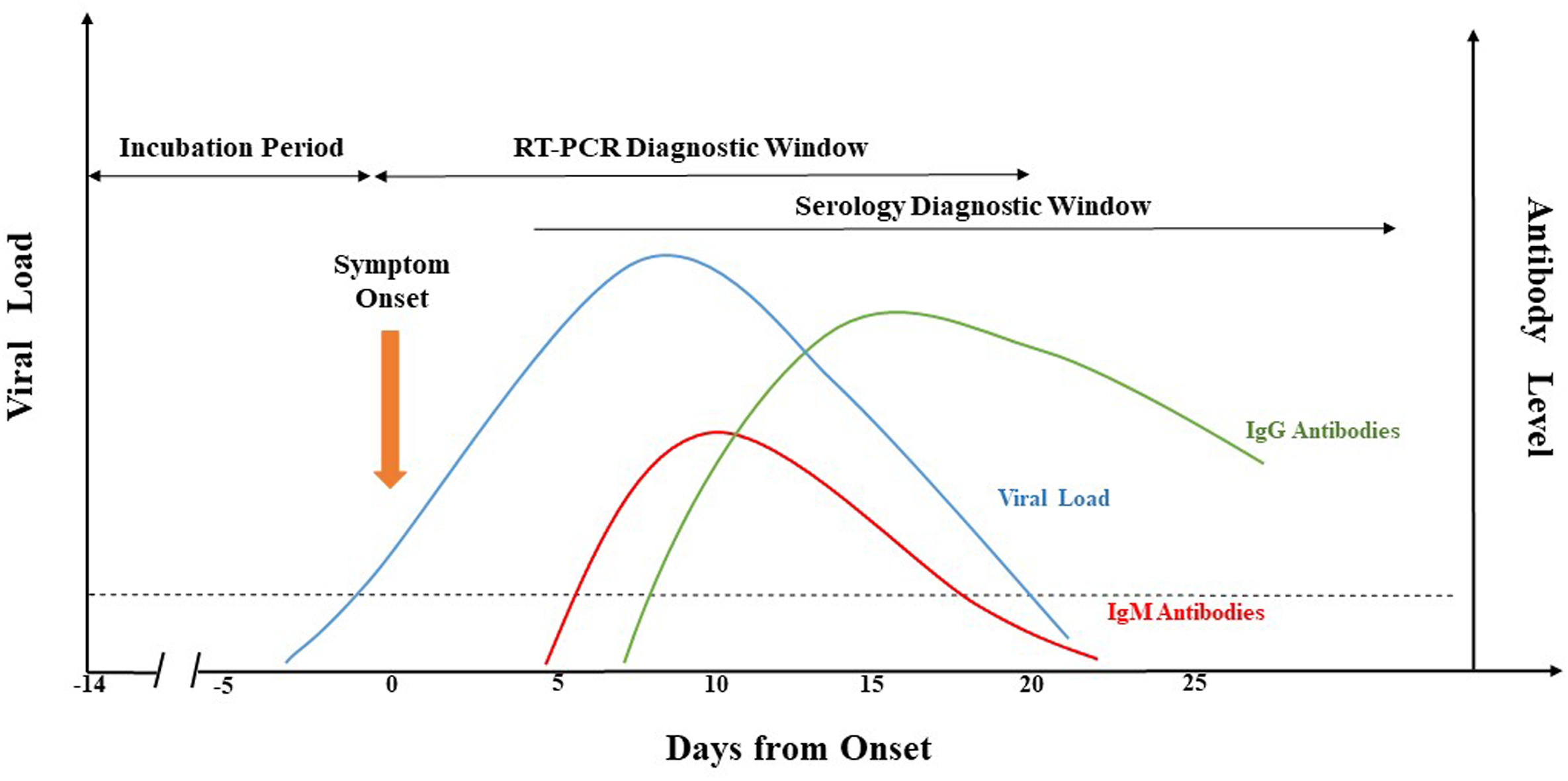

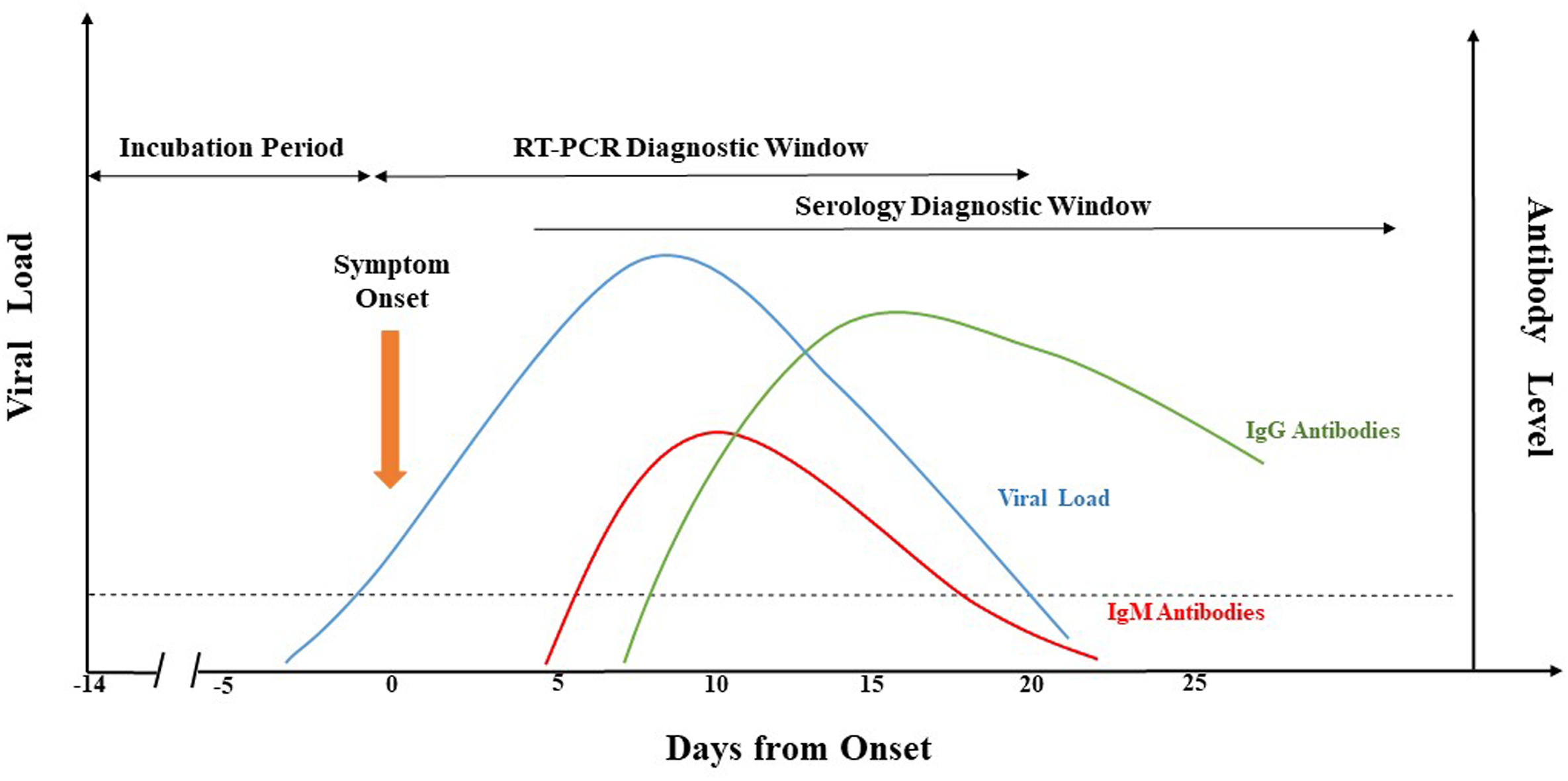

What is the current state of laboratory testing methods for SARS-CoV-2, the virus that causes COVID-19? This perspective paper from China (submitted for approval in September 2020), published in Journal of Microbiology, Immunology and Infection, analyzes and describes the latest with how laboratories in China approach testing for the virus in the human population. Jing et al. first introduce background about the pandemic, as well as the etiological characteristics and genome organization of SARS-CoV-2. Then they dive into the common molecular methods used, including qRT-PCR, LAMP, CRISPR, genome sequencing, and nucleic acid mass spectrometry. The authors then analyze the various challenges with keeping such testing consistent, categorically addressing seven areas where false-negative results arise. They close with the benefits (and drawbacks) of supplemental serological testing, before concluding "more comprehensive analysis and/or further evaluation of different diagnostic methods" is still required to improve identification rates.

Posted on November 10, 2020

By LabLynx

Journal articles

In this 2020 article published in Practical Laboratory Medicine, Angela Fung of St. Paul’s Hospital and the University of British Columbia reviews how point-of-care testing (POCT) in healthcare organizations can be improved with informatics tools, as well as how those tools aid those organizations in maintaining regulatory compliance. After a brief introduction to the concept of POCT, Fung discusses not only how electronic medical record (EMR) systems and POCT devices go hand in hand, but also how interfacing to other systems such as laboratory information systems (LIS) and hospital information systems (HIS) reduces documentation and data entry errors. She also discusses at length the important features required of any data management system (DMS) used in combination with POCT devices, as well as the resources required to support those DMSs. Fung ends with the personnel side of POCT, and how such POCT programs could be better managed under the context of DMSs. The conclusion? "Connectivity and DMS are essential tools in improving the accessibility and ability to manage POCT programs efficiently," and "effective management of POCT programs ultimately relies on building relationships, collaborations, and partnerships" among stakeholders.

Posted on November 2, 2020

By LabLynx

Journal articles

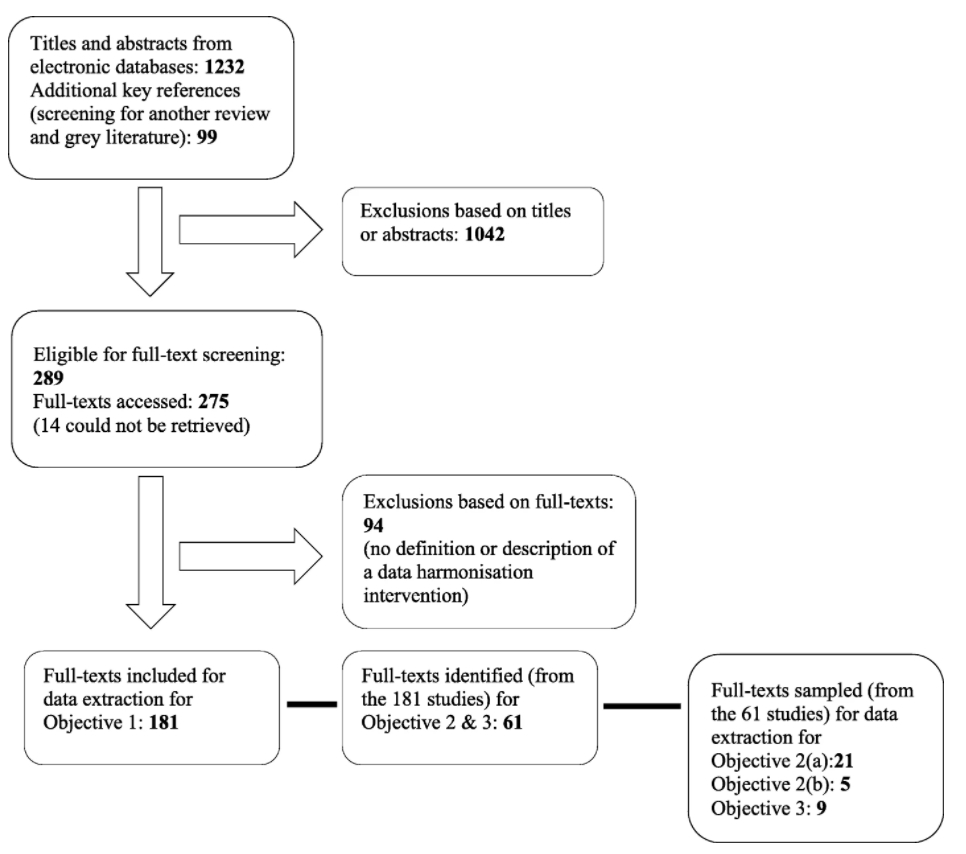

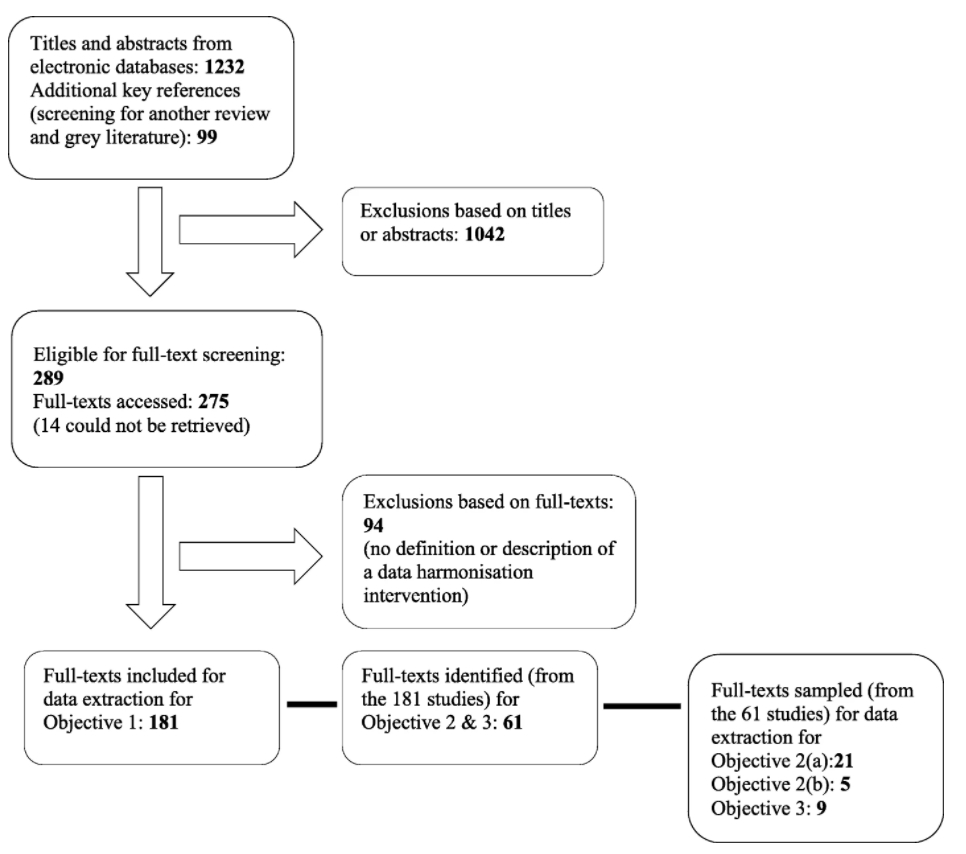

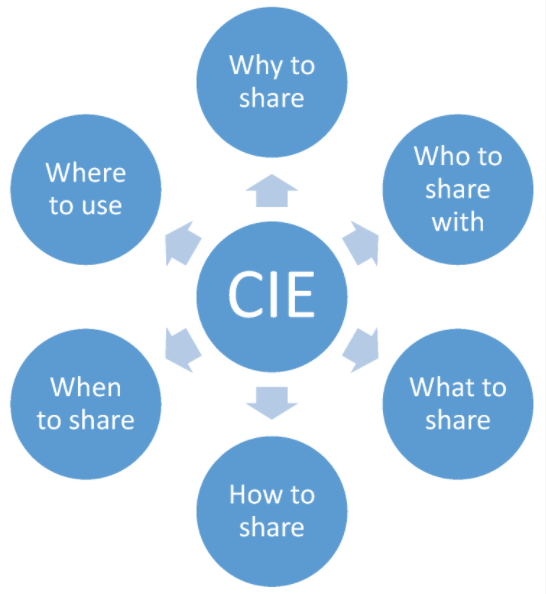

In this 2020 review article published in BMC Medical Informatics and Decision Making, Schmidt et al. detail the state of healthcare data harmonization (DH) literature, analyze commonalities among various DH terms, and determine "the causal relationship between DH and health management decision-making." Noting the value of organizing and integrating healthcare data in order to strengthen many aspects of how the healthcare system runs, the authors lay out the methodology and results of their scoping review of the topic. The group had three primary objectives: identifying the key components and processes of healthcare DH, synthesizing the various related definitions of DH, and documenting relationships between DH interventions and healthcare management decision-making. They conclude that "health information exchange" is the most commonly used term among seven key terms, and that there are nine vital characteristics to making DH work well. They also add that DH, when conducted well, positively contributes to clinical, operational, and population surveillance decision-making in healthcare settings.

Posted on October 27, 2020

By LabLynx

Journal articles

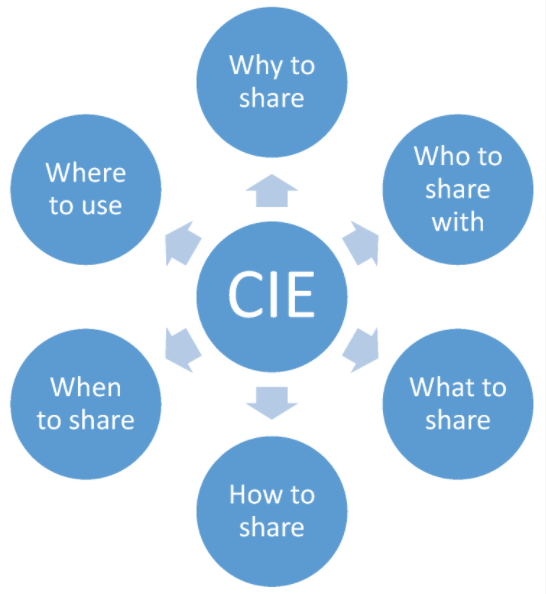

Cybersecurity and how it's handled by an organization has many facets, from examining existing systems, setting organizational goals, and investing in training, to probing for weaknesses, monitoring access, and containing breaches. An additional and vital element an organization must consider is how to conduct "effective and interoperable cybersecurity information sharing." As Rantos et al. note, while the adoption of standards, best practices, and policies proves useful to incorporating shared cybersecurity threat information, "a holistic approach to the interoperability problem" of sharing cyber threat information and intelligence (CTII) is required. The authors lay out their case for this approach in their 2020 paper published in Computers, concluding that their method effectively "addresses all those factors that can affect the exchange of cybersecurity information among stakeholders."

This 2021 paper published in Data Science Journal explains Kadi4Mat, a combination electronic laboratory notebook (ELN) and data repository for better managing research and analyses in materials sciences. Noting the difficulty of extracting and using data obtained from experiments and simulations in the materials sciences, as well as the increasing levels of complexity in those experiments and simulations, Brandt et al. sought to develop a comprehensive informatics solution capable of handling structured data storage and exchange in combination with documented and reproducible data analysis and visualization. Noting a wealth of open-source components for such an informatics project, the team provides a conceptual overview of the system and then dig into the details of its development using various open-source components. They then provide screenshots of records and data (and metadata) management, as well as workflow management. The authors conclude their solution helps manage "heterogeneous use cases of materials science disciplines," while being extensible enough to extend "the research data infrastructure to other disciplines" in the future.

This 2021 paper published in Data Science Journal explains Kadi4Mat, a combination electronic laboratory notebook (ELN) and data repository for better managing research and analyses in materials sciences. Noting the difficulty of extracting and using data obtained from experiments and simulations in the materials sciences, as well as the increasing levels of complexity in those experiments and simulations, Brandt et al. sought to develop a comprehensive informatics solution capable of handling structured data storage and exchange in combination with documented and reproducible data analysis and visualization. Noting a wealth of open-source components for such an informatics project, the team provides a conceptual overview of the system and then dig into the details of its development using various open-source components. They then provide screenshots of records and data (and metadata) management, as well as workflow management. The authors conclude their solution helps manage "heterogeneous use cases of materials science disciplines," while being extensible enough to extend "the research data infrastructure to other disciplines" in the future.

This guide by laboratory automation veteran Joe Liscouski takes an updated look at the state of implementing laboratory automation, with a strong emphasis on the importance of planning and management as early as possible. It also addresses the need for laboratory systems engineers (LSEs)—an update from laboratory automation engineers (LAEs)—and the institutional push required to develop educational opportunities for those LSEs. After looking at labs then and now, the author examines the transition to more automated labs, followed by seven detailed goals required for planning and managing that transition. He then closes by discussing LSEs and their education, concluding finally that "laboratory technology challenges can be addressed through planning activities using a series of goals," and that the lab of the future will implement those goals as early as possible, with the help of well-qualified LSEs fluent in both laboratory science and IT.

This guide by laboratory automation veteran Joe Liscouski takes an updated look at the state of implementing laboratory automation, with a strong emphasis on the importance of planning and management as early as possible. It also addresses the need for laboratory systems engineers (LSEs)—an update from laboratory automation engineers (LAEs)—and the institutional push required to develop educational opportunities for those LSEs. After looking at labs then and now, the author examines the transition to more automated labs, followed by seven detailed goals required for planning and managing that transition. He then closes by discussing LSEs and their education, concluding finally that "laboratory technology challenges can be addressed through planning activities using a series of goals," and that the lab of the future will implement those goals as early as possible, with the help of well-qualified LSEs fluent in both laboratory science and IT.

This white paper by laboratory automation veteran Joe Liscouski examines what it takes to fully realize a fully integrated laboratory informatics infrastructure, a strong pivot for many labs. He begins by discussing the worries and concerns of stakeholders about automation job losses, how AI and machine learning can play a role, and what equipment may be used, He then goes into lengthy discussion about how to specifically plan for automation, and at what level (no, partial, or full automation), as well as what it might cost. The author wraps up with a brief look at the steps required for actual project management. The conclusion? "Just as the manufacturing industries transitioned from cottage industries to production lines and then to integrated production-information systems, the execution of laboratory science has to tread a similar path if the demands for laboratory results are going to be met in a financially responsible manner." This makes "laboratory automation engineering" and planning a critical component of laboratories from the start.

This white paper by laboratory automation veteran Joe Liscouski examines what it takes to fully realize a fully integrated laboratory informatics infrastructure, a strong pivot for many labs. He begins by discussing the worries and concerns of stakeholders about automation job losses, how AI and machine learning can play a role, and what equipment may be used, He then goes into lengthy discussion about how to specifically plan for automation, and at what level (no, partial, or full automation), as well as what it might cost. The author wraps up with a brief look at the steps required for actual project management. The conclusion? "Just as the manufacturing industries transitioned from cottage industries to production lines and then to integrated production-information systems, the execution of laboratory science has to tread a similar path if the demands for laboratory results are going to be met in a financially responsible manner." This makes "laboratory automation engineering" and planning a critical component of laboratories from the start.

This companion piece to last week's article on laboratory turnaround time (TAT), sees Cassim et al. take their experience developing and implementing a system for tracking TAT in a high-volume laboratory and assesses the actual impact such a system has. Using a retrospective study design and root cause analyses, the group looked at TAT outcomes over 122 weeks from a busy clinical pathology laboratory in a hospital in South Africa. After describing their methodology and results, the authors discussed the nuances of their results, including significant lessons learned. They conclude that not only is monitoring TAT to ensure timely reporting of results important, but also "vertical audits" of the results help identify bottlenecks for correction. They also emphasize "the importance of documenting and following through on corrective actions" associated with both those audits and the related quality management system in place at the lab.

In this 2020 article published in the African Journal of Laboratory Medicine, Cassim et al. describe their experience developing and implementing a system for tracking turnaround time (TAT) in a high-volume laboratory. Seeking to have weekly reports of turnaround time—to better react to changes and improve policy—the authors developed a dashboard-based system for the South African National Health Laboratory Service and tested it in one of their higher-volume labs to assess the TAT monitoring system's performance. They conclude that while their dashboard "enables presentation of weekly TAT data to relevant business and laboratory managers, as part of the overall quality management portfolio of the organization," the actual act of "providing tools to assess TAT performance does not in itself imply corrective action or improvement." They emphasize that training on the system, as well as system performance measurements, gradual quality improvements, and the encouragement of a leadership-promoted business culture that supports the use of such data, are all required to ensure the success of such a tool.

Over the years, a number of methods for detecting cannabinoids and terpenes in Cannabis plant and related samples have been developed, recognizing the base problem of decarboxylation in gas chromatography. Some methods have proved better than others for cannabinoids, though typically differing methods are used for terpenes. However, could a single method clearly detect both at the same time? Zekič and Križman demonstrate their gas chromatography method doing just that in this 2020 article published in Molecules. After introducing their problem, the duo present their method as an attempt "to find appropriate conditions, mainly in terms of sample preparation, for a simultaneous analysis of both groups of compounds, while keeping the overall experimental and instrumental setups simple." After discussing the method details and results, they conclude that their gas chromatography–flame ionization detection (GC-FID) method "provides a robust tool for simultaneous quantitative analysis of these two chemically different groups of analytes."

This 2020 paper published in JMIR Medical Informatics examines both the usefulness of linked data (connecting data points across multiple data sets) for investigating health and social issues, and a cloud-based means for enabling linked data. Brown and Randall briefly review the state of cloud computing in general, and in particular with relation to data linking, noting a dearth of research on the practical use of secure, privacy-respecting cloud computing technologies for record linkage. Then the authors describe their own attempt to demonstrate a cloud-based model for record linkage that respects data privacy and integrity requirements, using three synthetically generated data sets of varying sizes and complexities as test data. They discuss their findings and then conclude that through the use of "privacy-preserving record linkage" methods over the cloud, data "privacy is maintained while taking advantage of the considerable scalability offered by cloud solutions," all while having "the ability to process increasingly larger data sets without impacting data release protocols and individual patient privacy" policies.

Many papers have been published over the years describing different methods for quantifying the amount of cannabinoids in Cannabis sativa and other phenotypes, as well as the products made from them. Few have taken to the task of reviewing all such methods while weighing their pros and cons. This 2020 paper by Lazarjani et al. makes that effort, landing on approximately 60 relevant papers for their review. From gas chromatography to liquid chromatography, as well as various spectrometry and spectroscopy methods, the authors give a brief review of many methods and their combinations. They conclude that high-performance liquid chromatography-tandem mass spectrometry (HPLC-MS/MS) likely has the most benefits when compared to other methods reviewed in this paper, including the ability to "differentiate between acidic and neutral cannabinoids" and "differentiate between different cannabinoids based on the m/z value of their molecular ion," while having "more specificity when compared to ultraviolet detectors," particularly when dealing with complex matrices.

What is the current state of laboratory testing methods for SARS-CoV-2, the virus that causes COVID-19? This perspective paper from China (submitted for approval in September 2020), published in the Journal of Microbiology, Immunology and Infection, analyzes and describes how laboratories in China currently approach testing for the virus in the human population. Jing et al. first introduce background about the pandemic, as well as the etiological characteristics and genome organization of SARS-CoV-2. Then they dive into the common molecular methods used, including qRT-PCR, LAMP, CRISPR, genome sequencing, and nucleic acid mass spectrometry. The authors then analyze the various challenges with keeping such testing consistent, categorically addressing seven areas where false-negative results arise. They close with the benefits (and drawbacks) of supplemental serological testing, before concluding that "more comprehensive analysis and/or further evaluation of different diagnostic methods" is still required to improve identification rates.

In this 2020 review article published in BMC Medical Informatics and Decision Making, Schmidt et al. detail the state of healthcare data harmonization (DH) literature, analyze commonalities among various DH terms, and determine "the causal relationship between DH and health management decision-making." Noting the value of organizing and integrating healthcare data in order to strengthen many aspects of how the healthcare system runs, the authors lay out the methodology and results of their scoping review of the topic. The group had three primary objectives: identifying the key components and processes of healthcare DH, synthesizing the various related definitions of DH, and documenting relationships between DH interventions and healthcare management decision-making. They conclude that "health information exchange" is the most commonly used term among seven key terms, and that there are nine vital characteristics to making DH work well. They also add that DH, when conducted well, positively contributes to clinical, operational, and population surveillance decision-making in healthcare settings.

How an organization handles cybersecurity has many facets, from examining existing systems, setting organizational goals, and investing in training, to probing for weaknesses, monitoring access, and containing breaches. An additional and vital element an organization must consider is how to conduct "effective and interoperable cybersecurity information sharing." As Rantos et al. note, while the adoption of standards, best practices, and policies proves useful to incorporating shared cybersecurity threat information, "a holistic approach to the interoperability problem" of sharing cyber threat information and intelligence (CTII) is required. The authors lay out their case for this approach in their 2020 paper published in Computers, concluding that their method effectively "addresses all those factors that can affect the exchange of cybersecurity information among stakeholders."