Posted on October 27, 2020

By LabLynx

Journal articles

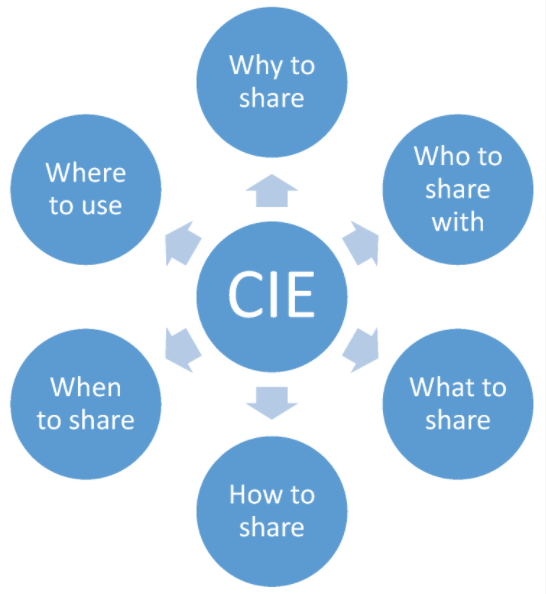

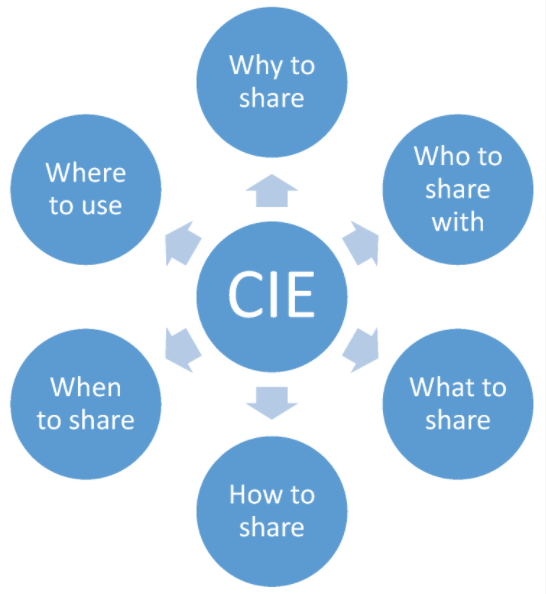

Cybersecurity, and how an organization handles it, has many facets, from examining existing systems, setting organizational goals, and investing in training to probing for weaknesses, monitoring access, and containing breaches. An additional and vital element an organization must consider is how to conduct "effective and interoperable cybersecurity information sharing." As Rantos et al. note, while the adoption of standards, best practices, and policies proves useful for incorporating shared cybersecurity threat information, "a holistic approach to the interoperability problem" of sharing cyber threat information and intelligence (CTII) is required. The authors lay out their case for this approach in their 2020 paper published in Computers, concluding that their method effectively "addresses all those factors that can affect the exchange of cybersecurity information among stakeholders."

Posted on October 19, 2020

By LabLynx

Journal articles

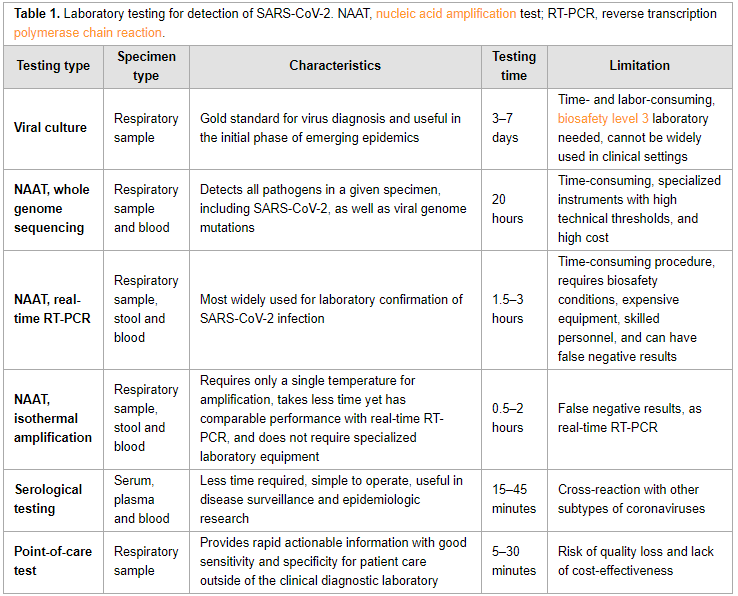

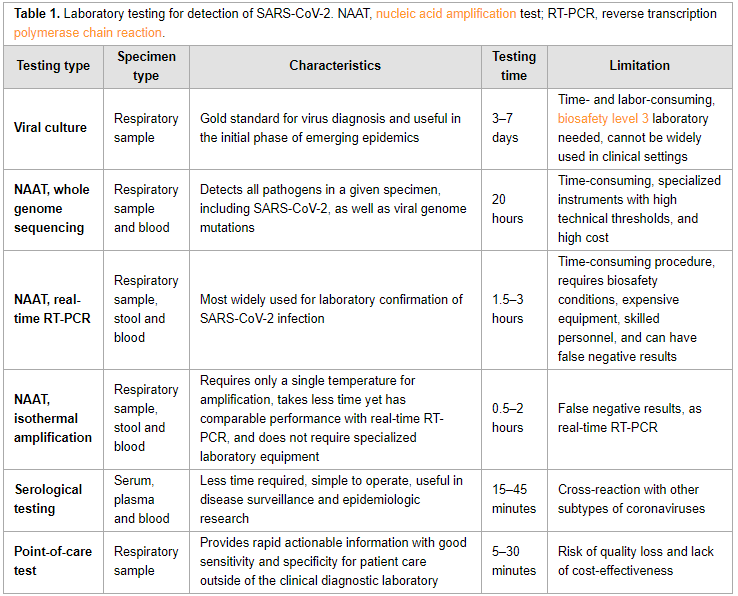

In this "short communication" from the International Journal of Infectious Diseases, Xu et al. provide a succinct review of the laboratory testing technologies currently available for confirming COVID-19 infection in patients. The authors review viral culture, whole genome sequencing, real-time RT-PCR, isothermal amplification, and serological testing, as well as point-of-care tests based on those methods. They also discuss which sample types should be collected and at what stage of infection each is most effective. They close by noting that choosing "a diagnostic assay for COVID-19 should take the characteristics and advantages of various technologies, as well as different clinical scenarios and requirements, into full consideration."

Posted on October 12, 2020

By LabLynx

Journal articles

The quality of data used not only in research centers but also in clinical and environmental testing laboratories of all kinds is of great importance. For experimental researchers, high-quality data makes it more likely that others can reproduce their results. For testing labs, it provides greater confidence in the accuracy of results. However, ensuring quality data takes more than implementing a few good data practices. Stefano Canali, a researcher at Leibniz University Hannover, contends that more must be done, including changing how we conceptualize what "high-quality data" actually entails. In his 2020 essay, published in the journal Data, Canali argues for a context-based approach to understanding data quality, "whereby quality should be seen as a result of the context where a dataset is used, and not only of the intrinsic features of the data." After reviewing three philosophical areas of research into data quality, Canali lays out his plan for a more contextual approach to data quality and discusses three practical cases that demonstrate the value of such an approach. He concludes that despite the value of older approaches in some cases, "discussions of quality should also take into account specific contexts and be flexible in connection to these contexts, as opposed to setting up categorizations and hierarchies that are intended be applied to all and any contexts of research practices."

Posted on October 6, 2020

By LabLynx

Journal articles

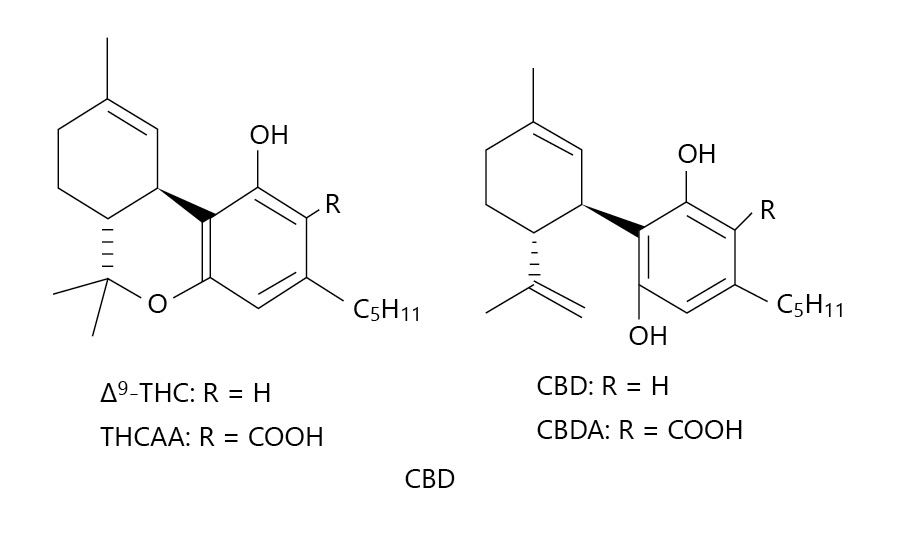

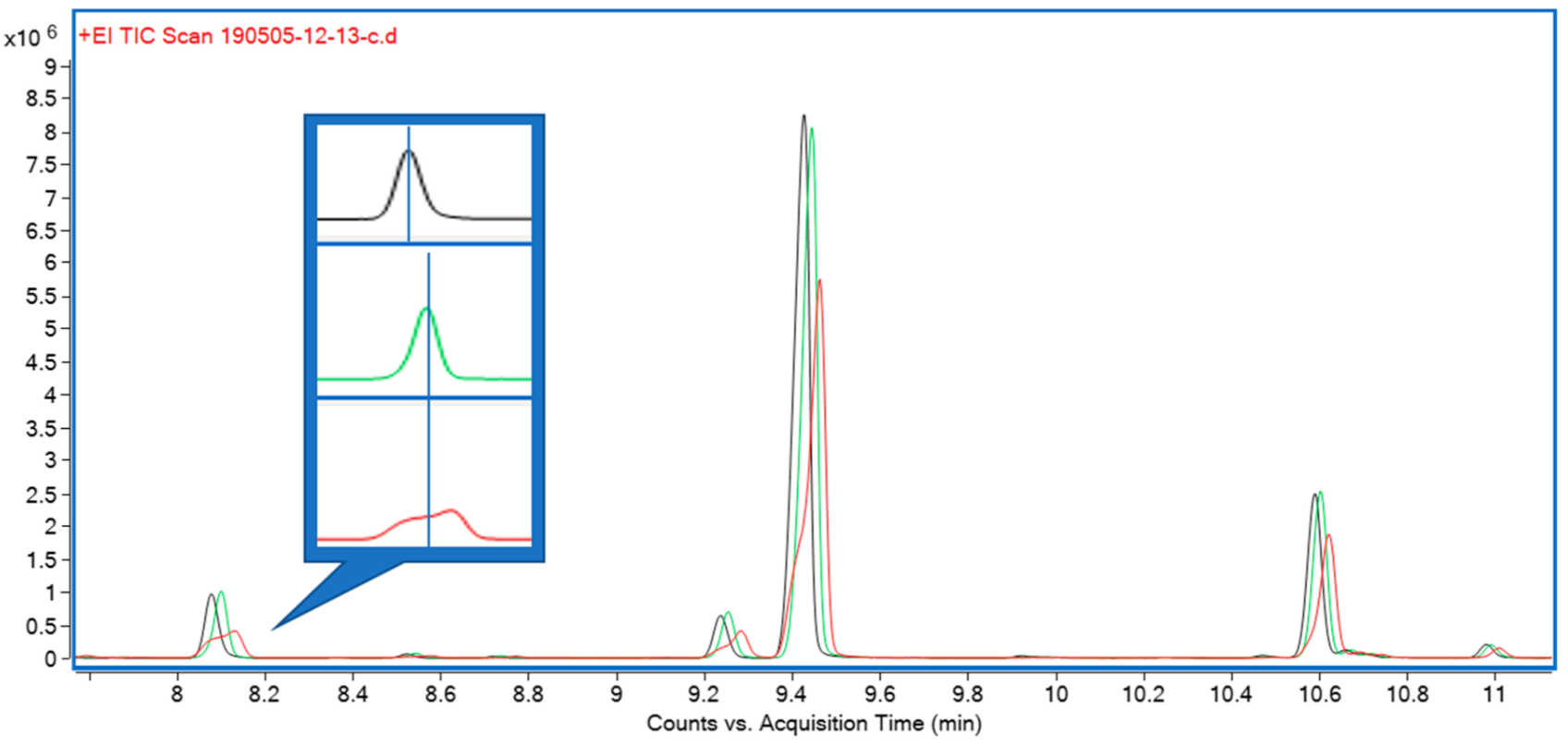

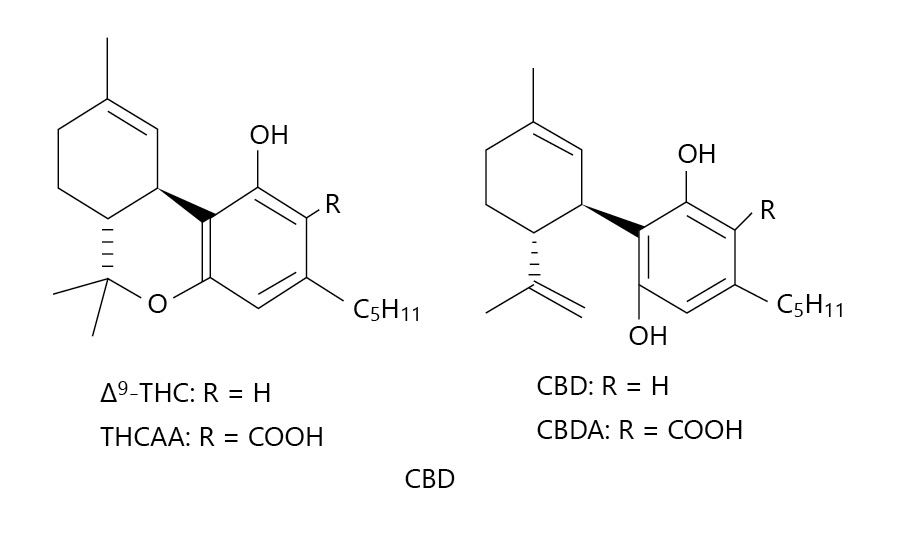

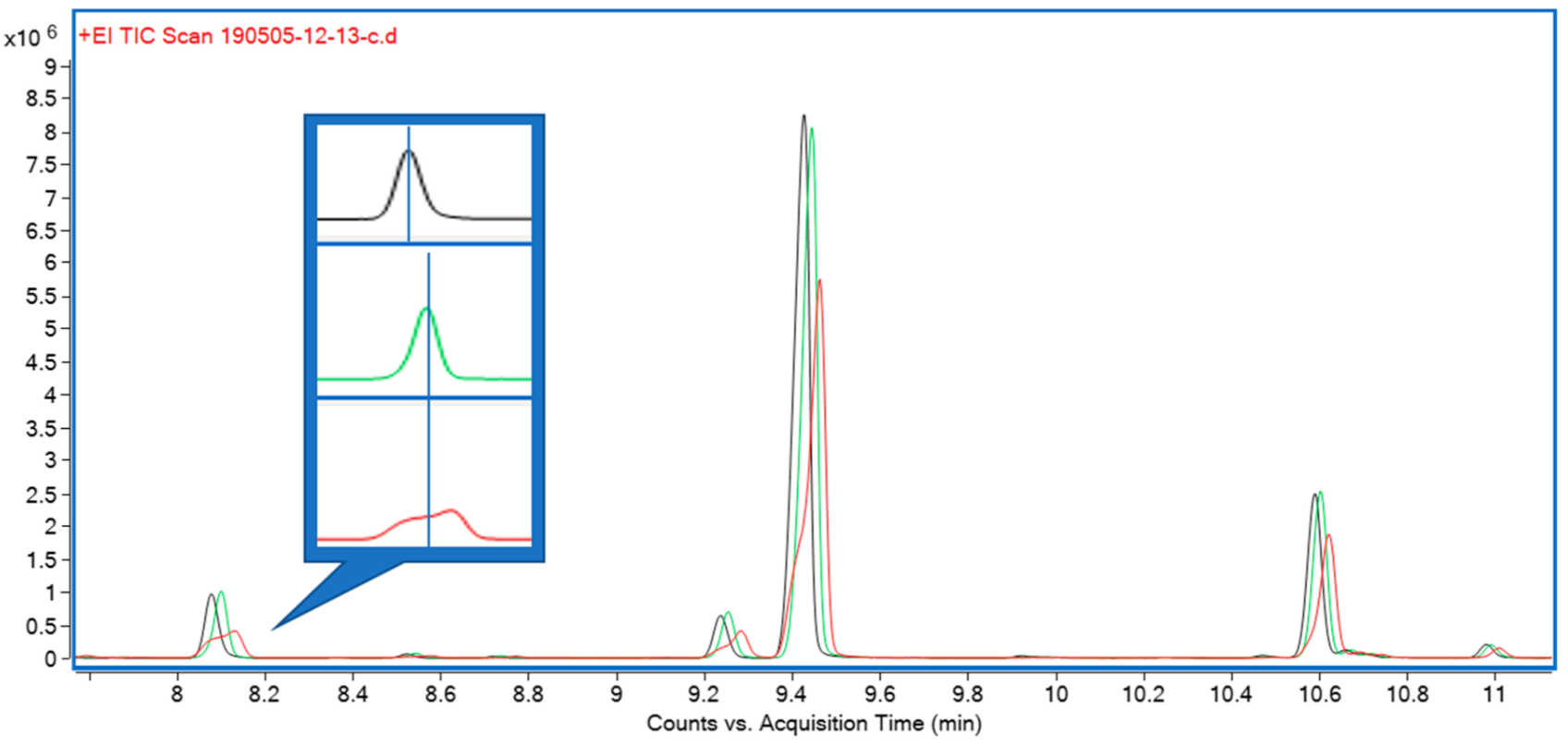

In this 2020 paper published in Medical Cannabis and Cannabinoids, ElSohly et al. present the results of an effort to demonstrate a relatively basic gas chromatography–mass spectrometry (GC-MS) method for accurately measuring the cannabidiol, tetrahydrocannabinol, cannabidiolic acid, and tetrahydrocannabinolic acid content of CBD oil and hemp oil products. Noting the problem of inaccurate labels and the sudden proliferation of CBD products for sale, the authors emphasize the importance of a precise and reproducible method for verifying those products' claimed concentrations of cannabinoids and their acid precursors. Based on their results, they conclude that their validated method achieves that goal.
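Because the acid precursors (CBDA, THCA) convert to CBD and THC on decarboxylation, label claims are often expressed as a "total" cannabinoid value. The following is a minimal sketch of that common conversion, assuming complete decarboxylation. It is not the GC-MS quantification procedure of ElSohly et al. The molecular weights are standard values computed from the molecular formulas (CBD and CBDA are isomers of THC and THCA, respectively, so the same factor applies), and the function name is hypothetical.

```python
# Molecular weights in g/mol, from the formulas C21H30O2 (THC/CBD)
# and C22H30O4 (THCA/CBDA).
MW_NEUTRAL = 314.47
MW_ACID = 358.48

# Decarboxylation removes CO2, so each unit mass of acid yields
# MW_NEUTRAL / MW_ACID units of neutral cannabinoid (about 0.877).
DECARB_FACTOR = MW_NEUTRAL / MW_ACID


def total_cannabinoid(neutral: float, acid: float) -> float:
    """Hypothetical helper: neutral form plus acid form converted to
    neutral-equivalent mass. Inputs and output share the same units
    (e.g., % w/w or mg/g). Assumes complete decarboxylation."""
    return neutral + acid * DECARB_FACTOR


# Example: 0.5% CBD and 10.0% CBDA give about 9.27% "total CBD".
total_cbd = total_cannabinoid(0.5, 10.0)
```

The factor only adjusts for mass lost as CO2. It says nothing about how accurately either form was measured, which is the problem the paper addresses.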

Posted on October 1, 2020

By LabLynx

Journal articles

A growing trend in academic research is adherence to the FAIR principles, which hold that research data should be findable, accessible, interoperable, and reusable. In theory, these principles support the important goal of reproducibility. But what about the software used to generate the data? That software is often a home-grown solution, and the software and its developers are rarely cited in academic research, which does not help reproducibility. As such, researchers such as Davenport et al. have written about improving the reproducibility of research outputs through good software development and citation practices. In their 2020 paper published in Data Science Journal, they present a brief essay on the topic, offering background and suggestions to researchers on how to improve research software development, use, and citation. They conclude that "[e]ncouraging the use of modern methods and professional training will improve the quality of research software" and, by extension, the reproducibility of research results themselves.

Posted on September 21, 2020

By LabLynx

Journal articles

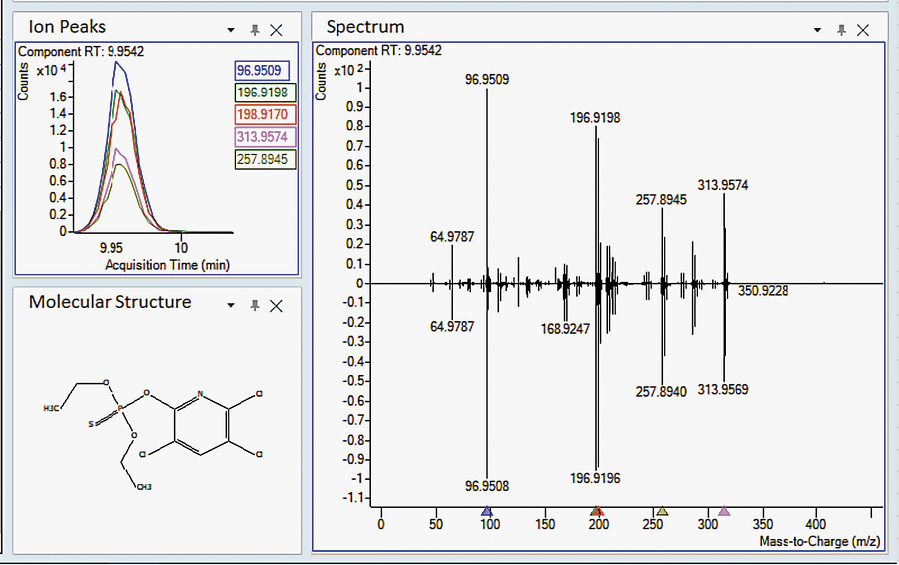

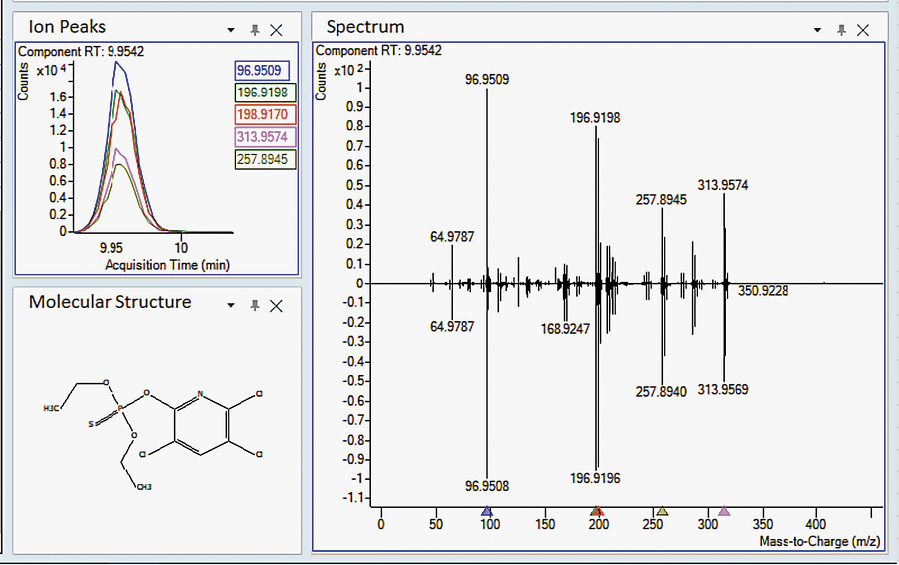

Sure, there are laboratory methods for looking for a small number of specific contaminants in cannabis substrates (target screening), but what about more than a thousand at one time (suspect screening)? In this 2020 paper published in Medical Cannabis and Cannabinoids, Wylie et al. demonstrate a method for screening cannabis extracts for more than 1,000 pesticides, herbicides, fungicides, and other pollutants using gas chromatography paired with a high-resolution accurate-mass quadrupole time-of-flight mass spectrometer (GC/Q-TOF), in conjunction with several databases. They note that while some governmental bodies mandate testing cannabis products for a specific subset of contaminants, some cultivators may still use unapproved pesticides and other substances that aren't officially tested for, putting medical and recreational cannabis users alike at risk. As a proof of concept, the authors describe the materials, methods, and results of their suspect screening, showing how ever-improving mass spectrometry methods can find hundreds of pollutants at one time. Rather than make specific claims about the method, the authors largely let the results of testing confiscated cannabis samples speak to its viability.
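At its core, accurate-mass suspect screening compares each measured mass against a database of candidate compounds within a tight tolerance, usually expressed in parts per million (ppm). The following is a minimal sketch of only that matching step. The library entries and masses are hypothetical placeholders, and the 5 ppm tolerance is an assumption. Real workflows such as the one Wylie et al. describe also use retention time, isotope patterns, and fragment ions to confirm a hit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LibraryEntry:
    name: str
    exact_mass: float  # theoretical m/z of the ion being searched for


def ppm_error(measured: float, theoretical: float) -> float:
    """Signed mass error in parts per million."""
    return (measured - theoretical) / theoretical * 1e6


def suspect_matches(measured_mz, library, tolerance_ppm=5.0):
    """Return (measured m/z, candidate name, ppm error) for every
    library entry that falls within the tolerance of a measured m/z."""
    hits = []
    for mz in measured_mz:
        for entry in library:
            err = ppm_error(mz, entry.exact_mass)
            if abs(err) <= tolerance_ppm:
                hits.append((mz, entry.name, round(err, 2)))
    return hits


# Hypothetical library and measured features, for illustration only.
library = [LibraryEntry("compound_A", 200.0000), LibraryEntry("compound_B", 350.1234)]
hits = suspect_matches([200.0005, 350.2000], library)
# 200.0005 is 2.5 ppm from compound_A; 350.2000 is far outside 5 ppm of compound_B.
```

Because the tolerance scales with mass, a fixed ppm window stays equally selective across the mass range. This is one reason high-resolution instruments make screening for over a thousand suspects at once practical.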

Posted on September 16, 2020

By LabLynx

Journal articles

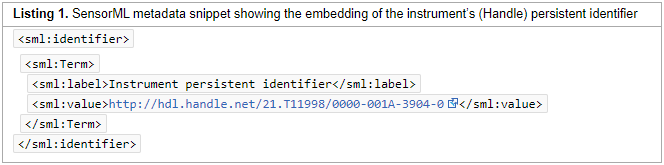

In this 2020 paper published in

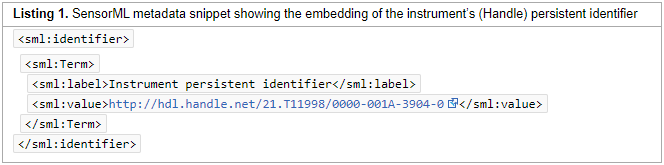

Data Science Journal, Stocker et al. present their initial attempts at generating a schema for persistently identifying scientific measuring instruments, much in the same way journal articles and data sets can be persistently identified using digital object identifiers (DOIs). They argue that "a persistent identifier for instruments would enable research data to be persistently associated with" vital metadata associated with instruments used to produce research data, "helping to set data into context." As such, they demonstrate the development and implementation of a schema to address the managements of instruments and the data they produce. After discussing their methodology, results, those results' interpretation, and adopted uses of the schema, the authors conclude by declaring the "practical viability" of the schema "for citation, cross-linking, and retrieval purposes," and promoting the schema's future development and adoption as a necessary task,
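To make the idea concrete, here is a minimal sketch of an instrument metadata record keyed by a persistent identifier, along with a cross-link from a dataset to the instrument that produced it. The field names, the `link_dataset` helper, and every value are hypothetical illustrations. They are not the schema Stocker et al. published.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class InstrumentRecord:
    """Hypothetical, simplified metadata for a persistently identified instrument."""
    identifier: str     # the instrument's persistent identifier (placeholder below)
    landing_page: str   # human-readable page the identifier resolves to
    name: str
    owner: str
    manufacturer: str
    model: str
    measured_variables: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the record as JSON, e.g., for exchange with other systems."""
        return json.dumps(asdict(self), indent=2)


def link_dataset(dataset_id: str, instrument: InstrumentRecord) -> dict:
    """Hypothetical cross-link stating which instrument produced a dataset."""
    return {"dataset": dataset_id, "producedWith": instrument.identifier}


# Placeholder values for illustration only.
sensor = InstrumentRecord(
    identifier="https://example.org/pid/instrument-0001",
    landing_page="https://example.org/instruments/0001",
    name="Example weather station",
    owner="Example Research Institute",
    manufacturer="Example Manufacturer",
    model="WS-100",
    measured_variables=["air temperature", "relative humidity"],
)
link = link_dataset("https://example.org/pid/dataset-0042", sensor)
```

The dataset stores only the instrument's identifier, not a copy of its metadata. Resolving that identifier always leads back to the current instrument record, which is what makes citation and cross-linking possible.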

Posted on September 15, 2020

By LabLynx

Journal articles

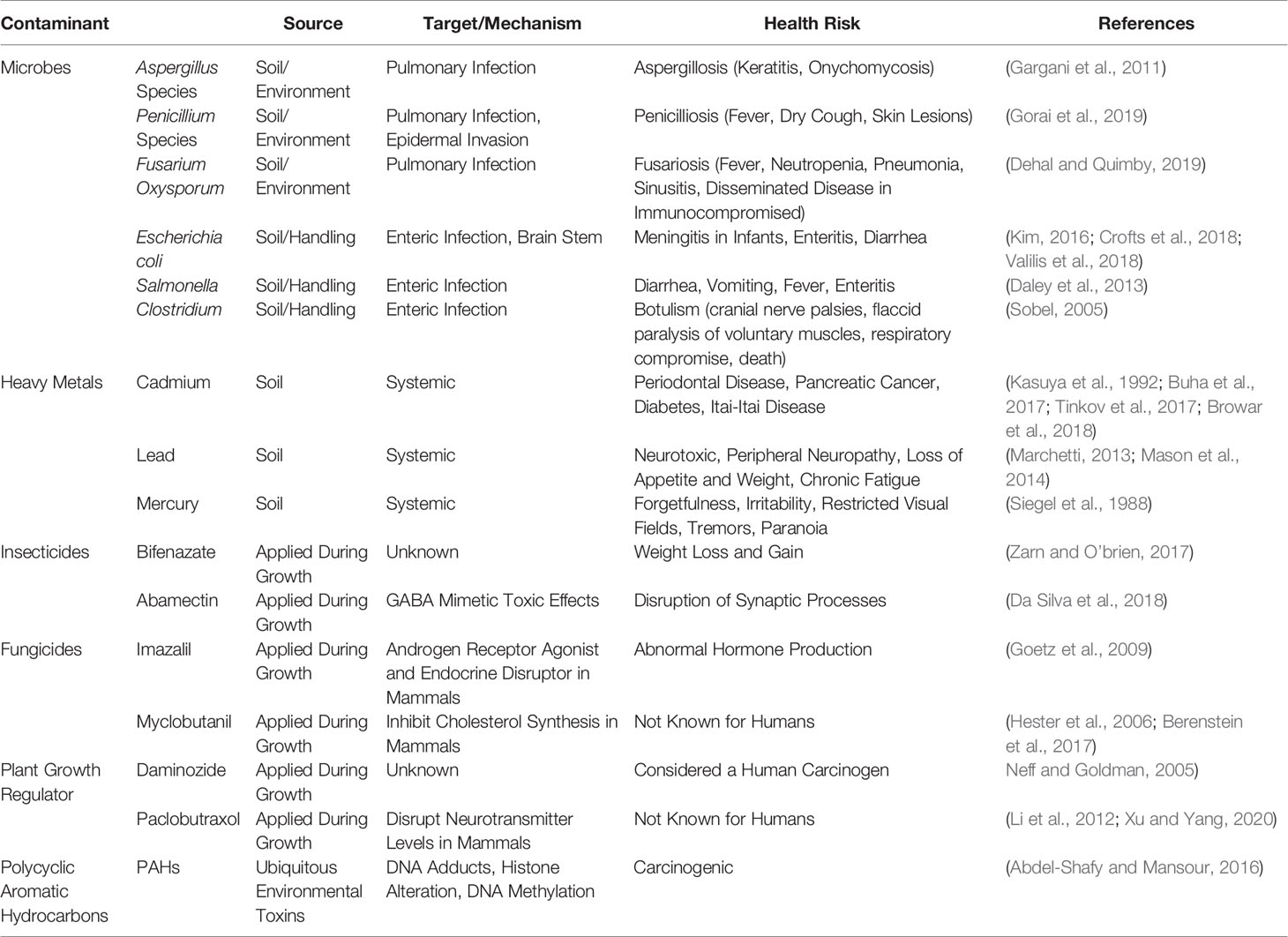

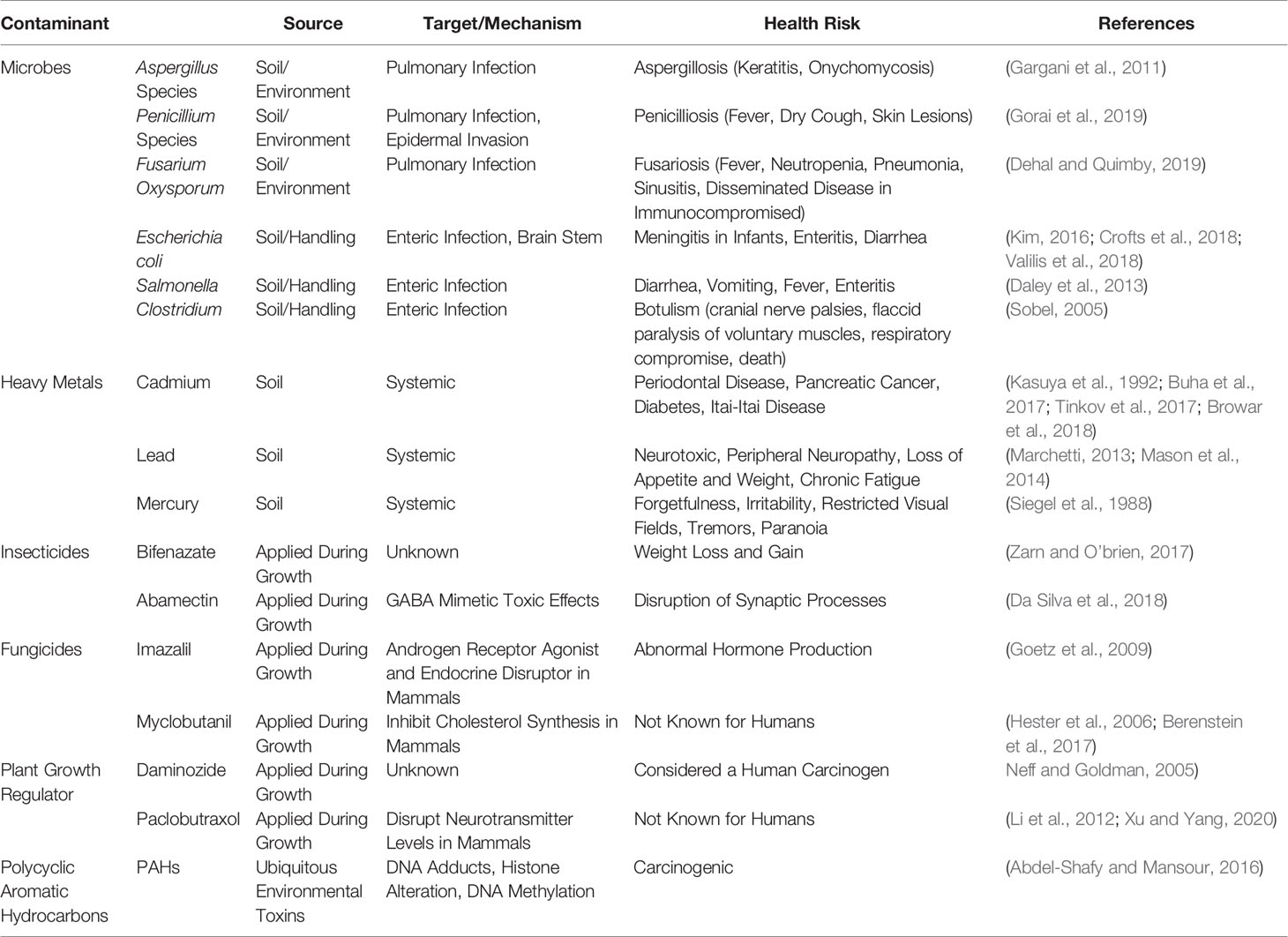

In this 2020 review published in the journal Frontiers in Pharmacology, Montoya et al. discuss the various potential contaminants found in cannabis products and the negative medical consequences those contaminants may have, particularly for immunocompromised individuals. Though the authors take a largely global perspective on the topic, they note at several points the lack of consistent standards (particularly in the United States) and what that means for the long-term health of cannabis users, especially as legalization efforts continue to move forward. In addition to contaminants such as microbes, heavy metals, pesticides, plant growth regulators, and polycyclic aromatic hydrocarbons, the authors also address the dangers of inaccurate laboratory analysis and labeling of cannabinoid content in cannabidiol (CBD)-based products. They conclude that "it is imperative to develop universal standards for cultivation and testing of products to protect those who consume cannabis."

Posted on September 13, 2020

By LabLynx

Journal articles

Originally published in a 2019 issue of F1000Research and recently revised, this second version sees Navale et al. expand on their development, implementation, and various uses of the Biomedical Research Informatics Computing System (BRICS), "designed to address the wide-ranging needs of several biomedical research programs" in the U.S. With a focus on using common data elements (CDEs) to improve data quality, consistency, and reuse, the authors explain their approach to developing BRICS, how it accepts and processes data, and how users can effectively access and share data for improved translational research. The authors also provide examples of how the open-source BRICS software is being used by the National Institutes of Health and other organizations. They conclude that BRICS not only furthers biomedical digital data stewardship under the FAIR principles but also "results in sustainable digital biomedical repositories that ensure higher data quality."
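Common data elements improve consistency because every submitted value is checked against a shared definition of its type and permissible values. The following is a minimal sketch of that kind of check. The `CommonDataElement` class, the element names `AgeYrs` and `SexAtBirth`, and their rules are hypothetical examples. They do not represent the BRICS data dictionary or its validation tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CommonDataElement:
    """Hypothetical CDE: a name, an expected type, and optional constraints."""
    name: str
    datatype: type
    permissible_values: Optional[frozenset] = None
    min_value: Optional[float] = None
    max_value: Optional[float] = None

    def validate(self, value) -> list[str]:
        """Return human-readable problems; an empty list means the value conforms."""
        if not isinstance(value, self.datatype):
            return [f"{self.name}: expected {self.datatype.__name__}, got {type(value).__name__}"]
        errors = []
        if self.permissible_values is not None and value not in self.permissible_values:
            errors.append(f"{self.name}: {value!r} is not a permissible value")
        if self.min_value is not None and value < self.min_value:
            errors.append(f"{self.name}: {value!r} is below {self.min_value}")
        if self.max_value is not None and value > self.max_value:
            errors.append(f"{self.name}: {value!r} is above {self.max_value}")
        return errors


def validate_record(record: dict, cdes: list[CommonDataElement]) -> list[str]:
    """Check a submitted record against every CDE; missing fields are reported too."""
    errors = []
    for cde in cdes:
        if cde.name not in record:
            errors.append(f"{cde.name}: missing")
        else:
            errors.extend(cde.validate(record[cde.name]))
    return errors


# Hypothetical element definitions, for illustration only.
AGE = CommonDataElement("AgeYrs", int, min_value=0, max_value=120)
SEX = CommonDataElement("SexAtBirth", str, permissible_values=frozenset({"Female", "Male", "Unknown"}))

problems = validate_record({"AgeYrs": 34, "SexAtBirth": "F"}, [AGE, SEX])
```

Here `problems` flags the value `"F"` because it is not one of the agreed permissible values. This is the kind of inconsistency that shared CDEs are meant to catch at submission time, before the data reach a repository.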

Posted on September 2, 2020

By LabLynx

Journal articles

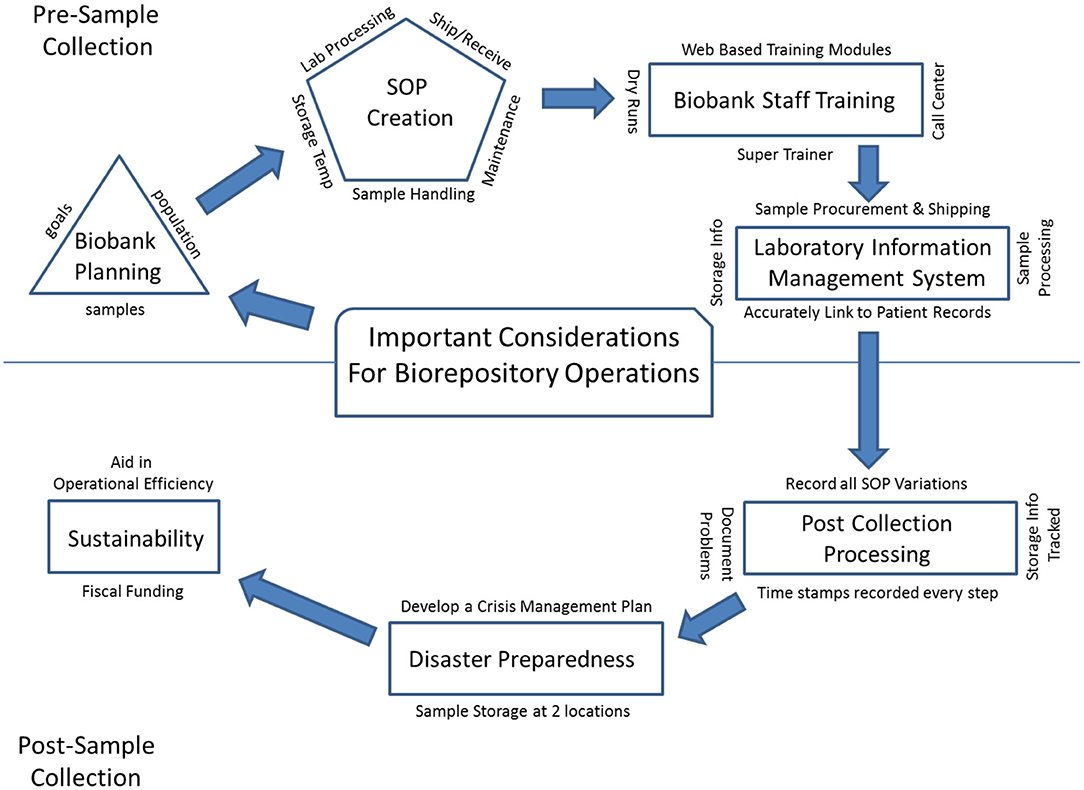

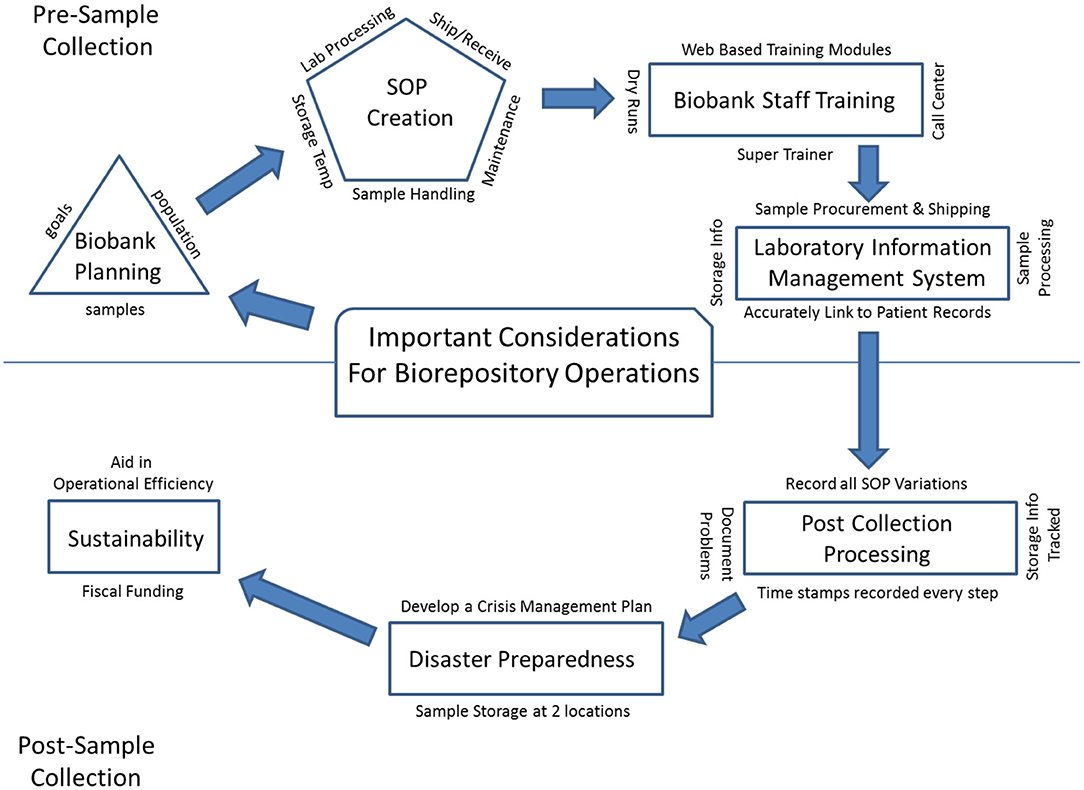

In this 2020 article published in Frontiers in Public Health, Cicek and Olson of Mayo Clinic discuss the importance of biorepository operations focused on the storage and management of biospecimens. In particular, they address the importance of maintaining quality biospecimens and enacting processes and procedures that fulfill the long-term goals of biobanking institutions. After a brief introduction to biobanks and their relation to translational research, the authors discuss the various aspects of long-term planning for a successful biobank, including the development of standard operating procedures and staff training programs, as well as the implementation of informatics tools such as the laboratory information management system (LIMS). They conclude by emphasizing that "[b]iorepository operations require an enormous amount of support, from lab and storage space, information technology expertise, and a LIMS to logistics for biospecimen tracking, quality management systems, and appropriate facilities" in order to be most effective in their goals.

Posted on August 25, 2020

By LabLynx

Journal articles

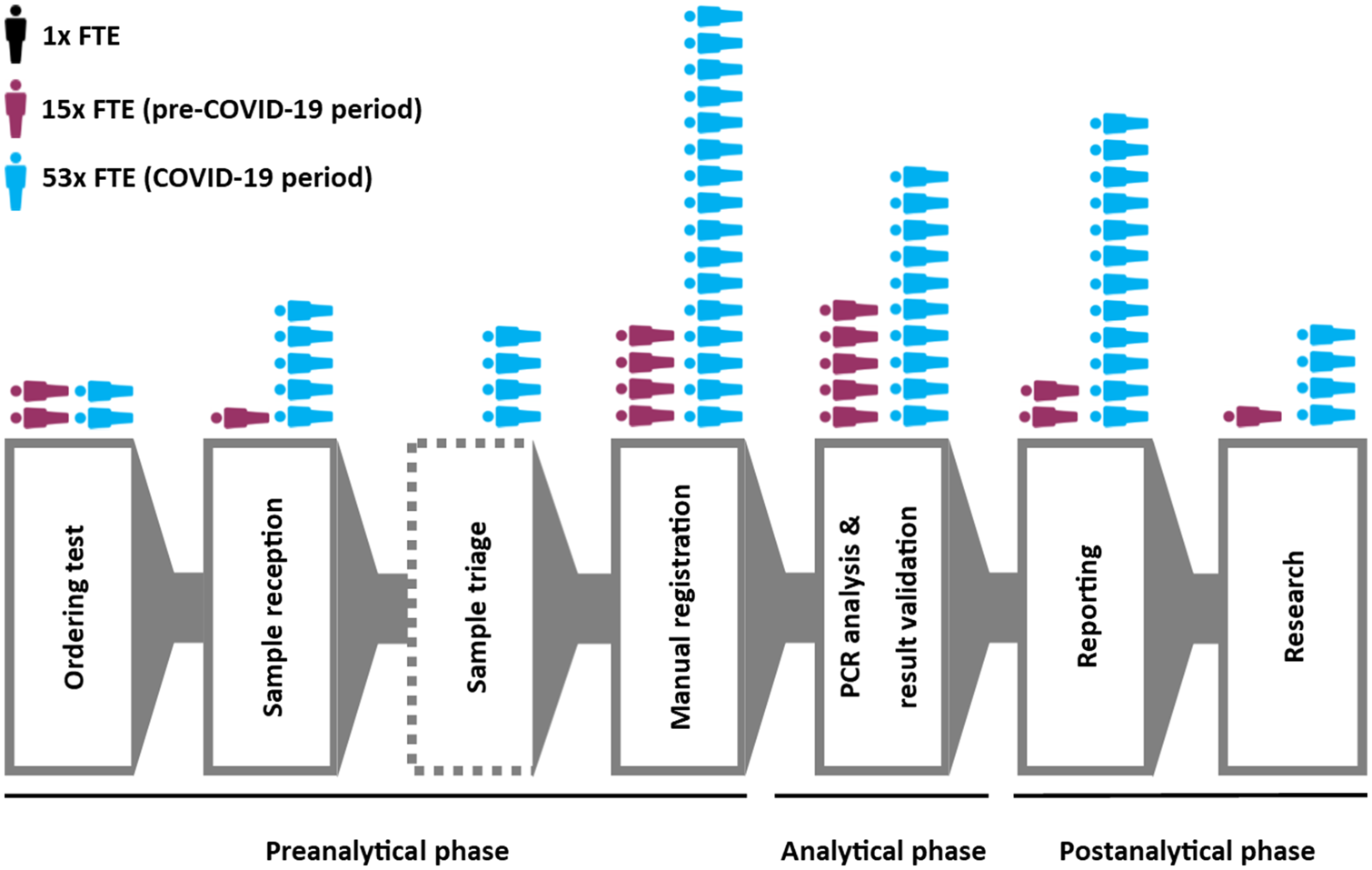

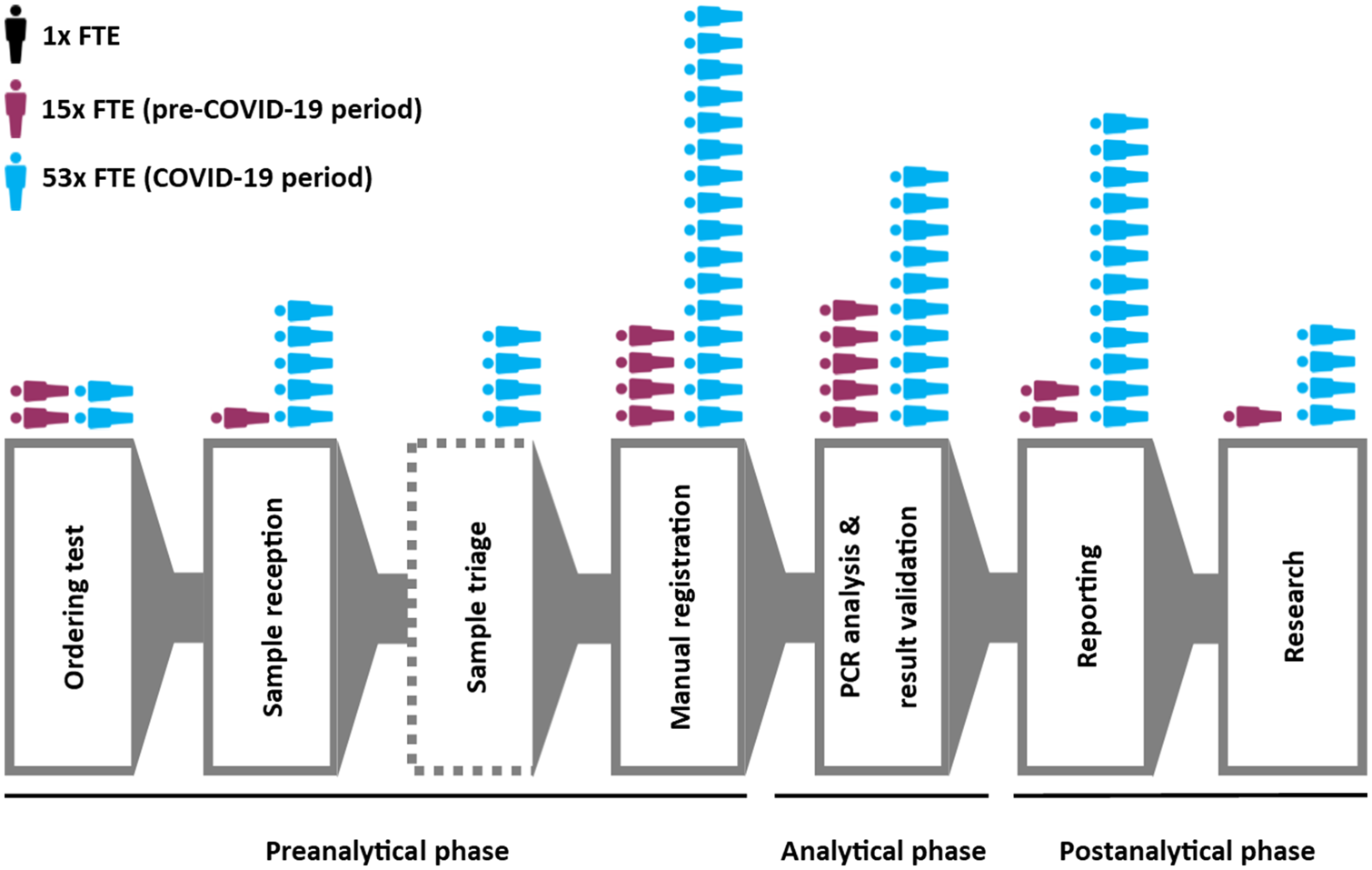

Though the COVID-19 pandemic has been underway for months, seemingly little has been published in academic journals (even ahead of print) about the technological challenges of managing the growing mountain of research and clinical diagnostic data. Weemaes et al. are one of the exceptions, with this June pre-print publication in the Journal of the American Medical Informatics Association. The authors, from Belgium's University Hospitals Leuven, present their approach to rapidly expanding their laboratory information system (LIS) to address the sudden workflow bottlenecks associated with COVID-19 testing. They describe how, by using a change management framework to drive rapid progress, they were able "to streamline sample ordering through a CPOE system, and streamline reporting by developing a database with contact details of all laboratories in Belgium," while also improving epidemiological reporting and exploratory data mining for scientific research. They also address the often-overlooked element of "meaningful use" of their LIS.

Posted on August 18, 2020

By LabLynx

Journal articles

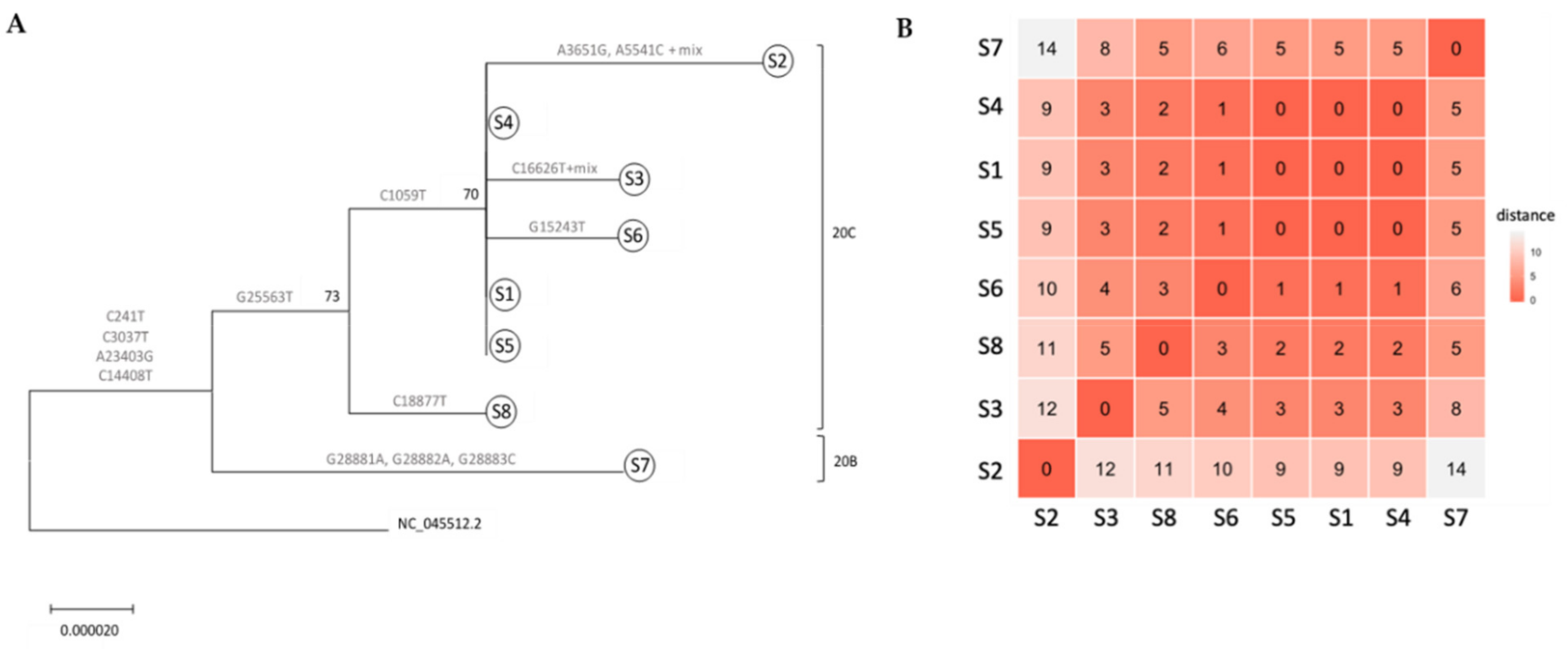

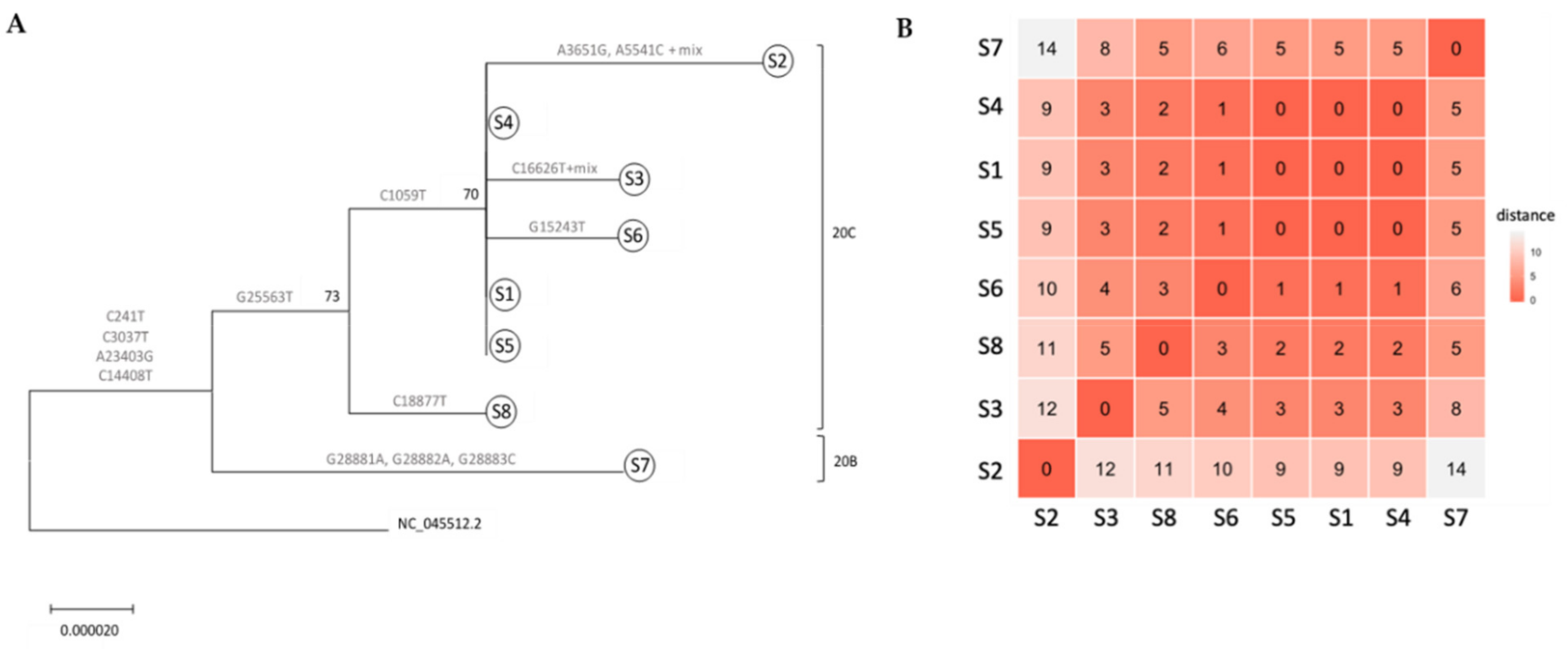

With COVID-19, we think of small outbreaks occurring when people are in close proximity at restaurants, parties, and other events. But what about laboratories? Zuckerman et al. share their account of a small COVID-19 outbreak at Israel's Central Virology Laboratory (ICVL) in mid-March 2020 and how they used their own laboratory technology to determine the sources of transmission. With eight individuals known to have tested positive for the SARS-CoV-2 virus, the researchers walk step by step through their quarantine and testing processes to "elucidate person-to-person transmission events, map individual and common mutations, and examine suspicions regarding contaminated surfaces." Their analyses found that person-to-person contact, not contact with contaminated surfaces, was the transmission path. They conclude that their overall analysis verifies the value of molecular testing and of capturing complete viral genomes for determining transmission vectors "and confirms that the strict safety regulations observed in ICVL most likely prevented further spread of the virus."

Posted on August 11, 2020

By LabLynx

Journal articles

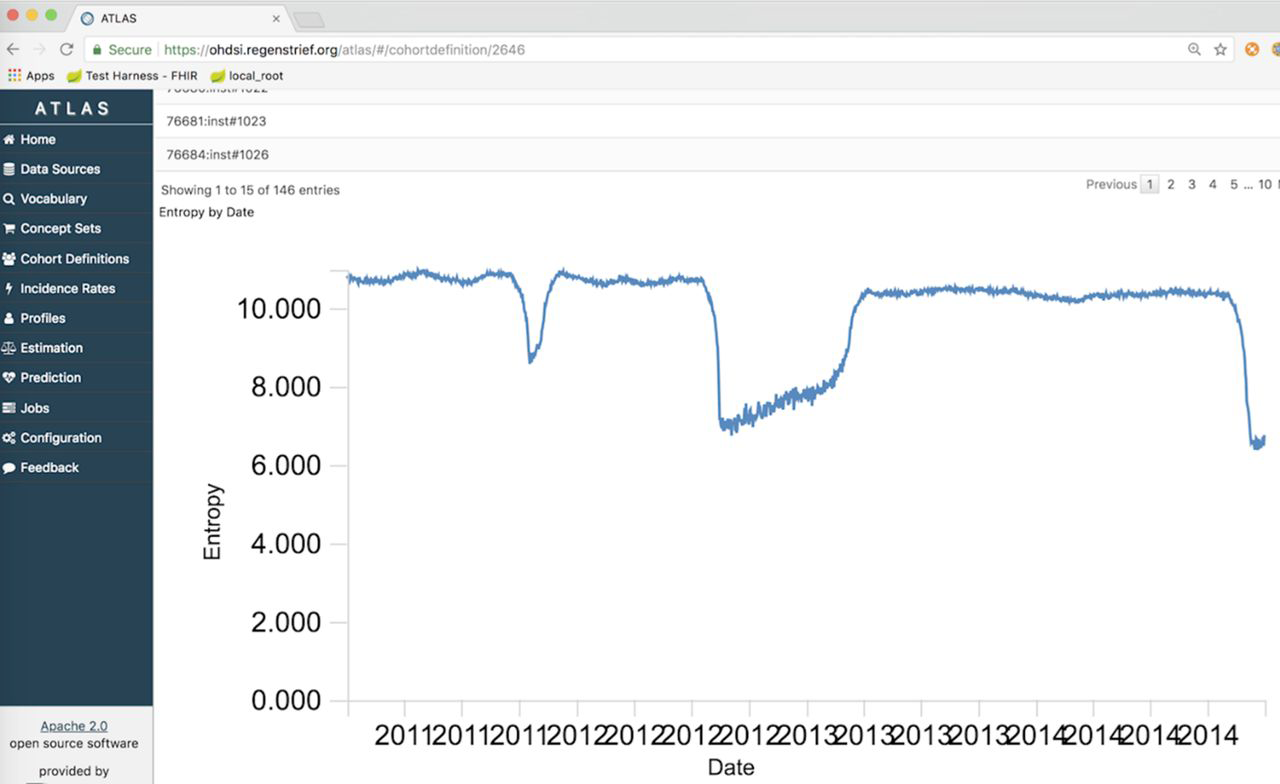

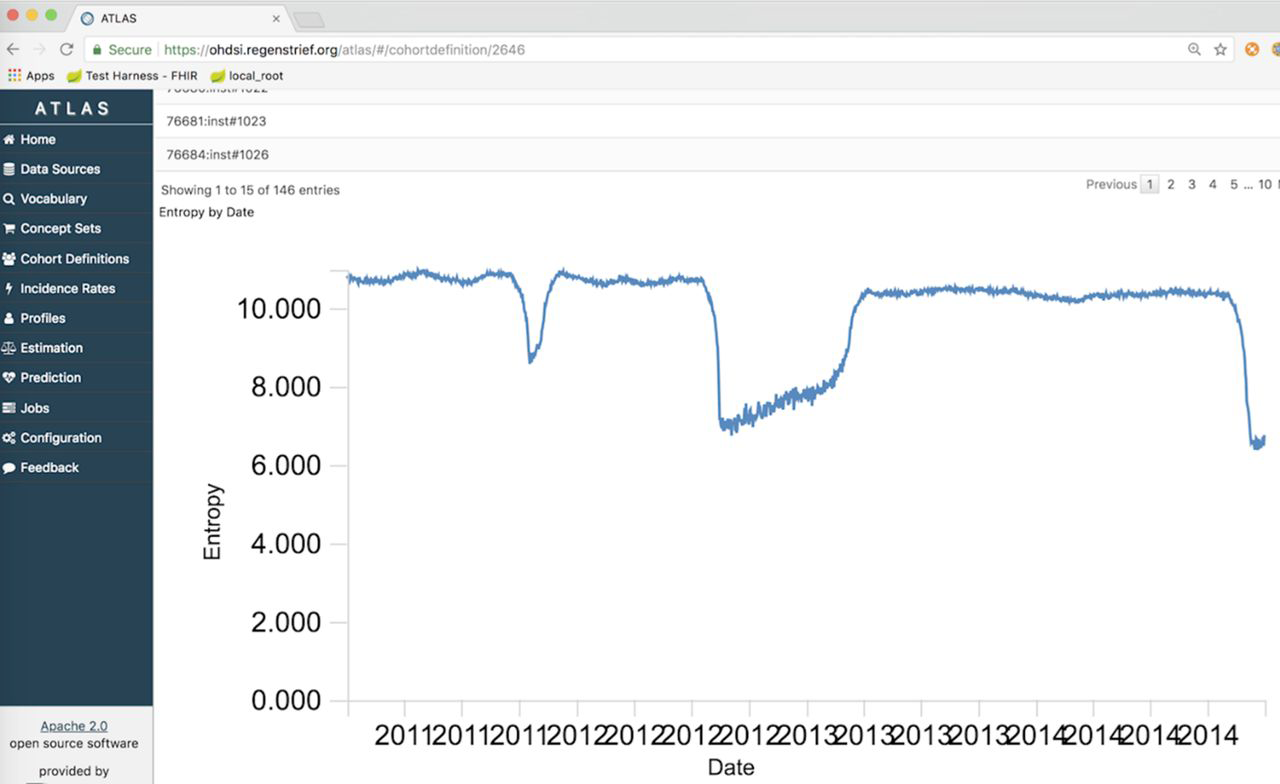

In this 2020 short report, Dixon et al. present lessons learned from attempts to expand data quality measures in the open-source tool Observational Health Data Science and Informatics (OHDSI). Noting a general lack of data quality assessment and improvement mechanisms in health information systems, the researchers sought to improve OHDSI for public health surveillance use cases. After explaining the practical uses of OHDSI, the authors make their case for why measuring completeness, timeliness, and information entropy within OHDSI would be useful. Though they did not get approval to add timeliness to the system, they conclude that there is high value "in adapting existing infrastructure and tools to support expanded use cases rather than to just create independent tools for use by a niche group."
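The three measures named above can be stated compactly. The following is a minimal sketch using common textbook formulations. Completeness is the fraction of non-missing values. Information entropy is the Shannon entropy, in bits, of the observed values. Timeliness is measured here as the median lag between an event and its report. These definitions and function names are assumptions for illustration, not the exact measures Dixon et al. implemented in OHDSI.

```python
import math
from collections import Counter
from datetime import date
from statistics import median


def _present(values):
    """Treat None and empty strings as missing."""
    return [v for v in values if v is not None and v != ""]


def completeness(values) -> float:
    """Fraction of values that are present (0.0 for an empty field)."""
    values = list(values)
    return len(_present(values)) / len(values) if values else 0.0


def shannon_entropy(values) -> float:
    """Shannon entropy, in bits, of the distribution of present values.
    Values near 0 mean the field almost always holds one value, which
    can signal a default value being entered by habit."""
    present = _present(values)
    n = len(present)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(present).values())


def median_reporting_lag_days(pairs) -> float:
    """Timeliness: median days from event date to report date.
    Raises statistics.StatisticsError if no pairs are given."""
    lags = [(reported - event).days for event, reported in pairs]
    return median(lags)


# Hypothetical field values and dates, for illustration only.
field_values = ["A", "B", "A", None, "B", ""]
lag_pairs = [
    (date(2020, 3, 1), date(2020, 3, 4)),
    (date(2020, 3, 2), date(2020, 3, 3)),
    (date(2020, 3, 5), date(2020, 3, 10)),
]
```

For `field_values`, completeness is 4/6 and the entropy is 1.0 bit, because the two present values are equally common. The median lag for `lag_pairs` is 3 days.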

Posted on August 4, 2020

By LabLynx

Journal articles

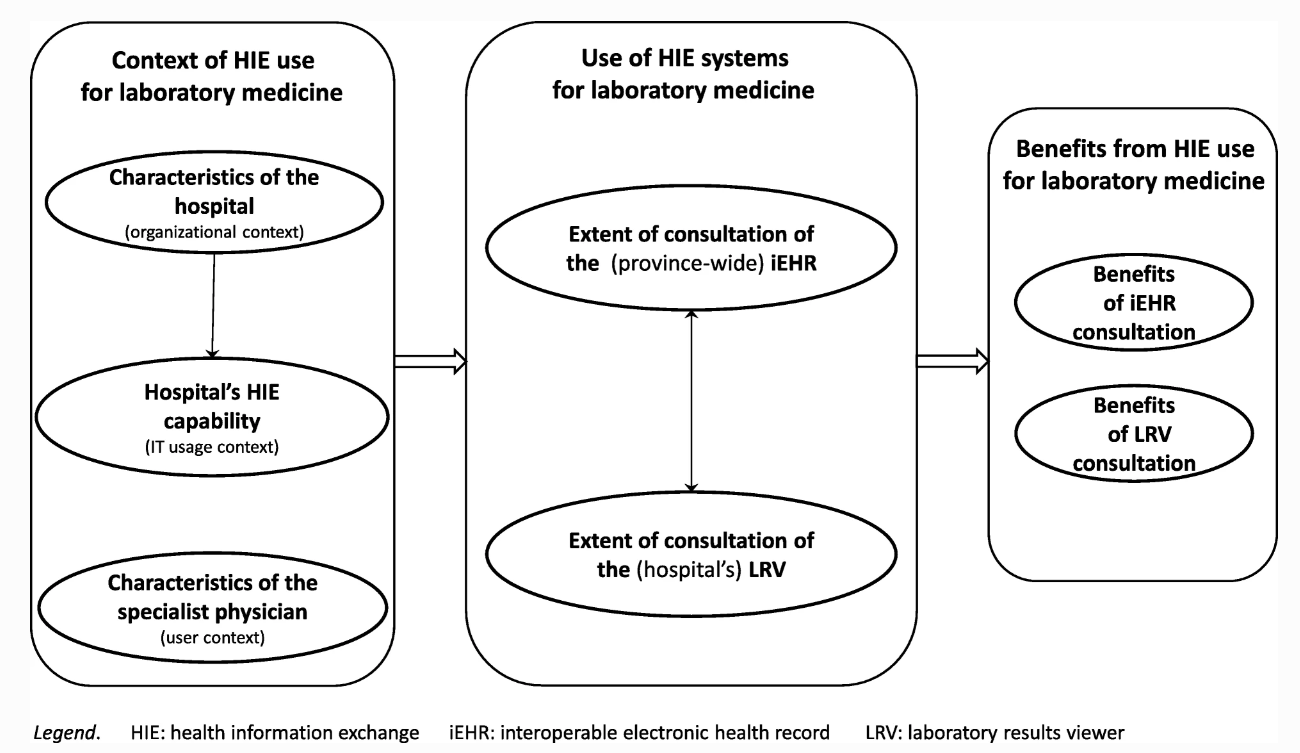

This February 2020 paper published in

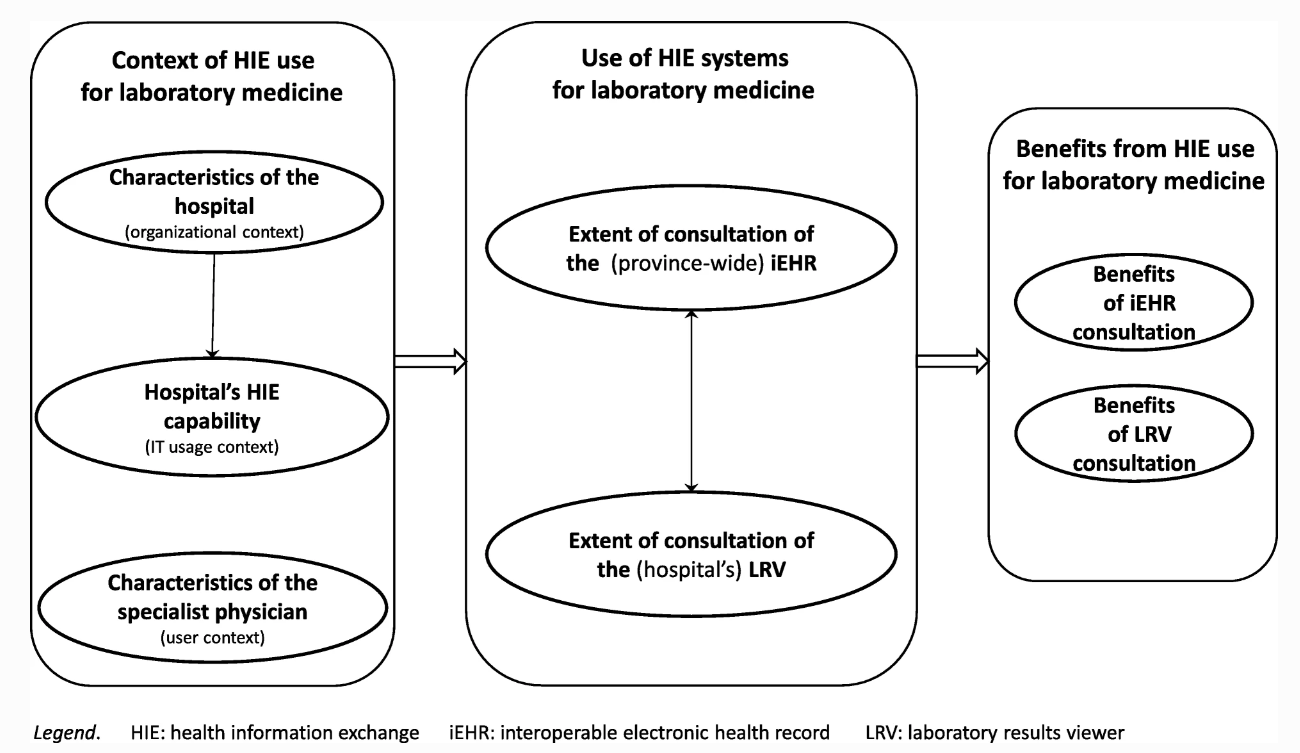

BMC Medical Informatics and Decision Making examines the state of health information exchange in Québec and other parts of Canada and how its application to laboratory medicine might be improved. In particular, laboratory information exchange (LIE) systems that "improve the reliability of the laboratory testing process" and integrate "with other clinical information systems (CISs) physicians use in hospitals" are examined in this work. Surveying hospital-based specialist physicians, Raymond

et al. paint a picture of how varying clinical information management solutions are used, what functionality is being used and not used, and how physicians view the potential benefits of the clinical systems they use. They conclude that there is very much a "complementary nature" between systems and that "system designers should take a step back to imagine a way to design systems as part of an interconnected network of features."

Posted on July 28, 2020

By LabLynx

Journal articles

In this 2020 paper published in the journal Metabolites, Krill et al. of Australia's AgriBio present their findings from an attempt to reduce the runtimes and extraction complexity associated with quantifying terpenes in cannabis biomass. Noting the evolving needs of high-throughput cannabis breeding programs, the researchers present a method "based on a simple hexane extract from 40 mg of biomass, with 50 μg/mL dodecane as internal standard, and a gradient of less than 30 minutes." After providing background on current terpene extraction approaches, the researchers discuss the various aspects of their method and detail the materials and equipment used. They conclude that their method "covers a large cross-section of commonly detected cannabis volatiles, is validated for a large proportion of compounds it covers, and offers significant improvement in terms of sample preparation and sample throughput over previously published studies."
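An internal standard such as dodecane is added at a known concentration so that each analyte can be quantified from the ratio of its peak area to the standard's. This ratio cancels out run-to-run variation in injection volume and detector response. The following is a minimal sketch of the generic single-point relative response factor (RRF) calculation, assuming a linear response. Validated methods such as the one Krill et al. describe typically rely on multi-point calibration curves instead, and all peak areas below are hypothetical.

```python
def relative_response_factor(area_analyte_std: float, conc_analyte_std: float,
                             area_is_std: float, conc_is_std: float) -> float:
    """RRF from a calibration standard: detector response per unit
    concentration of the analyte, relative to that of the internal standard."""
    return (area_analyte_std / conc_analyte_std) / (area_is_std / conc_is_std)


def quantify(area_analyte: float, area_is: float, conc_is: float, rrf: float) -> float:
    """Analyte concentration in the extract, in the same units as conc_is."""
    return (area_analyte / area_is) * conc_is / rrf


IS_CONC = 50.0  # µg/mL internal standard, matching the concentration quoted above

# Hypothetical calibration standard: analyte at 20 µg/mL gives area 4000,
# internal standard gives area 5000.
rrf = relative_response_factor(4000, 20.0, 5000, IS_CONC)    # 2.0

# Hypothetical sample extract: analyte area 3000, internal standard area 6000.
conc = quantify(3000, 6000, IS_CONC, rrf)                    # 12.5 µg/mL
```

Converting the extract concentration to a content per unit of biomass would additionally require the extract volume and the sample mass (40 mg in the quoted method).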

Posted on July 21, 2020

By LabLynx

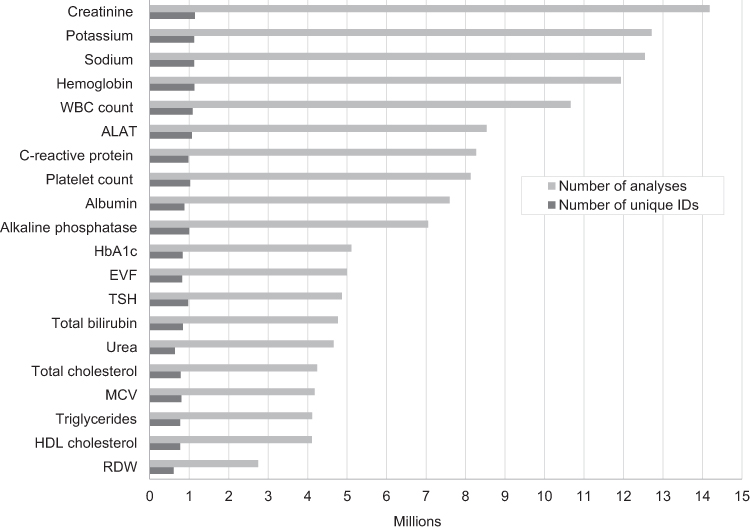

Journal articles

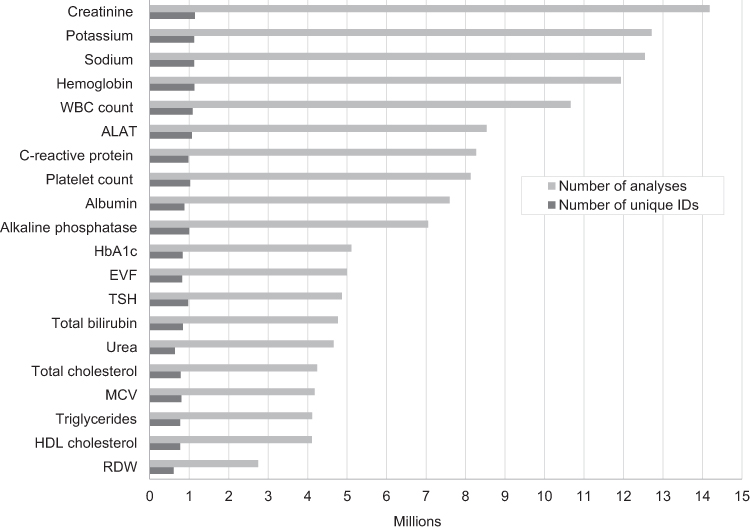

In this data resource review, researchers at several Danish hospitals discuss how the country puts to use two laboratory information systems (LISs) that collect routine biomarker data. The researchers explain how data are collected into the LISs, how data quality is managed, and how the data are used, providing several real-world examples. They then discuss the strengths and weaknesses of the data for epidemiological research, as well as how the data can be accessed for research. They emphasize "that access to data on routine biomarkers expands the detailed biological and clinical information available on patients in the Danish healthcare system," while the "full potential is enabled through linkage to other Danish healthcare registries."

Posted on July 14, 2020

By LabLynx

Journal articles

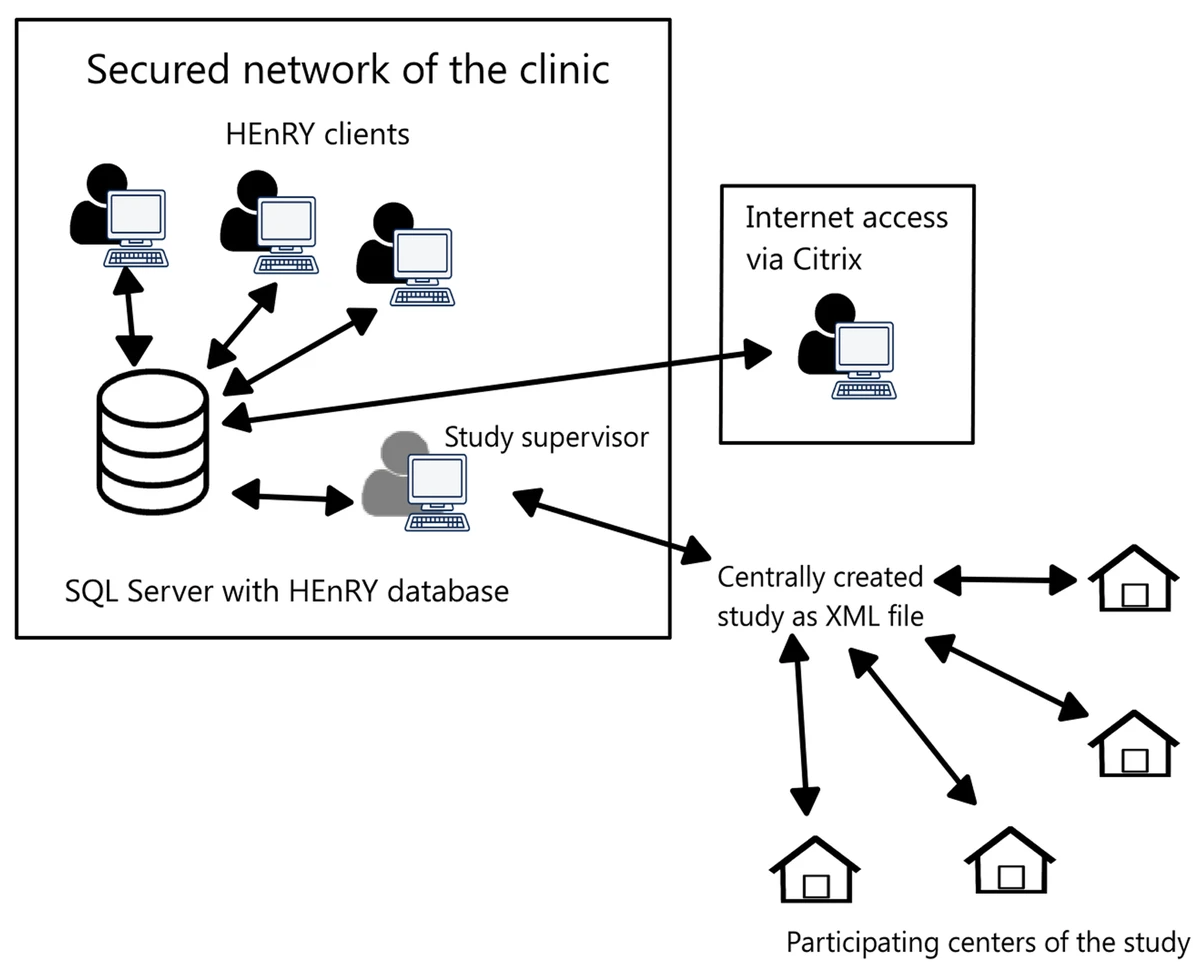

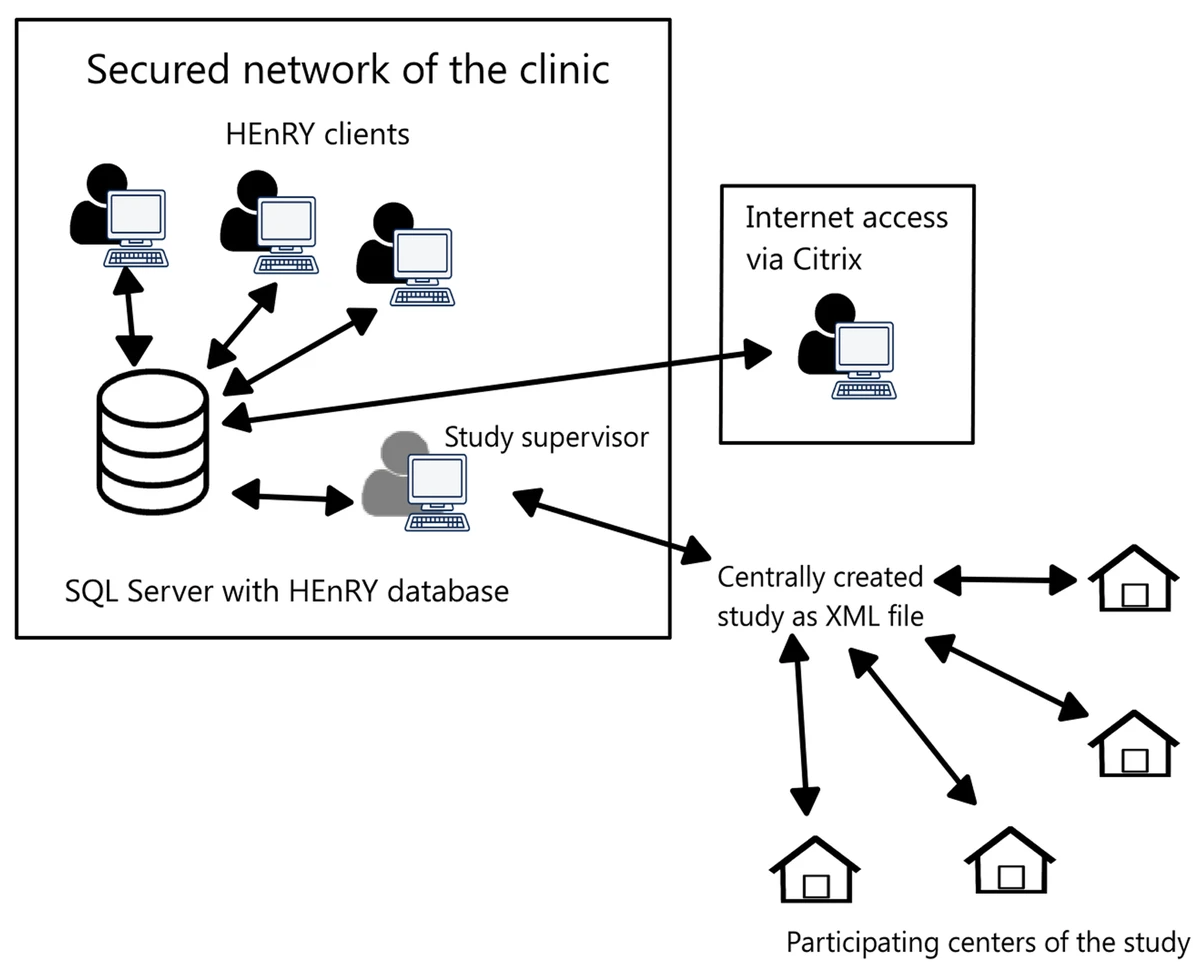

Effective biobanking of biospecimens for multicenter studies today requires more than spreadsheets and paper documents; a software system that improves workflows and data sharing while keeping sensitive personal information deidentified is essential. Both commercial off-the-shelf (COTS) and open-source biobanking laboratory information management systems (LIMS) are available, but, as the University of Cologne found, those options may be too complex to implement or too costly for multicenter research networks. The university took matters into its own hands and developed the HIV Engaged Research Technology (HEnRY) LIMS, which has since expanded into a broader, open-source biobanking solution that can be applied to contexts beyond HIV research. This 2020 paper discusses the LIMS and its development and application, concluding that it offers "immense potential for emerging research groups, especially in the setting of limited resources and/or complex multicenter studies."

Posted on July 10, 2020

By LabLynx

Journal articles

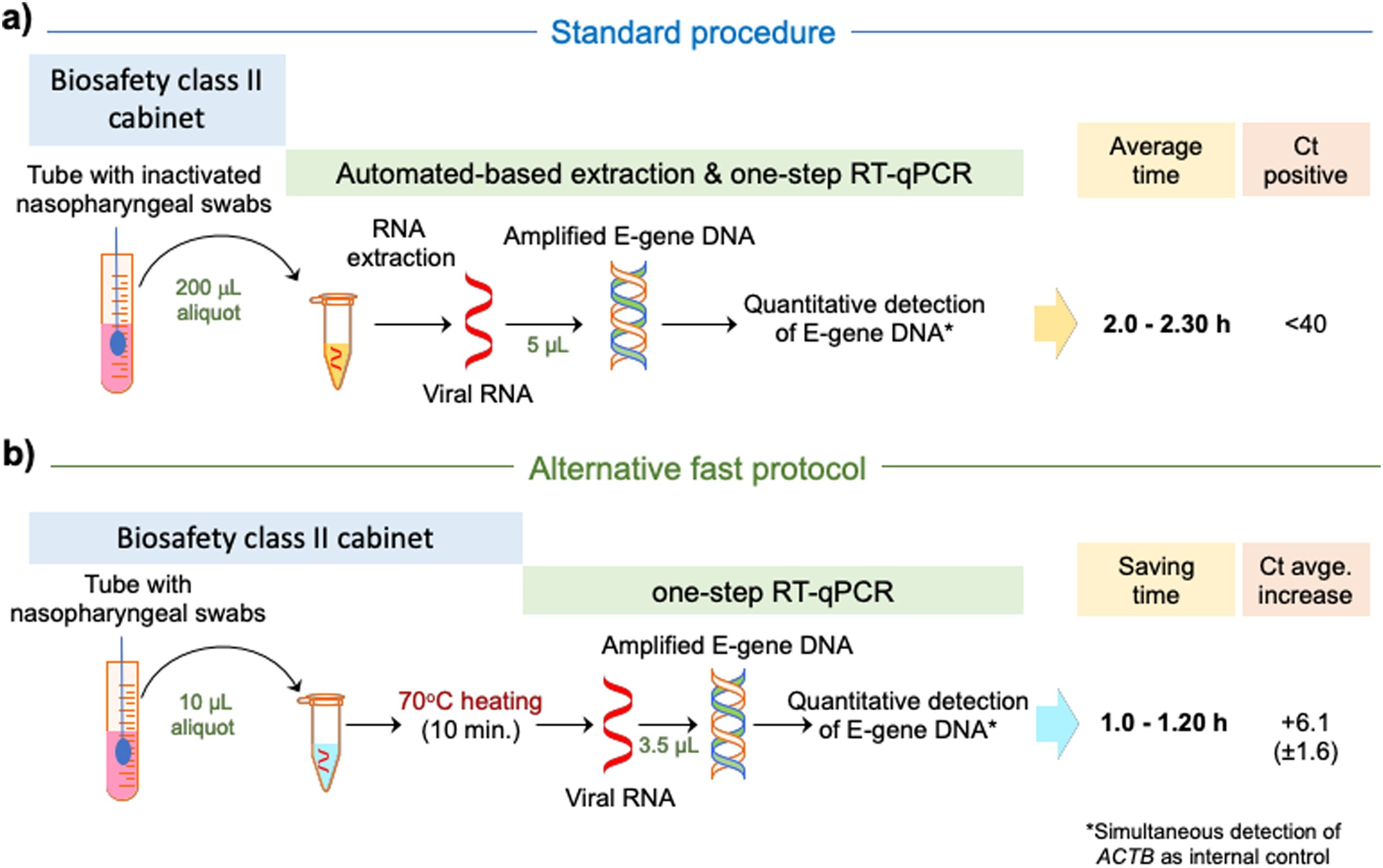

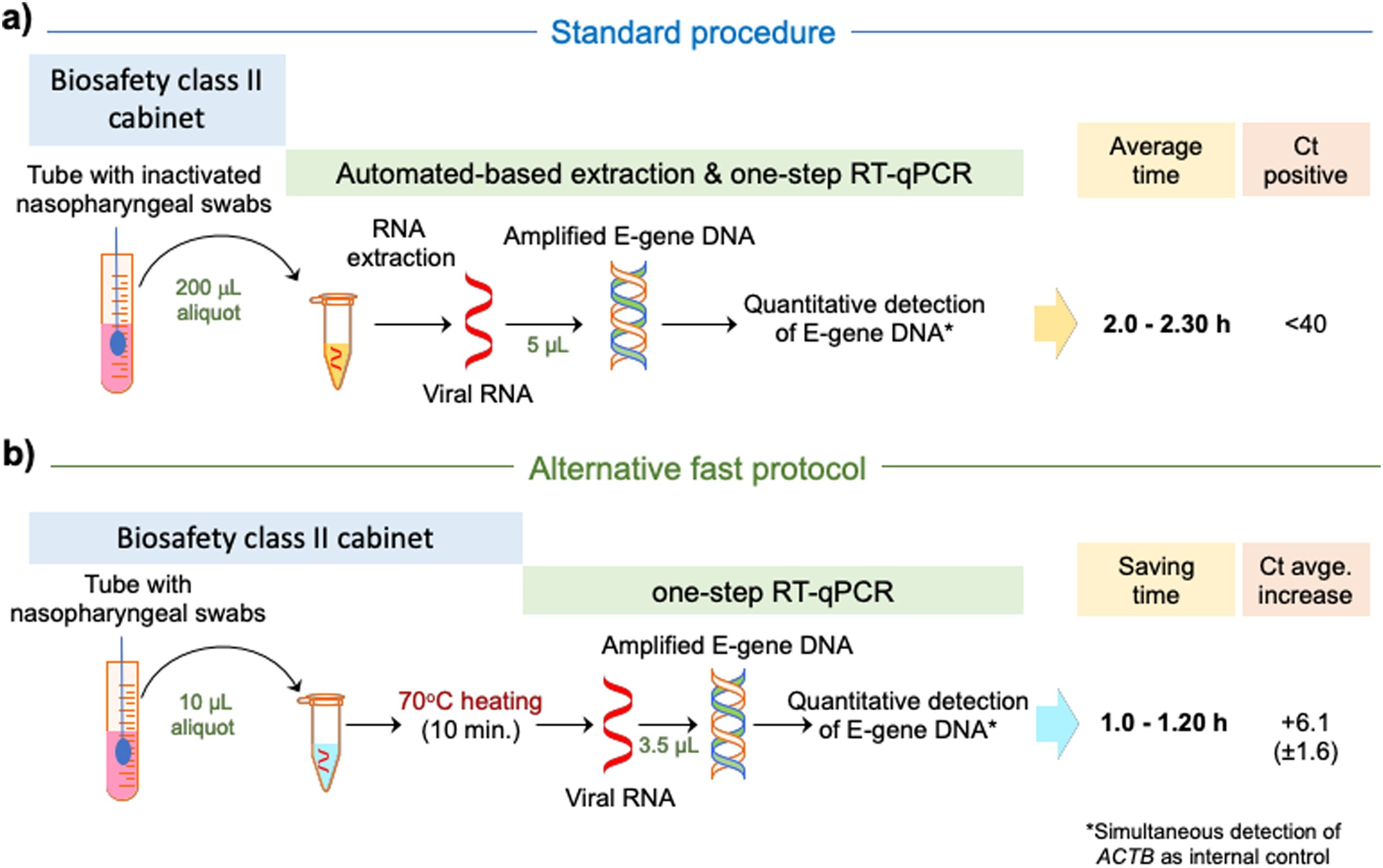

This "short communication" paper published in the International Journal of Infectious Diseases describes the results of simplifying reverse transcription and real-time quantitative PCR (RT-qPCR) analyses for SARS-CoV-2 by skipping the RNA extraction step and instead testing three approaches to directly heating the nasopharyngeal swab sample. Among the three methods tested (heating directly without additives, heating with a formamide-EDTA buffer, and heating with an RNAsnap buffer), the direct heating method "provided the best results, which were highly consistent with the SARS-CoV-2 infection diagnosis based on standard RNA extraction," while also cutting processing time nearly in half. The authors warn, however, that the "choice of RT-qPCR kits might have an impact on the sensitivity of the Direct protocol" when trying to replicate their results.

Posted on July 6, 2020

By LabLynx

Journal articles

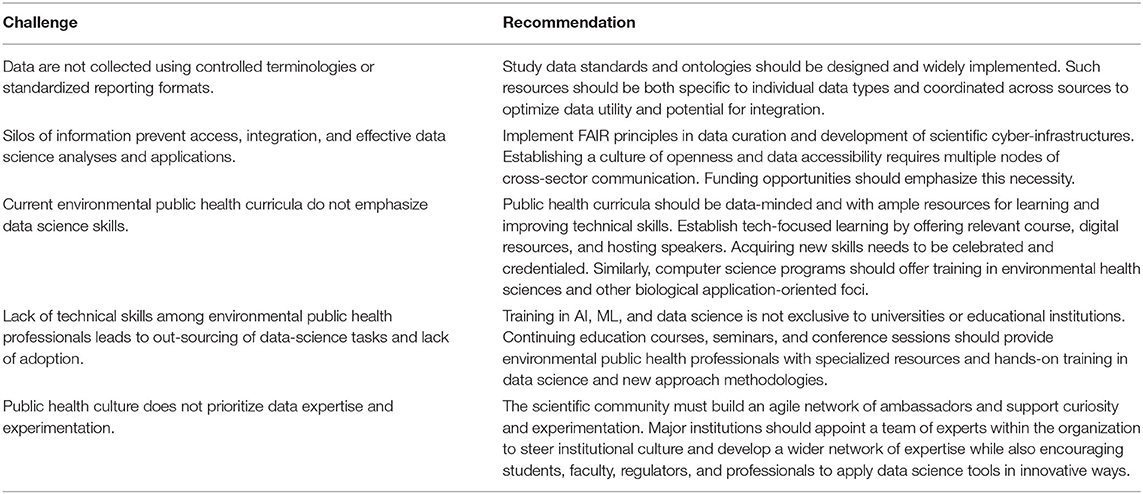

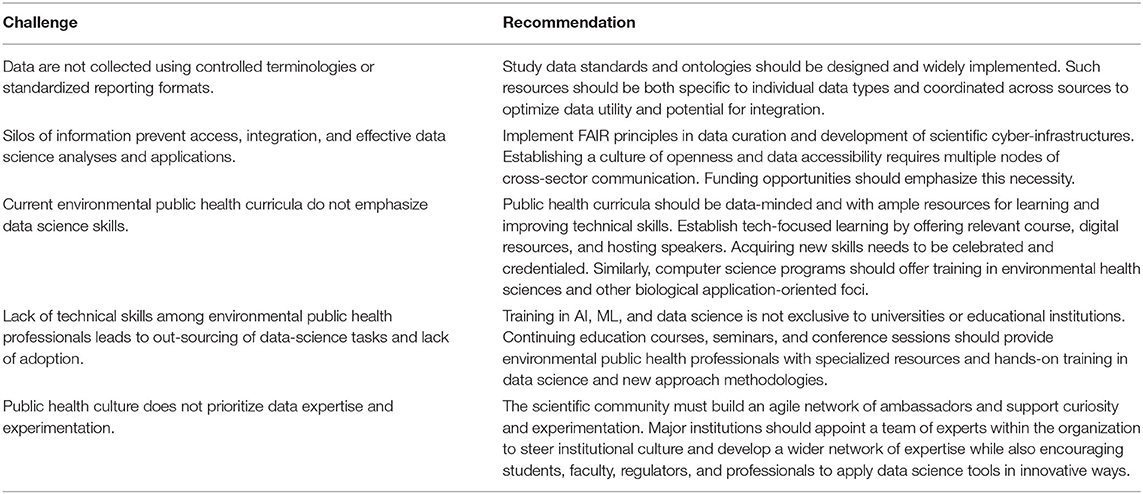

When it comes to analyzing the effects on humans of chemicals and other substances that make their way into the environment, "large, complex data sets" are often required. However, those data sets are often disparate and inconsistent, which has made epidemiological studies of air pollution and other types of environmental contamination difficult and limited in effectiveness. This problem has been compounded in the age of big data. Comess et al. address the challenges associated with today's environmental public health data in this recent paper published in Frontiers in Artificial Intelligence and discuss how augmented intelligence, artificial intelligence (AI), and machine learning can enhance understanding of that data. However, they note, additional work must go not only into improving analysis but also into improving how data is collected and shared, how researchers are trained in scientific computing and data science, and how widely the benefits of AI and machine learning are understood. They conclude their paper with a table of five distinct challenges and their recommended ways to address them so as to "create an environment that will foster the data revolution" in environmental public health and beyond.

Posted on June 23, 2020

By LabLynx

Journal articles

In this 2020 paper published in the journal Toxins, Di Nardo et al. of the University of Turin present their findings from adapting an existing enzyme immunoassay to cannabis testing for the purpose of more accurately detecting mycotoxins, including aflatoxins such as aflatoxin B1 (AFB1). Citing benefits such as fewer steps, more cost-efficient equipment, and lower training demands, the authors viewed applying enzyme immunoassay to testing cannabis for mycotoxins as a worthy endeavor. Di Nardo et al. discuss at length how they converted an immunoassay for measuring aflatoxins in eggs to one for the cannabis substrate, as well as the various challenges and caveats associated with the resulting methodology. Compared with techniques such as ultra-high performance liquid chromatography coupled to high-resolution tandem mass spectrometry, the authors concluded, enzyme immunoassay more readily allows for "wide applications in low resource settings and for the affordable monitoring of the safety of cannabis products, including those used recreationally and as a food supplement."

Cybersecurity and how it's handled by an organization has many facets, from examining existing systems, setting organizational goals, and investing in training, to probing for weaknesses, monitoring access, and containing breaches. An additional and vital element an organization must consider is how to conduct "effective and interoperable cybersecurity information sharing." As Rantos et al. note, while the adoption of standards, best practices, and policies prove useful to incorporating shared cybersecurity threat information, "a holistic approach to the interoperability problem" of sharing cyber threat information and intelligence (CTII) is required. The authors lay out their case for this approach in their 2020 paper published in Computers, concluding that their method effectively "addresses all those factors that can affect the exchange of cybersecurity information among stakeholders."

Cybersecurity and how it's handled by an organization has many facets, from examining existing systems, setting organizational goals, and investing in training, to probing for weaknesses, monitoring access, and containing breaches. An additional and vital element an organization must consider is how to conduct "effective and interoperable cybersecurity information sharing." As Rantos et al. note, while the adoption of standards, best practices, and policies prove useful to incorporating shared cybersecurity threat information, "a holistic approach to the interoperability problem" of sharing cyber threat information and intelligence (CTII) is required. The authors lay out their case for this approach in their 2020 paper published in Computers, concluding that their method effectively "addresses all those factors that can affect the exchange of cybersecurity information among stakeholders."

In this "short communication" from the International Journal of Infectious Diseases, Xu et al. provide a succinct review of the currently available laboratory testing technologies used to verify COVID-19 infection in patients. The authors review viral cultures, whole genome sequencing, real-time RT-PCR, isothermal amplification, and serological testing, as well as point-of-care testing utilizing one of those methods. They also discuss the sample types that should be collected, and at what stage they are most effective. They close by noting that choosing "a diagnostic assay for COVID-19 should take the characteristics and advantages of various technologies, as well as different clinical scenarios and requirements, into full consideration."

In this "short communication" from the International Journal of Infectious Diseases, Xu et al. provide a succinct review of the currently available laboratory testing technologies used to verify COVID-19 infection in patients. The authors review viral cultures, whole genome sequencing, real-time RT-PCR, isothermal amplification, and serological testing, as well as point-of-care testing utilizing one of those methods. They also discuss the sample types that should be collected, and at what stage they are most effective. They close by noting that choosing "a diagnostic assay for COVID-19 should take the characteristics and advantages of various technologies, as well as different clinical scenarios and requirements, into full consideration."

In this 2020 paper published in Medical Cannabis and Cannabinoids, ElSohly et al. present the results from an effort to demonstrate the use of a relatively basic gas chromatography–mass spectrometry (GC-MS) method for accurately measuring the cannabidiol, tetrahydrocannabinol, cannabidiolic acid, and tetrahydrocannabinol acid content of CBD oil and hemp oil products. Noting the problems with inaccurate labels and the proliferation of CBD products suddenly for sale, the authors emphasize the importance of a precise and reproducible method for ensuring those products' cannabinoid and acid precursor concentration claims are accurate. From their results, they conclude their validated method achieves that goal.

In this 2020 paper published in Medical Cannabis and Cannabinoids, ElSohly et al. present the results from an effort to demonstrate the use of a relatively basic gas chromatography–mass spectrometry (GC-MS) method for accurately measuring the cannabidiol, tetrahydrocannabinol, cannabidiolic acid, and tetrahydrocannabinol acid content of CBD oil and hemp oil products. Noting the problems with inaccurate labels and the proliferation of CBD products suddenly for sale, the authors emphasize the importance of a precise and reproducible method for ensuring those products' cannabinoid and acid precursor concentration claims are accurate. From their results, they conclude their validated method achieves that goal.

Sure, there are laboratory methods for looking for a small number of specific contaminates in cannabis substrates (target screening), but what about more than a thousand at one time (suspect screening)? In this 2020 paper published in Medical Cannabis and Cannabinoids, Wylie et al. demonstrate a method to screen cannabis extracts for more than 1,000 pesticides, herbicides, fungicides, and other pollutants using gas chromatography paired with a high-resolution accurate mass quadrupole time-of-flight mass spectrometer (GC/Q-TOF), in conjunction with several databases. They note that while some governmental bodies are mandating a specific subset of contaminates to be tested for in cannabis products, some cultivators may still use unapproved pesticides and such that aren't officially tested for, putting medical cannabis and recreational users alike at risk. As proof-of-concept, the authors describe their suspect screening materials, methods, and results of using ever-improving mass spectrometry methods to find hundreds of pollutants at one time. Rather than make specific statements about this method, the authors instead let the results of testing confiscated cannabis samples largely speak to the viability of the method.

Sure, there are laboratory methods for looking for a small number of specific contaminates in cannabis substrates (target screening), but what about more than a thousand at one time (suspect screening)? In this 2020 paper published in Medical Cannabis and Cannabinoids, Wylie et al. demonstrate a method to screen cannabis extracts for more than 1,000 pesticides, herbicides, fungicides, and other pollutants using gas chromatography paired with a high-resolution accurate mass quadrupole time-of-flight mass spectrometer (GC/Q-TOF), in conjunction with several databases. They note that while some governmental bodies are mandating a specific subset of contaminates to be tested for in cannabis products, some cultivators may still use unapproved pesticides and such that aren't officially tested for, putting medical cannabis and recreational users alike at risk. As proof-of-concept, the authors describe their suspect screening materials, methods, and results of using ever-improving mass spectrometry methods to find hundreds of pollutants at one time. Rather than make specific statements about this method, the authors instead let the results of testing confiscated cannabis samples largely speak to the viability of the method.

In this 2020 paper published in Data Science Journal, Stocker et al. present their initial attempts at generating a schema for persistently identifying scientific measuring instruments, much in the same way journal articles and data sets can be persistently identified using digital object identifiers (DOIs). They argue that "a persistent identifier for instruments would enable research data to be persistently associated with" vital metadata associated with instruments used to produce research data, "helping to set data into context." As such, they demonstrate the development and implementation of a schema to address the managements of instruments and the data they produce. After discussing their methodology, results, those results' interpretation, and adopted uses of the schema, the authors conclude by declaring the "practical viability" of the schema "for citation, cross-linking, and retrieval purposes," and promoting the schema's future development and adoption as a necessary task,

In this 2020 paper published in Data Science Journal, Stocker et al. present their initial attempts at generating a schema for persistently identifying scientific measuring instruments, much in the same way journal articles and data sets can be persistently identified using digital object identifiers (DOIs). They argue that "a persistent identifier for instruments would enable research data to be persistently associated with" vital metadata associated with instruments used to produce research data, "helping to set data into context." As such, they demonstrate the development and implementation of a schema to address the managements of instruments and the data they produce. After discussing their methodology, results, those results' interpretation, and adopted uses of the schema, the authors conclude by declaring the "practical viability" of the schema "for citation, cross-linking, and retrieval purposes," and promoting the schema's future development and adoption as a necessary task,

In this 2020 review published in the journal Frontiers in Pharmacology, Montoya et al. discuss the various potential contaminants found in cannabis products and how those contaminants may create negative consequences medically, particularly for immunocompromised individuals. Though the authors take a largely global perspective on the topic, they note at several points the lack of consistent standards—particularly in the United States—and what that means for the long-term health of cannabis users, especially as legalization efforts continue to move forward. In addition to contaminants such as microbes, heavy metals, pesticides, plant growth regulators, and polycyclic aromatic hydrocarbons, the authors also address the dangers that come with inaccurate laboratory analyses and labeling of cannabinoid content in cannabidiol (CBD)-based products. They conclude that "it is imperative to develop universal standards for cultivation and testing of products to protect those who consume cannabis."

Appearing originally in a 2019 issue of F1000Research, this recently revised second version sees Navale et al. expand on their development, implementation, and various uses of the Biomedical Research Informatics Computing System (BRICS), "designed to address the wide-ranging needs of several biomedical research programs" in the U.S. With a focus on using common data elements (CDEs) for improving data quality, consistency, and reuse, the authors explain their approach to BRICS development, how it accepts and processes data, and how users can effectively access and share data for improved translational research. The authors also provide examples of how the open-source BRICS software is being used by the National Institutes of Health and other organizations. They conclude that not only does BRICS further biomedical digital data stewardship under the FAIR principles, but it also "results in sustainable digital biomedical repositories that ensure higher data quality."
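
To make the idea of common data elements more concrete, the following is a minimal Python sketch of checking a submitted record against a set of CDE definitions before it enters a repository. It is not BRICS code or its API: the `CommonDataElement` class, the `validate_record` function, and the three data element names are hypothetical, and real CDE definitions typically carry more metadata than the type, permissible values, and range shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CommonDataElement:
    """Hypothetical, simplified CDE: a named variable with a type and allowed values."""
    name: str
    data_type: type
    permissible_values: frozenset = frozenset()
    minimum: Optional[float] = None
    maximum: Optional[float] = None
    required: bool = True


def validate_record(record: dict, cdes: list) -> list:
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for cde in cdes:
        value = record.get(cde.name)
        if value is None:
            if cde.required:
                problems.append(f"{cde.name}: missing required value")
            continue
        if not isinstance(value, cde.data_type):
            problems.append(
                f"{cde.name}: expected {cde.data_type.__name__}, got {type(value).__name__}"
            )
            continue
        if cde.permissible_values and value not in cde.permissible_values:
            problems.append(f"{cde.name}: {value!r} is not a permissible value")
        if cde.minimum is not None and value < cde.minimum:
            problems.append(f"{cde.name}: {value} is below minimum {cde.minimum}")
        if cde.maximum is not None and value > cde.maximum:
            problems.append(f"{cde.name}: {value} is above maximum {cde.maximum}")
    # Fields not defined by any CDE cannot be harmonized across studies, so flag them.
    known = {cde.name for cde in cdes}
    problems.extend(f"{key}: not a defined data element" for key in record if key not in known)
    return problems


# Hypothetical CDE set for illustration only.
CDES = [
    CommonDataElement("AgeYears", int, minimum=0, maximum=120),
    CommonDataElement("Sex", str, frozenset({"Female", "Male", "Unknown"})),
    CommonDataElement("GlasgowComaScaleTotal", int, minimum=3, maximum=15, required=False),
]

print(validate_record({"AgeYears": 34, "Sex": "F", "GlasgowComaScaleTotal": 16}, CDES))
# ["Sex: 'F' is not a permissible value", 'GlasgowComaScaleTotal: 16 is above maximum 15']
```

Flagging nonconforming values at submission time, rather than during later analysis, is one plausible way CDEs support the data quality and consistency the authors describe.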

In this 2020 article published in Frontiers in Public Health, Cicek and Olson of Mayo Clinic discuss the importance of biorepository operations and their focus on the storage and management of biospecimens. In particular, they address the importance of maintaining quality biospecimens and enacting processes and procedures that fulfill the long-term goals of biobanking institutions. After a brief introduction of biobanks and their relation to translational research, the authors discuss the various aspects that go into long-term planning for a successful biobank, including the development of standard operating procedures and staff training programs, as well as the implementation of informatics tools such as the laboratory information management system (LIMS). They conclude by emphasizing that "[b]iorepository operations require an enormous amount of support, from lab and storage space, information technology expertise, and a LIMS to logistics for biospecimen tracking, quality management systems, and appropriate facilities" in order to be most effective in their goals.

Though the COVID-19 pandemic has been in force for months, seemingly little has been published (even ahead of print) in academic journals on the technological challenges of managing the growing mountain of research and clinical diagnostic data. Weemaes et al. are one of the exceptions, with this June pre-print publication in the Journal of the American Medical Informatics Association. The authors, from Belgium's University Hospitals Leuven, present their approach to rapidly expanding their laboratory information system (LIS) to address the sudden workflow bottlenecks associated with COVID-19 testing. Using a change management framework to drive the work forward quickly, the authors were able "to streamline sample ordering through a CPOE system, and streamline reporting by developing a database with contact details of all laboratories in Belgium," while also improving epidemiological reporting and enabling exploratory data mining for scientific research. They also address the often-overlooked element of "meaningful use" of their LIS.

With COVID-19, we think of small outbreaks occurring from humans being in close proximity at restaurants, parties, and other events. But what about laboratories? Zuckerman et al. share their tale of a small COVID-19 outbreak at Israel’s Central Virology Laboratory (ICVL) in mid-March 2020 and how they used their laboratory's own testing technology to determine the transmission sources. With eight known individuals testing positive for the SARS-CoV-2 virus overall, the researchers walk step by step through their quarantine and testing processes to "elucidate person-to-person transmission events, map individual and common mutations, and examine suspicions regarding contaminated surfaces." Their analyses found that person-to-person contact, not contact with contaminated surfaces, was the transmission path. They conclude that their overall analysis verifies the value of molecular testing and complete viral genome sequencing for determining transmission vectors "and confirms that the strict safety regulations observed in ICVL most likely prevented further spread of the virus."

In this 2020 short report, Dixon et al. present lessons learned from attempts to expand data quality measures in the open-source tool Observational Health Data Science and Informatics (OHDSI). Noting a general lack of data quality assessment and improvement mechanisms in health information systems, the researchers sought to improve OHDSI for public health surveillance use cases. After explaining the practical uses of OHDSI, the authors make their case for why measuring completeness, timeliness, and information entropy within OHDSI would be useful. Though they did not get approval to add timeliness to the system, they conclude that high value remains "in adapting existing infrastructure and tools to support expanded use cases rather than to just create independent tools for use by a niche group."
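
Each of the three measures discussed above can be computed fairly simply. The Python sketch below uses common textbook definitions, assumed here rather than taken from the paper or from OHDSI itself: completeness as the fraction of non-missing values in a field, information entropy as the Shannon entropy $H = -\sum_i p_i \log_2 p_i$ (in bits) of the field's observed values, and timeliness as the median delay between an event and its report. The function names and the `event`/`reported` record keys are hypothetical.

```python
import math
from collections import Counter
from datetime import date
from statistics import median


def _present(value) -> bool:
    """Treat None and empty strings as missing."""
    return value is not None and value != ""


def completeness(values: list) -> float:
    """Fraction of values in a field that are present."""
    if not values:
        return 0.0
    return sum(1 for v in values if _present(v)) / len(values)


def shannon_entropy(values: list) -> float:
    """Shannon entropy H = -sum(p_i * log2(p_i)), in bits, over non-missing values."""
    observed = [v for v in values if _present(v)]
    if not observed:
        return 0.0
    n = len(observed)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(observed).values())


def median_reporting_delay_days(records: list):
    """Median days between each record's event date and report date (timeliness)."""
    delays = [
        (r["reported"] - r["event"]).days
        for r in records
        if r.get("event") is not None and r.get("reported") is not None
    ]
    return median(delays) if delays else None


field_values = ["A", "B", "A", None, "B", ""]
print(round(completeness(field_values), 3))  # 0.667
print(shannon_entropy(field_values))  # 1.0

cases = [
    {"event": date(2020, 3, 1), "reported": date(2020, 3, 4)},
    {"event": date(2020, 3, 2), "reported": date(2020, 3, 3)},
    {"event": date(2020, 3, 5), "reported": date(2020, 3, 10)},
    {"event": date(2020, 3, 6), "reported": None},
]
print(median_reporting_delay_days(cases))  # 3
```

A field with very low entropy (for example, one where nearly every record carries the same default code) may point to a data entry problem even when its completeness looks perfect, which is one reason to track both measures together.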

This February 2020 paper published in BMC Medical Informatics and Decision Making examines the state of health information exchange in Québec and other parts of Canada and how its application to laboratory medicine might be improved. In particular, this work examines laboratory information exchange (LIE) systems that "improve the reliability of the laboratory testing process" and integrate "with other clinical information systems (CISs) physicians use in hospitals." Surveying hospital-based specialist physicians, Raymond et al. paint a picture of how various clinical information systems are used, which functionality is and is not being used, and how physicians view the potential benefits of the clinical systems they use. They conclude that these systems have a strongly "complementary nature" and that "system designers should take a step back to imagine a way to design systems as part of an interconnected network of features."

In this 2020 paper published in the journal Metabolites, Krill et al. of Australia's AgriBio present their work toward reducing the runtimes and extraction complexity associated with quantifying terpenes in cannabis biomass. Noting the evolving needs of high-throughput cannabis breeding programs, the researchers present a method "based on a simple hexane extract from 40 mg of biomass, with 50 μg/mL dodecane as internal standard, and a gradient of less than 30 minutes." After providing background on terpene extraction, the researchers discuss the various aspects of their method and detail the materials and equipment used. They conclude that their method "covers a large cross-section of commonly detected cannabis volatiles, is validated for a large proportion of compounds it covers, and offers significant improvement in terms of sample preparation and sample throughput over previously published studies."
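
An internal standard such as the dodecane mentioned above is generally used by comparing each analyte's peak area to the internal standard's peak area, which helps compensate for variation in injection and sample preparation. The Python sketch below shows a generic internal-standard calibration under that assumption: fit the area ratio against known standard concentrations by ordinary least squares, then invert the line for an unknown sample. The calibration values, the 1 mL extract volume, and all function names are hypothetical; the paper's actual calibration and validation procedure is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    slope: float
    intercept: float

    def concentration(self, analyte_area: float, is_area: float) -> float:
        """Invert the calibration line for a sample's analyte/internal-standard area ratio."""
        return (analyte_area / is_area - self.intercept) / self.slope


def fit_internal_standard_calibration(concs, analyte_areas, is_areas) -> Calibration:
    """Ordinary least-squares fit of (analyte area / IS area) against known concentration."""
    ratios = [a / i for a, i in zip(analyte_areas, is_areas)]
    n = len(concs)
    mean_x = sum(concs) / n
    mean_y = sum(ratios) / n
    sxx = sum((x - mean_x) ** 2 for x in concs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(concs, ratios))
    slope = sxy / sxx
    return Calibration(slope=slope, intercept=mean_y - slope * mean_x)


def to_mg_per_g(conc_ug_per_ml: float, extract_volume_ml: float, biomass_mg: float) -> float:
    """Convert an extract concentration to content in the biomass (ug/mg equals mg/g)."""
    return conc_ug_per_ml * extract_volume_ml / biomass_mg


# Hypothetical calibration standards (ug/mL), each spiked with the same internal standard.
standards = [1.0, 5.0, 10.0, 25.0, 50.0]
analyte_peak_areas = [20.0, 110.0, 180.0, 500.0, 1050.0]
dodecane_peak_areas = [1000.0, 1100.0, 900.0, 1000.0, 1050.0]

cal = fit_internal_standard_calibration(standards, analyte_peak_areas, dodecane_peak_areas)
sample_conc = cal.concentration(analyte_area=300.0, is_area=1000.0)
print(round(sample_conc, 3))  # 15.0
# Assumes a hypothetical 1 mL hexane extract of the 40 mg biomass sample.
print(round(to_mg_per_g(sample_conc, extract_volume_ml=1.0, biomass_mg=40.0), 3))  # 0.375
```

Because every sample receives the same internal standard concentration, it is the area ratio rather than the raw analyte area that gets calibrated, so a smaller or larger injection shifts both peaks together and largely cancels out.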

In this data resource review, researchers at several Danish hospitals discuss how Denmark uses the routine biomarker data collected by two laboratory information systems (LISs), as well as how that data can be accessed for research. The researchers explain how data is collected into the LISs, how data quality is managed, and how the data is used, providing several real-world examples. They then discuss the strengths and weaknesses of the data for epidemiological research. They emphasize "that access to data on routine biomarkers expands the detailed biological and clinical information available on patients in the Danish healthcare system," while the "full potential is enabled through linkage to other Danish healthcare registries."

Effective biobanking of biospecimens for multicenter studies today requires more than spreadsheets and paper documents; it requires a software system capable of improving workflows and data sharing while keeping sensitive personal information deidentified. Both commercial off-the-shelf (COTS) and open-source biobanking laboratory information management systems (LIMS) are available, but, as the University of Cologne found, those options may be too complex to implement or too costly for multicenter research networks. The university took matters into its own hands and developed the HIV Engaged Research Technology (HEnRY) LIMS, which has since expanded into a broader, open-source biobanking solution that can be applied to contexts beyond HIV research. This 2020 paper discusses the LIMS and its development and application, concluding that it offers "immense potential for emerging research groups, especially in the setting of limited resources and/or complex multicenter studies."
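
One common way to keep participant identities out of a shared biobanking database, while still linking all specimens from the same participant, is keyed-hash pseudonymization. The Python sketch below derives a stable pseudonym with HMAC-SHA256 from Python's standard library. It illustrates the general technique only and is an assumption, not a description of how HEnRY handles deidentification; the `pseudonymize` function, the site codes, and the key are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, site: str, secret_key: bytes, length: int = 12) -> str:
    """Derive a stable, non-reversible study pseudonym from a site-local patient ID.

    The same (site, patient_id) pair always yields the same pseudonym, so specimens from
    one participant can be linked across visits without storing the raw identifier.
    """
    normalized = patient_id.strip().upper()  # avoid spurious mismatches from formatting
    message = f"{site}:{normalized}".encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return f"{site}-{digest[:length]}"


# Hypothetical key; in practice it would be generated randomly and kept out of the database.
KEY = b"example-key-held-by-a-trusted-party"

a = pseudonymize("ab123", "COL", KEY)
b = pseudonymize(" AB123 ", "COL", KEY)
print(a == b)  # True
print(a.startswith("COL-"), len(a))  # True 16
```

Under this design the secret key would need to be held outside the shared database (for example, by a trusted party at each site), since anyone holding it could re-derive pseudonyms from known IDs. Truncating the digest to 12 hexadecimal characters keeps specimen labels short, but a real deployment would still need to check for collisions.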

This "short communication" paper published in the International Journal of Infectious Diseases describes the results of simplifying reverse transcription and real-time quantitative PCR (RT-qPCR) analyses for SARS-CoV-2 by skipping the RNA extraction step and instead testing three types of direct heating of the nasopharyngeal swab sample. Of the three methods tested (heating directly without additives, heating with a formamide-EDTA buffer, and heating with an RNAsnap buffer), the direct heating method "provided the best results, which were highly consistent with the SARS-CoV-2 infection diagnosis based on standard RNA extraction," while also taking nearly half the processing time. The authors warn, however, that "choice of RT-qPCR kits might have an impact on the sensitivity of the Direct protocol" for anyone trying to replicate their results.

When it comes to analyzing the effects on humans of chemicals and other substances that make their way into the environment, "large, complex data sets" are often required. However, those data sets are often disparate and inconsistent. This has made epidemiological studies of air pollution and other types of environmental contamination difficult and limited in effectiveness, and the problem has only been compounded in the age of big data. Comess et al. address the challenges associated with today's environmental public health data in this recent paper published in Frontiers in Artificial Intelligence and discuss how augmented intelligence, artificial intelligence (AI), and machine learning can enhance understanding of that data. However, they note, additional work is needed not only to improve analysis but also to improve how data is collected and shared, how researchers are trained in scientific computing and data science, and how widely the benefits of AI and machine learning are understood. They conclude their paper with a table of five distinct challenges and their recommended approach to each, so as to "create an environment that will foster the data revolution" in environmental public health and beyond.

In this 2020 paper published in the journal Toxins, Di Nardo et al. of the University of Turin present their findings from adapting an existing enzyme immunoassay to cannabis testing in order to more accurately detect mycotoxins, including aflatoxins such as aflatoxin B1 (AFB1). Citing benefits such as fewer steps, less costly equipment, and lower training demands, the authors viewed the application of enzyme immunoassay to testing cannabis for mycotoxins as a worthy endeavor. Di Nardo et al. discuss at length how they converted an immunoassay for measuring aflatoxins in eggs to one for the cannabis substrate, as well as the various challenges and caveats associated with the resulting methodology. Compared with techniques such as ultra-high performance liquid chromatography coupled to high resolution tandem mass spectrometry, the authors concluded, enzyme immunoassay more readily allows for "wide applications in low resource settings and for the affordable monitoring of the safety of cannabis products, including those used recreationally and as a food supplement."
